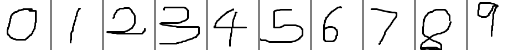

TensorRT Samples: MNIST API

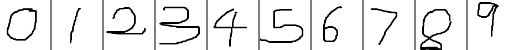

The following test code recognizes the handwritten digits 0-9. It is adapted from sampleMNISTAPI.cpp in TensorRT 2.1.2. The contents of each file are as follows:

common.hpp:

    #ifndef FBC_TENSORRT_TEST_COMMON_HPP_
    #define FBC_TENSORRT_TEST_COMMON_HPP_

    #include <cstdio>
    #include <iostream>
    #include <cuda_runtime.h>
    #include <device_launch_parameters.h>
    #include <NvInfer.h>

    template< typename T >
    static inline int check_Cuda(T result, const char * const func, const char * const file, const int line)
    {
        if (result) {
            fprintf(stderr, "Error CUDA: at %s: %d, error code=%d, func: %s\n", file, line, static_cast<unsigned int>(result), func);
            cudaDeviceReset(); // Make sure we call CUDA Device Reset before exiting
            return -1;
        }
        return 0; // success
    }

    template< typename T >
    static inline int check(T result, const char * const func, const char * const file, const int line)
    {
        if (result) {
            fprintf(stderr, "Error: at %s: %d, error code=%d, func: %s\n", file, line, static_cast<unsigned int>(result), func);
            return -1;
        }
        return 0; // success
    }

    #define checkCudaErrors(val) check_Cuda((val), __FUNCTION__, __FILE__, __LINE__)
    #define checkErrors(val) check((val), __FUNCTION__, __FILE__, __LINE__)

    // Note: CHECK returns -1 from the enclosing function, so use it only in functions that return int.
    #define CHECK(x) { \
        if (x) {} \
        else { fprintf(stderr, "Check Failed: %s, file: %s, line: %d\n", #x, __FILE__, __LINE__); return -1; } \
    }

    // Logger for GIE info/warning/errors
    class Logger : public nvinfer1::ILogger
    {
        void log(Severity severity, const char* msg) override
        {
            // suppress info-level messages
            if (severity != Severity::kINFO)
                std::cout << msg << std::endl;
        }
    };

    #endif // FBC_TENSORRT_TEST_COMMON_HPP_

Test source file (adapted from sampleMNISTAPI.cpp):

    #include <string>

    #include <fstream>
    #include <iostream>
    #include <map>
    #include <tuple>
    #include <vector>
    #include <cassert>
    #include <cstdint>
    #include <cstdlib>
    #include <NvInfer.h>
    #include <NvCaffeParser.h>
    #include <cuda_runtime_api.h>
    #include <opencv2/opencv.hpp>
    #include "common.hpp"

    // reference: TensorRT-2.1.2/samples/sampleMNIST/sampleMNISTAPI.cpp

    // input width, input height, output size, input blob name, output blob name, weight file, mean file
    typedef std::tuple<int, int, int, std::string, std::string, std::string, std::string> DATA_INFO;

    // Our weight files are in a very simple space delimited format.
    // [type] [size] <data x size in hex>

    static std::map<std::string, nvinfer1::Weights> loadWeights(const std::string& file)
    {
        std::map<std::string, nvinfer1::Weights> weightMap;
        std::ifstream input(file);
        if (!input.is_open()) {
            fprintf(stderr, "Unable to load weight file: %s\n", file.c_str());
            return weightMap;
        }

        // the first token is the number of weight blobs in the file
        int32_t count = 0;
        input >> count;
        if (count <= 0) {
            fprintf(stderr, "Invalid weight map file: %d\n", count);
            return weightMap;
        }

        while (count--) {
            nvinfer1::Weights wt{nvinfer1::DataType::kFLOAT, nullptr, 0};
            uint32_t type, size;
            std::string name;
            input >> name >> std::dec >> type >> size;
            wt.type = static_cast<nvinfer1::DataType>(type);

            if (wt.type == nvinfer1::DataType::kFLOAT) {
                // each value is stored as the hex bit pattern of a 32-bit float
                uint32_t *val = reinterpret_cast<uint32_t*>(malloc(sizeof(*val) * size));
                for (uint32_t x = 0, y = size; x < y; ++x) {
                    input >> std::hex >> val[x];
                }
                wt.values = val;
            } else if (wt.type == nvinfer1::DataType::kHALF) {
                // each value is stored as the hex bit pattern of a 16-bit float
                uint16_t *val = reinterpret_cast<uint16_t*>(malloc(sizeof(*val) * size));
                for (uint32_t x = 0, y = size; x < y; ++x) {
                    input >> std::hex >> val[x];
                }
                wt.values = val;
            }

            wt.count = size;
            weightMap[name] = wt;
        }

        return weightMap;
    }

    // Create the engine using only the API and not any parser.

    static nvinfer1::ICudaEngine* createMNISTEngine(unsigned int maxBatchSize, nvinfer1::IBuilder* builder, nvinfer1::DataType dt, const DATA_INFO& info)
    {
        nvinfer1::INetworkDefinition* network = builder->createNetwork();

        // Create input of shape { 1, 1, 28, 28 } with name referenced by INPUT_BLOB_NAME
        auto data = network->addInput(std::get<3>(info).c_str(), dt, nvinfer1::DimsCHW{ 1, std::get<1>(info), std::get<0>(info)});
        assert(data != nullptr);

        // Create a scale layer with default power/shift and specified scale parameter.
        float scale_param = 0.0125f;
        nvinfer1::Weights power{nvinfer1::DataType::kFLOAT, nullptr, 0};
        nvinfer1::Weights shift{nvinfer1::DataType::kFLOAT, nullptr, 0};
        nvinfer1::Weights scale{nvinfer1::DataType::kFLOAT, &scale_param, 1};
        auto scale_1 = network->addScale(*data, nvinfer1::ScaleMode::kUNIFORM, shift, scale, power);
        assert(scale_1 != nullptr);

        // Add a convolution layer with 20 outputs and a 5x5 filter.
        std::map<std::string, nvinfer1::Weights> weightMap = loadWeights(std::get<5>(info));
        auto conv1 = network->addConvolution(*scale_1->getOutput(0), 20, nvinfer1::DimsHW{5, 5}, weightMap["conv1filter"], weightMap["conv1bias"]);
        assert(conv1 != nullptr);
        conv1->setStride(nvinfer1::DimsHW{1, 1});

        // Add a max pooling layer with stride of 2x2 and kernel size of 2x2.
        auto pool1 = network->addPooling(*conv1->getOutput(0), nvinfer1::PoolingType::kMAX, nvinfer1::DimsHW{2, 2});
        assert(pool1 != nullptr);
        pool1->setStride(nvinfer1::DimsHW{2, 2});

        // Add a second convolution layer with 50 outputs and a 5x5 filter.
        auto conv2 = network->addConvolution(*pool1->getOutput(0), 50, nvinfer1::DimsHW{5, 5}, weightMap["conv2filter"], weightMap["conv2bias"]);
        assert(conv2 != nullptr);
        conv2->setStride(nvinfer1::DimsHW{1, 1});

        // Add a second max pooling layer with stride of 2x2 and kernel size of 2x2.
        auto pool2 = network->addPooling(*conv2->getOutput(0), nvinfer1::PoolingType::kMAX, nvinfer1::DimsHW{2, 2});
        assert(pool2 != nullptr);
        pool2->setStride(nvinfer1::DimsHW{2, 2});

        // Add a fully connected layer with 500 outputs.
        auto ip1 = network->addFullyConnected(*pool2->getOutput(0), 500, weightMap["ip1filter"], weightMap["ip1bias"]);
        assert(ip1 != nullptr);

        // Add an activation layer using the ReLU algorithm.
        auto relu1 = network->addActivation(*ip1->getOutput(0), nvinfer1::ActivationType::kRELU);
        assert(relu1 != nullptr);

        // Add a second fully connected layer with one output per class (10 for the digits 0-9).
        auto ip2 = network->addFullyConnected(*relu1->getOutput(0), std::get<2>(info), weightMap["ip2filter"], weightMap["ip2bias"]);
        assert(ip2 != nullptr);

        // Add a softmax layer to determine the probability.
        auto prob = network->addSoftMax(*ip2->getOutput(0));
        assert(prob != nullptr);
        prob->getOutput(0)->setName(std::get<4>(info).c_str());
        network->markOutput(*prob->getOutput(0));

        // Build the engine
        builder->setMaxBatchSize(maxBatchSize);
        builder->setMaxWorkspaceSize(1 << 20);

        auto engine = builder->buildCudaEngine(*network);
        // we don't need the network any more
        network->destroy();

        // Once we have built the cuda engine, we can release all of our held memory.
        for (auto &mem : weightMap) {
            free((void*)(mem.second.values));
        }

        return engine;
    }

    static int APIToModel(unsigned int maxBatchSize, // batch size - NB must be at least as large as the batch we want to run with
        nvinfer1::IHostMemory** modelStream, Logger& logger, const DATA_INFO& info)

    {
        // create the builder
        nvinfer1::IBuilder* builder = nvinfer1::createInferBuilder(logger);

        // create the model to populate the network, then set the outputs and create an engine
        nvinfer1::ICudaEngine* engine = createMNISTEngine(maxBatchSize, builder, nvinfer1::DataType::kFLOAT, info);
        CHECK(engine != nullptr);

        // serialize the engine, then close everything down
        (*modelStream) = engine->serialize();
        engine->destroy();
        builder->destroy();

        return 0;
    }

    static int doInference(nvinfer1::IExecutionContext& context, float* input, float* output, int batchSize, const DATA_INFO& info)

    {
        const nvinfer1::ICudaEngine& engine = context.getEngine();
        // input and output buffer pointers that we pass to the engine - the engine requires exactly IEngine::getNbBindings(),
        // of these, but in this case we know that there is exactly one input and one output.
        CHECK(engine.getNbBindings() == 2);
        void* buffers[2];

        // In order to bind the buffers, we need to know the names of the input and output tensors.
        // note that indices are guaranteed to be less than IEngine::getNbBindings()
        int inputIndex = engine.getBindingIndex(std::get<3>(info).c_str()),
            outputIndex = engine.getBindingIndex(std::get<4>(info).c_str());

        // create GPU buffers and a stream
        checkCudaErrors(cudaMalloc(&buffers[inputIndex], batchSize * std::get<1>(info) * std::get<0>(info) * sizeof(float)));
        checkCudaErrors(cudaMalloc(&buffers[outputIndex], batchSize * std::get<2>(info) * sizeof(float)));

        cudaStream_t stream;
        checkCudaErrors(cudaStreamCreate(&stream));

        // DMA the input to the GPU, execute the batch asynchronously, and DMA it back:
        checkCudaErrors(cudaMemcpyAsync(buffers[inputIndex], input, batchSize * std::get<1>(info) * std::get<0>(info) * sizeof(float), cudaMemcpyHostToDevice, stream));
        context.enqueue(batchSize, buffers, stream, nullptr);
        checkCudaErrors(cudaMemcpyAsync(output, buffers[outputIndex], batchSize * std::get<2>(info) * sizeof(float), cudaMemcpyDeviceToHost, stream));
        cudaStreamSynchronize(stream);

        // release the stream and the buffers
        cudaStreamDestroy(stream);
        checkCudaErrors(cudaFree(buffers[inputIndex]));
        checkCudaErrors(cudaFree(buffers[outputIndex]));

        return 0;
    }

    int test_mnist_api()

    {
        Logger logger; // multiple instances of IRuntime and/or IBuilder must all use the same logger
        // stuff we know about the network and the caffe input/output blobs
        const DATA_INFO info(28, 28, 10, "data", "prob", "models/mnistapi.wts", "models/mnist_mean.binaryproto");
        const int input_size = std::get<1>(info) * std::get<0>(info);
        const int output_size = std::get<2>(info);

        // create a model using the API directly and serialize it to a stream
        nvinfer1::IHostMemory* modelStream{ nullptr };
        CHECK(APIToModel(1, &modelStream, logger, info) == 0);

        // parse the mean file produced by caffe and subtract it from the image
        nvcaffeparser1::ICaffeParser* parser = nvcaffeparser1::createCaffeParser();
        nvcaffeparser1::IBinaryProtoBlob* meanBlob = parser->parseBinaryProto(std::get<6>(info).c_str());
        parser->destroy();
        const float* meanData = reinterpret_cast<const float*>(meanBlob->getData());

        nvinfer1::IRuntime* runtime = nvinfer1::createInferRuntime(logger);
        nvinfer1::ICudaEngine* engine = runtime->deserializeCudaEngine(modelStream->data(), modelStream->size(), nullptr);
        nvinfer1::IExecutionContext* context = engine->createExecutionContext();

        const std::string image_path{ "images/digit/" };
        for (int i = 0; i < 10; ++i) {
            const std::string image_name = image_path + std::to_string(i) + ".png";
            cv::Mat mat = cv::imread(image_name, 0); // 0: load as single-channel grayscale
            if (!mat.data) {
                fprintf(stderr, "read image fail: %s\n", image_name.c_str());
                return -1;
            }

            cv::resize(mat, mat, cv::Size(std::get<0>(info), std::get<1>(info)));
            mat.convertTo(mat, CV_32FC1);

            // std::vector replaces the original variable-length arrays, which are not standard C++
            std::vector<float> data(input_size);
            const float* p = (float*)mat.data;
            for (int j = 0; j < input_size; ++j) {
                data[j] = p[j] - meanData[j];
            }

            // run inference
            std::vector<float> prob(output_size);
            doInference(*context, data.data(), prob.data(), 1, info);

            // pick the class with the highest probability
            float val{-1.f};
            int idx{-1};
            for (int t = 0; t < output_size; ++t) {
                if (val < prob[t]) {
                    val = prob[t];
                    idx = t;
                }
            }

            fprintf(stdout, "expected value: %d, actual value: %d, probability: %f\n", i, idx, val);
        }

        meanBlob->destroy();
        if (modelStream) modelStream->destroy();
        // destroy the engine
        context->destroy();
        engine->destroy();
        runtime->destroy();

        return 0;
    }
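The weight-file layout that `loadWeights` reads (an entry count, then for each entry a name, a type code, an element count, and that many hex words) can be tried out without TensorRT installed. The following is a minimal sketch under stated assumptions, not the sample's own code: `WeightBlob` and `parseWeights` are hypothetical names introduced here, only 32-bit float entries are handled, and the data is read from any `std::istream` rather than a file path.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <istream>
#include <map>
#include <sstream>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for nvinfer1::Weights that owns its data.
struct WeightBlob {
    uint32_t type = 0;          // type code as written in the file (0 corresponds to kFLOAT)
    std::vector<float> values;  // decoded FP32 values
};

// Parse "<count>" followed by "<name> <type> <size> <hex> <hex> ..." per entry.
// Assumption: every entry holds 32-bit floats. Returns an empty map on malformed input.
std::map<std::string, WeightBlob> parseWeights(std::istream& input)
{
    std::map<std::string, WeightBlob> weights;
    int32_t count = 0;
    if (!(input >> count) || count <= 0) return weights;

    while (count--) {
        std::string name;
        uint32_t type = 0, size = 0;
        if (!(input >> name >> std::dec >> type >> size)) return {};

        WeightBlob blob;
        blob.type = type;
        blob.values.resize(size);
        for (uint32_t i = 0; i < size; ++i) {
            uint32_t bits = 0;
            if (!(input >> std::hex >> bits)) return {};
            // Reinterpret the 32-bit pattern as a float without violating aliasing rules.
            std::memcpy(&blob.values[i], &bits, sizeof(float));
        }
        weights[name] = std::move(blob);
    }
    return weights;
}
```

Feeding the function a `std::istringstream` holding a few hand-written entries is a quick way to check a weight file's structure before handing it to the engine builder. Owning the data in a `std::vector` also avoids the manual `malloc`/`free` pairing used in the listing, at the cost of keeping the map alive until the engine is built.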

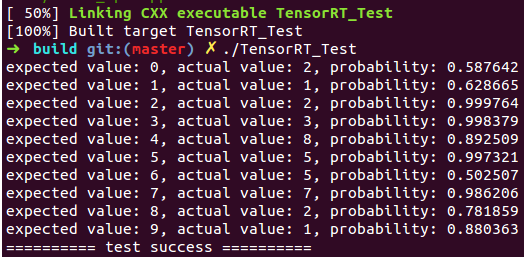

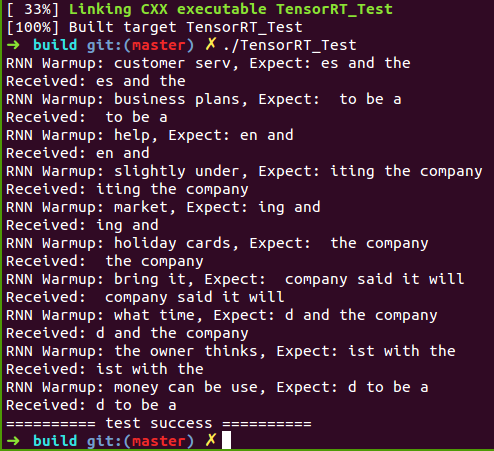

The execution results are as follows (consistent with the results in http://blog.csdn.net/fengbingchun/article/details/78552908):
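Each output line pairs the expected digit with the index of the largest value in the softmax output, as done by the loop at the end of `test_mnist_api`. That selection step can be sketched on its own as follows; `pickDigit` is a hypothetical helper name introduced here, and the probabilities in the usage note are made-up illustration values, not results from the test run.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Return {index, value} of the largest probability.
// For an empty input the result is {-1, -1.f}, matching the initial values used in the listing.
std::pair<int, float> pickDigit(const std::vector<float>& prob)
{
    int idx = -1;
    float val = -1.f;
    for (std::size_t t = 0; t < prob.size(); ++t) {
        if (val < prob[t]) {  // strict comparison: the first maximum wins on ties
            val = prob[t];
            idx = static_cast<int>(t);
        }
    }
    return {idx, val};
}
```

For example, `pickDigit({0.01f, 0.02f, 0.9f, 0.07f})` selects index 2, so an image of the digit 2 would be reported as a match.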

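The steps for building the test code are as follows (ReadMe.txt):

To build the test code in TensorRT_Test with CMake on Linux:

1. In a terminal, change to CUDA_Test/prj/linux_tensorrt_cmake and run the following commands in order:

        $ mkdir build
        $ cd build
        $ cmake ..
        $ make                                  (builds the TensorRT_Test executable)
        $ ln -s ../../../test_data/models ./    (symlinks the models directory into build)
        $ ln -s ../../../test_data/images ./    (symlinks the images directory into build)
        $ ./TensorRT_Test

2. For operations that read images with OpenCV, first change the image paths in the corresponding files to a path format that Linux supports.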

GitHub: https://github.com/fengbingchun/CUDA_Test

相关文章:

免费学习AI公开课:打卡、冲击排行榜,还有福利领取

CSDN 技术公开课 Plus--AI公开课再度升级内容全新策划:贴近开发者,更多样、更落地形式多样升级:线上线下、打卡学习,资料福利,共同交流成长,扫描下方小助手二维码,回复:公开课&#…

Gamma阶段第一次scrum meeting

每日任务内容 队员昨日完成任务明日要完成的任务张圆宁#91 用户体验与优化:发现用户体验细节问题https://github.com/rRetr0Git/rateMyCourse/issues/91#91 用户体验与优化:发现并优化用户体验,修复问题https://github.com/rRetr0Git/rateMyC…

windows 切换 默认 jdk 版本

set JAVA_HOMEC:\jdk1.6.0u24 set PATH%JAVA_HOME%\bin;%PATH%转载于:https://www.cnblogs.com/dmdj/p/3756887.html

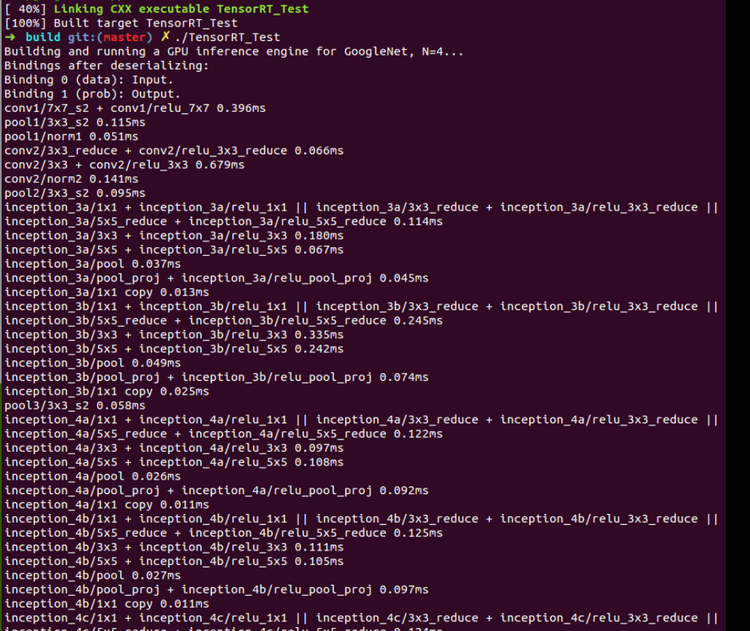

TensorRT Samples: GoogleNet

关于TensorRT的介绍可以参考: http://blog.csdn.net/fengbingchun/article/details/78469551 以下是参考TensorRT 2.1.2中的sampleGoogleNet.cpp文件改写的测试代码,文件(googlenet.cpp)内容如下:#include <iostream> #include <t…

Visual Studio Code Go 插件文档翻译

此插件为 Go 语言在 VS Code 中开发提供了多种语言支持。 阅读版本变更日志了解此插件过去几个版本的更改内容。 1. 语言功能 (Language Features) 1.1 智能感知 (IntelliSense) 编码时符号自动补全(使用 gocode )编码时函数签名帮助提示(使用…

资源 | 吴恩达《机器学习训练秘籍》中文版58章节完整开源

整理 | Jane出品 | AI科技大本营(ID:rgznai100)一年前,吴恩达老师的《Machine Learning Yearning》(机器学习训练秘籍)中文版正式发布,经过一年多的陆续更新,近日,这本书的中文版 58…

js字符串加密的几种方法

在做web前端的时候免不了要用javascript来处理一些简单操作,其实如果要用好JQuery, Prototype,Dojo 等其中一两个javascript框架并不简单,它提高你的web交互和用户体验,从而能使你的web前端有非一样的感觉,如海阔凭鱼跃。当然&…

Vue开发入门看这篇文章就够了

摘要: 很多值得了解的细节。 原文:Vue开发看这篇文章就够了作者:RandomFundebug经授权转载,版权归原作者所有。 介绍 Vue 中文网Vue githubVue.js 是一套构建用户界面(UI)的渐进式JavaScript框架库和框架的区别 我们所说的前端框架…

TensorRT Samples: CharRNN

关于TensorRT的介绍可以参考: http://blog.csdn.net/fengbingchun/article/details/78469551 以下是参考TensorRT 2.1.2中的sampleCharRNN.cpp文件改写的测试代码,文件(charrnn.cpp)内容如下:#include <assert.h> #include <str…

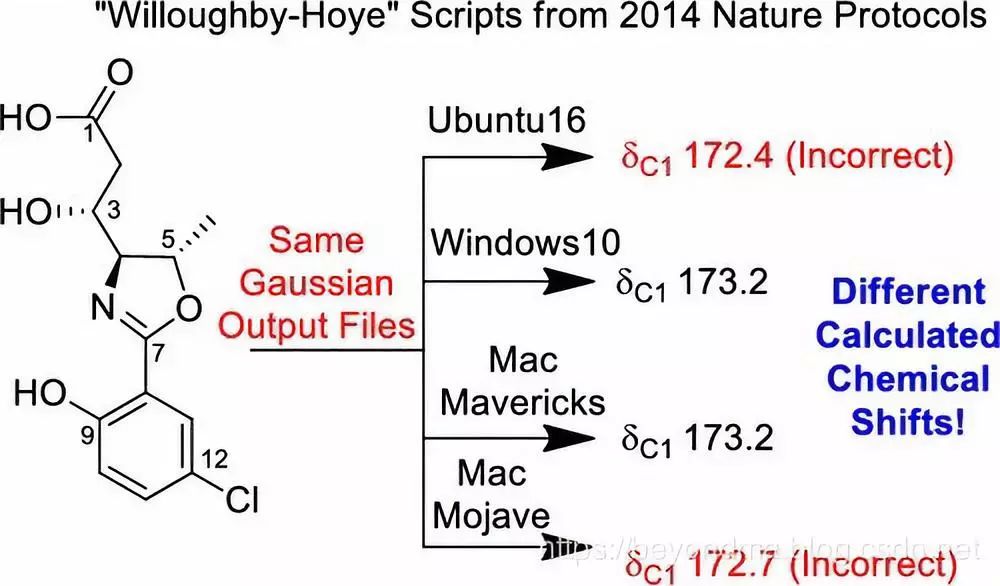

Python脚本BUG引发学界震动,影响有多大?

作者 | beyondma编辑 | Jane来源 | CSDN博客近日一篇“A guide to small-molecule structure assignment through computation of (1H and 13C) NMR chemical shifts”文章火爆网络,据作者看到的资料上看这篇论文自身的结果没有什么问题,但是,…

C++中public、protect和private用法区别

Calsspig : public animal,意思是外部代码可以随意访问 Classpig : protect animal ,意思是外部代码无法通过该子类访问基类中的public Classpig : private animal ,意思是告诉编译器从基类继承的每一个成员都当成private,即只有这个子类可以访问 转载于:https://blog.51cto.…

TensorRT Samples: MNIST(Plugin, add a custom layer)

关于TensorRT的介绍可以参考:http://blog.csdn.net/fengbingchun/article/details/78469551 以下是参考TensorRT 2.1.2中的samplePlugin.cpp文件改写的通过IPlugin添加一个全连接层实现对手写数字0-9识别的测试代码,plugin.cpp文件内容如下:…

AutoML很火,过度吹捧的结果?

作者 | Denis Vorotyntsev译者 | Shawnice编辑 | Jane出品 | AI科技大本营(ID:rgznai100)【导语】现在,很多企业都很关注AutoML领域,很多开发者也开始接触和从事AutoML相关的研究与应用工作,作者也是&#…

tomcat6 配置web管理端访问权限

配置tomcat 管理端登陆 /apache-tomcat-6.0.35/conf/tomcat-users.xml 配置文件,使用时需要把注释去掉<!-- <!-- <role rolename"tomcat"/> <role rolename"role1"/> <user username"tomcat" password"…

@程序员:Python 3.8正式发布,重要新功能都在这里

整理 | Jane、夕颜出品 | AI科技大本营(ID:rgznai100)【导读】最新版本的Python发布了!今年夏天,Python 3.8发布beta版本,但在2019年10月14日,第一个正式版本已准备就绪。现在,我们都…

TensorRT Samples: MNIST(serialize TensorRT model)

关于TensorRT的介绍可以参考: http://blog.csdn.net/fengbingchun/article/details/78469551 这里实现在构建阶段将TensorRT model序列化存到本地文件,然后在部署阶段直接load TensorRT model序列化的文件进行推理,mnist_infer.cpp文件内容…

【mysql错误】用as别名 做where条件,报未知的列 1054 - Unknown column 'name111' in 'field list'...

需求:SELECT a AS b WHRER b1; //这样使用会报错,说b不存在。 因为mysql底层跑SQL语句时:where 后的筛选条件在先, as B的别名在后。所以机器看到where 后的别名是不认的,所以会报说B不存在。 这个b只是字段a查询结…

C++2年经验

网络 sql 基础算法 最多到图和树 常用的几种设计模式,5以内即可转载于:https://www.cnblogs.com/liujin2012/p/3766106.html

在Caffe中调用TensorRT提供的MNIST model

在TensorRT 2.1.2中提供了MNIST的model,这里拿来用Caffe的代码调用实现,原始的mnist_mean.binaryproto文件调整为了纯二进制文件mnist_tensorrt_mean.binary,测试结果与使用TensorRT调用(http://blog.csdn.net/fengbingchun/article/details/…

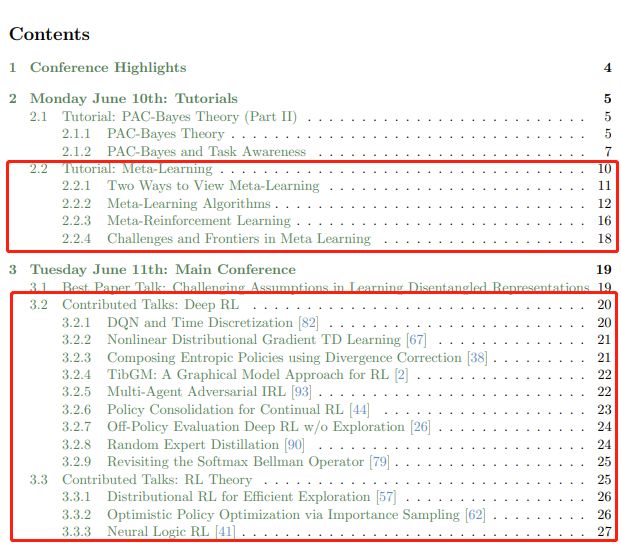

142页ICML会议强化学习笔记整理,值得细读

作者 | David Abel编辑 | DeepRL来源 | 深度强化学习实验室(ID: Deep-RL)ICML 是 International Conference on Machine Learning的缩写,即国际机器学习大会。ICML如今已发展为由国际机器学习学会(IMLS)主办的年度机器…

CF1148F - Foo Fighters

CF1148F - Foo Fighters 题意:你有n个物品,每个都有val和mask。 你要选择一个数s,如果一个物品的mask & s含有奇数个1,就把val变成-val。 求一个s使得val总和变号。 解:分步来做。发现那个奇数个1可以变成&#x…

html传參中?和amp;

<a href"MealServlet?typefindbyid&mid<%m1.getMealId()%> 在这句传參中?之后的代表要传递的參数当中有两个參数第一个为type第二个为mid假设是一个參数就不用加&假设是多个參数须要加上&来传递

实战:手把手教你实现用语音智能控制电脑 | 附完整代码

作者 | 叶圣出品 | AI科技大本营(ID:rgznai100)导语:本篇文章将基于百度API实现对电脑的语音智能控制,不需要任何硬件上的支持,仅仅依靠一台电脑即可以实现。作者经过测试,效果不错,同时可以依据…

C++/C++11中左值、左值引用、右值、右值引用的使用

C的表达式要不然是右值(rvalue),要不然就是左值(lvalue)。这两个名词是从C语言继承过来的,原本是为了帮助记忆:左值可以位于赋值语句的左侧,右值则不能。 在C语言中,二者的区别就没那么简单了。一个左值表达式的求值结…

Could not create the view: An unexpected exception was thrown. Myeclipse空间报错

转载于:https://blog.51cto.com/82654993/1424339

Banknote Dataset(钞票数据集)介绍

Banknote Dataset(钞票数据集):这是从纸币鉴别过程中的图像里提取的数据,用来预测钞票的真伪的数据集。该数据集中含有1372个样本,每个样本由5个数值型变量构成,4个输入变量和1个输出变量。小波变换工具用于从图像中提取特征。这是…

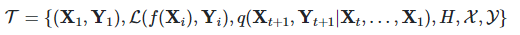

快速适应性很重要,但不是元学习的全部目标

作者 | Khurram Javed, Hengshuai Yao, Martha White译者 | Monanfei出品 | AI科技大本营(ID:rgznai100)实践证明,基于梯度的元学习在学习模型初始化、表示形式和更新规则方面非常有效,该模型允许从少量样本中进行快速适应。这些方…

面试题-自旋锁,以及jvm对synchronized的优化

背景 想要弄清楚这些问题,需要弄清楚其他的很多问题。 比如,对象,而对象本身又可以延伸出很多其他的问题。 我们平时不过只是在使用对象而已,怎么使用?就是new 对象。这只是语法层面的使用,相当于会了一门编…

DNS解析故障

在实际应用过程中可能会遇到DNS解析错误的问题,就是说当我们访问一个域名时无法完成将其解析到IP地址的工作,而直接输入网站IP却可以正常访问,这就是因为DNS解析出现故障造成的。这个现象发生的机率比较大,所以本文将从零起步教给…

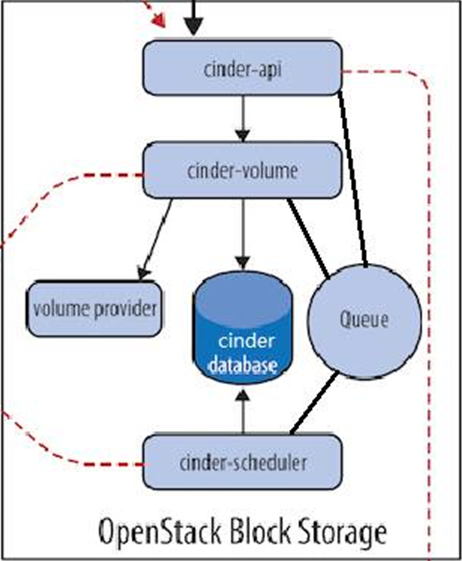

cinder存储服务

一、cinder 介绍: 理解 Block Storage 操作系统获得存储空间的方式一般有两种: 1、通过某种协议(SAS,SCSI,SAN,iSCSI 等)挂接裸硬盘,然后分区、格式化、创建文件系统;或者直接使用裸硬盘存储数据࿰…