TensorRT Samples: MNIST(Plugin, add a custom layer)

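The following test code is adapted from samplePlugin.cpp in TensorRT 2.1.2. It adds a fully connected layer through IPlugin and uses it to recognize the handwritten digits 0-9. The contents of plugin.cpp are as follows: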

#include <assert.h>
#include <stdio.h>
#include <string.h>
#include <iostream>
#include <memory>
#include <vector>
#include <string>
#include <tuple>
#include <cuda_runtime_api.h>
#include <cudnn.h>
#include <cublas_v2.h>
#include <NvInfer.h>
#include <NvCaffeParser.h>
#include <opencv2/opencv.hpp>
#include "common.hpp"

// reference: TensorRT-2.1.2/samples/samplePlugin/samplePlugin.cpp

// demonstrates how to add a custom layer to TensorRT. It replaces the final fully connected layer of the MNIST sample with a direct call to cuBLAS
namespace {

typedef std::tuple<int, int, int, std::string, std::string> DATA_INFO; // input width, input height, output size, input blob name, output blob name

int caffeToGIEModel(const std::string& deployFile, // name for caffe prototxt
    const std::string& modelFile, // name for model
    const std::vector<std::string>& outputs, // network outputs
    unsigned int maxBatchSize, // batch size - NB must be at least as large as the batch we want to run with
    nvcaffeparser1::IPluginFactory* pluginFactory, // factory for plugin layers
    nvinfer1::IHostMemory *&gieModelStream, Logger logger) // output stream for the GIE model
{
    // create the builder
    nvinfer1::IBuilder* builder = nvinfer1::createInferBuilder(logger);

    // parse the caffe model to populate the network, then set the outputs
    nvinfer1::INetworkDefinition* network = builder->createNetwork();
    nvcaffeparser1::ICaffeParser* parser = nvcaffeparser1::createCaffeParser();
    parser->setPluginFactory(pluginFactory);

    bool fp16 = builder->platformHasFastFp16();
    const nvcaffeparser1::IBlobNameToTensor* blobNameToTensor = parser->parse(deployFile.c_str(),
        modelFile.c_str(), *network, fp16 ? nvinfer1::DataType::kHALF : nvinfer1::DataType::kFLOAT);

    // specify which tensors are outputs
    for (auto& s : outputs)
        network->markOutput(*blobNameToTensor->find(s.c_str()));

    // Build the engine
    builder->setMaxBatchSize(maxBatchSize);
    builder->setMaxWorkspaceSize(1 << 20);
    builder->setHalf2Mode(fp16);

    nvinfer1::ICudaEngine* engine = builder->buildCudaEngine(*network);
    CHECK(engine != nullptr);

    // we don't need the network any more, and we can destroy the parser
    network->destroy();
    parser->destroy();

    // serialize the engine, then close everything down
    gieModelStream = engine->serialize();

    engine->destroy();
    builder->destroy();
    nvcaffeparser1::shutdownProtobufLibrary();

    return 0; // the function is declared to return int

}

int doInference(nvinfer1::IExecutionContext& context, float* input, float* output, int batchSize, const DATA_INFO& info)
{
    const nvinfer1::ICudaEngine& engine = context.getEngine();
    // input and output buffer pointers that we pass to the engine - the engine requires exactly IEngine::getNbBindings()
    // of these, but in this case we know that there is exactly one input and one output.
    CHECK(engine.getNbBindings() == 2);
    void* buffers[2];

    // In order to bind the buffers, we need to know the names of the input and output tensors.
    // note that indices are guaranteed to be less than IEngine::getNbBindings()
    int inputIndex = engine.getBindingIndex(std::get<3>(info).c_str()),
        outputIndex = engine.getBindingIndex(std::get<4>(info).c_str());

    // create GPU buffers and a stream
    cudaMalloc(&buffers[inputIndex], batchSize * std::get<1>(info) * std::get<0>(info) * sizeof(float));
    cudaMalloc(&buffers[outputIndex], batchSize * std::get<2>(info) * sizeof(float));

    cudaStream_t stream;
    cudaStreamCreate(&stream);

    // DMA the input to the GPU, execute the batch asynchronously, and DMA it back:
    cudaMemcpyAsync(buffers[inputIndex], input, batchSize * std::get<1>(info) * std::get<0>(info) * sizeof(float), cudaMemcpyHostToDevice, stream);
    context.enqueue(batchSize, buffers, stream, nullptr);
    cudaMemcpyAsync(output, buffers[outputIndex], batchSize * std::get<2>(info) * sizeof(float), cudaMemcpyDeviceToHost, stream);
    cudaStreamSynchronize(stream);

    // release the stream and the buffers
    cudaStreamDestroy(stream);
    cudaFree(buffers[inputIndex]);
    cudaFree(buffers[outputIndex]);

    return 0;

}

class FCPlugin: public nvinfer1::IPlugin
{
public:
    FCPlugin(const nvinfer1::Weights* weights, int nbWeights, int nbOutputChannels) : mNbOutputChannels(nbOutputChannels)
    {
        // since we want to deal with the case where there is no bias, we can't infer
        // the number of channels from the bias weights.
        assert(nbWeights == 2);
        mKernelWeights = copyToDevice(weights[0].values, weights[0].count);
        mBiasWeights = copyToDevice(weights[1].values, weights[1].count);
        assert(mBiasWeights.count == 0 || mBiasWeights.count == nbOutputChannels);

        mNbInputChannels = int(weights[0].count / nbOutputChannels);
    }

    // create the plugin at runtime from a byte stream
    FCPlugin(const void* data, size_t length)
    {
        const char* d = reinterpret_cast<const char*>(data), *a = d;
        mNbInputChannels = read<int>(d);
        mNbOutputChannels = read<int>(d);
        int biasCount = read<int>(d);

        mKernelWeights = deserializeToDevice(d, mNbInputChannels * mNbOutputChannels);
        mBiasWeights = deserializeToDevice(d, biasCount);
        assert(d == a + length);
    }

    ~FCPlugin()
    {
        cudaFree(const_cast<void*>(mKernelWeights.values));
        cudaFree(const_cast<void*>(mBiasWeights.values));
    }

    int getNbOutputs() const override
    {
        return 1;
    }

    nvinfer1::Dims getOutputDimensions(int index, const nvinfer1::Dims* inputs, int nbInputDims) override
    {
        assert(index == 0 && nbInputDims == 1 && inputs[0].nbDims == 3);
        assert(mNbInputChannels == inputs[0].d[0] * inputs[0].d[1] * inputs[0].d[2]);
        return nvinfer1::DimsCHW(mNbOutputChannels, 1, 1);
    }

    void configure(const nvinfer1::Dims* inputDims, int nbInputs, const nvinfer1::Dims* outputDims, int nbOutputs, int maxBatchSize) override
    {
    }

    int initialize() override
    {
        cudnnCreate(&mCudnn); // initialize cudnn and cublas
        cublasCreate(&mCublas);
        cudnnCreateTensorDescriptor(&mSrcDescriptor); // create cudnn tensor descriptors we need for bias addition
        cudnnCreateTensorDescriptor(&mDstDescriptor);

        return 0;
    }

    virtual void terminate() override
    {
        // release the descriptors created in initialize() before destroying the handles
        cudnnDestroyTensorDescriptor(mSrcDescriptor);
        cudnnDestroyTensorDescriptor(mDstDescriptor);
        cublasDestroy(mCublas);
        cudnnDestroy(mCudnn);
    }

    virtual size_t getWorkspaceSize(int maxBatchSize) const override
    {
        return 0;
    }

    virtual int enqueue(int batchSize, const void*const * inputs, void** outputs, void* workspace, cudaStream_t stream) override
    {
        float kONE = 1.0f, kZERO = 0.0f;
        cublasSetStream(mCublas, stream);
        cudnnSetStream(mCudnn, stream);
        // output = weights^T * input (cuBLAS is column-major)
        cublasSgemm(mCublas, CUBLAS_OP_T, CUBLAS_OP_N, mNbOutputChannels, batchSize, mNbInputChannels, &kONE,
            reinterpret_cast<const float*>(mKernelWeights.values), mNbInputChannels,
            reinterpret_cast<const float*>(inputs[0]), mNbInputChannels, &kZERO,
            reinterpret_cast<float*>(outputs[0]), mNbOutputChannels);
        if (mBiasWeights.count) {
            // broadcast-add the bias to every sample in the batch
            cudnnSetTensor4dDescriptor(mSrcDescriptor, CUDNN_TENSOR_NCHW, CUDNN_DATA_FLOAT, 1, mNbOutputChannels, 1, 1);
            cudnnSetTensor4dDescriptor(mDstDescriptor, CUDNN_TENSOR_NCHW, CUDNN_DATA_FLOAT, batchSize, mNbOutputChannels, 1, 1);
            cudnnAddTensor(mCudnn, &kONE, mSrcDescriptor, mBiasWeights.values, &kONE, mDstDescriptor, outputs[0]);
        }

        return 0;
    }

    virtual size_t getSerializationSize() override
    {
        // 3 integers (number of input channels, number of output channels, bias size), and then the weights:
        return sizeof(int)*3 + mKernelWeights.count*sizeof(float) + mBiasWeights.count*sizeof(float);
    }

    virtual void serialize(void* buffer) override
    {
        char* d = reinterpret_cast<char*>(buffer), *a = d;

        write(d, mNbInputChannels);
        write(d, mNbOutputChannels);
        write(d, (int)mBiasWeights.count);
        serializeFromDevice(d, mKernelWeights);
        serializeFromDevice(d, mBiasWeights);

        assert(d == a + getSerializationSize());
    }

private:
    template<typename T> void write(char*& buffer, const T& val)
    {
        *reinterpret_cast<T*>(buffer) = val;
        buffer += sizeof(T);
    }

    template<typename T> T read(const char*& buffer)
    {
        T val = *reinterpret_cast<const T*>(buffer);
        buffer += sizeof(T);
        return val;
    }

    nvinfer1::Weights copyToDevice(const void* hostData, size_t count)
    {
        void* deviceData;
        cudaMalloc(&deviceData, count * sizeof(float));
        cudaMemcpy(deviceData, hostData, count * sizeof(float), cudaMemcpyHostToDevice);
        return nvinfer1::Weights{ nvinfer1::DataType::kFLOAT, deviceData, int64_t(count) };
    }

    void serializeFromDevice(char*& hostBuffer, nvinfer1::Weights deviceWeights)
    {
        cudaMemcpy(hostBuffer, deviceWeights.values, deviceWeights.count * sizeof(float), cudaMemcpyDeviceToHost);
        hostBuffer += deviceWeights.count * sizeof(float);
    }

    nvinfer1::Weights deserializeToDevice(const char*& hostBuffer, size_t count)
    {
        nvinfer1::Weights w = copyToDevice(hostBuffer, count);
        hostBuffer += count * sizeof(float);
        return w;
    }

    int mNbOutputChannels, mNbInputChannels;
    cudnnHandle_t mCudnn;
    cublasHandle_t mCublas;
    nvinfer1::Weights mKernelWeights, mBiasWeights;
    cudnnTensorDescriptor_t mSrcDescriptor, mDstDescriptor;
};

// integration for serialization

class PluginFactory : public nvinfer1::IPluginFactory, public nvcaffeparser1::IPluginFactory
{
public:
    // caffe parser plugin implementation
    bool isPlugin(const char* name) override
    {
        return !strcmp(name, "ip2");
    }

    virtual nvinfer1::IPlugin* createPlugin(const char* layerName, const nvinfer1::Weights* weights, int nbWeights) override
    {
        // there's no way to pass parameters through from the model definition, so we have to define it here explicitly
        static const int NB_OUTPUT_CHANNELS = 10;
        assert(isPlugin(layerName) && nbWeights == 2 && weights[0].type == nvinfer1::DataType::kFLOAT && weights[1].type == nvinfer1::DataType::kFLOAT);
        assert(mPlugin.get() == nullptr);
        mPlugin = std::unique_ptr<FCPlugin>(new FCPlugin(weights, nbWeights, NB_OUTPUT_CHANNELS));
        return mPlugin.get();
    }

    // deserialization plugin implementation
    nvinfer1::IPlugin* createPlugin(const char* layerName, const void* serialData, size_t serialLength) override
    {
        assert(isPlugin(layerName));
        assert(mPlugin.get() == nullptr);
        mPlugin = std::unique_ptr<FCPlugin>(new FCPlugin(serialData, serialLength));
        return mPlugin.get();
    }

    // the application has to destroy the plugin when it knows it's safe to do so
    void destroyPlugin()
    {
        // reset() deletes the plugin; release() would only give up ownership and leak it
        mPlugin.reset();
    }

    std::unique_ptr<FCPlugin> mPlugin{ nullptr };
};

} // namespace

int test_plugin()

{// stuff we know about the network and the caffe input/output blobsconst DATA_INFO info(28, 28, 10, "data", "prob");const std::string deploy_file {"models/mnist.prototxt"};const std::string model_file {"models/mnist.caffemodel"};const std::string mean_file {"models/mnist_mean.binaryproto"};Logger logger; // multiple instances of IRuntime and/or IBuilder must all use the same logger// create a GIE model from the caffe model and serialize it to a streamPluginFactory pluginFactory;nvinfer1::IHostMemory* gieModelStream{ nullptr };caffeToGIEModel(deploy_file, model_file, std::vector<std::string>{std::get<4>(info).c_str()}, 1, &pluginFactory, gieModelStream, logger);pluginFactory.destroyPlugin();nvcaffeparser1::ICaffeParser* parser = nvcaffeparser1::createCaffeParser();nvcaffeparser1::IBinaryProtoBlob* meanBlob = parser->parseBinaryProto(mean_file.c_str());parser->destroy();// deserialize the engine nvinfer1::IRuntime* runtime = nvinfer1::createInferRuntime(logger);nvinfer1::ICudaEngine* engine = runtime->deserializeCudaEngine(gieModelStream->data(), gieModelStream->size(), &pluginFactory);nvinfer1::IExecutionContext *context = engine->createExecutionContext();// parse the mean file and subtract it from the imageconst float* meanData = reinterpret_cast<const float*>(meanBlob->getData());const std::string image_path{ "images/digit/" };for (int i = 0; i < 10; ++i) {const std::string image_name = image_path + std::to_string(i) + ".png";cv::Mat mat = cv::imread(image_name, 0);if (!mat.data) {fprintf(stderr, "read image fail: %s\n", image_name.c_str());return -1;}cv::resize(mat, mat, cv::Size(std::get<0>(info), std::get<1>(info)));mat.convertTo(mat, CV_32FC1);float data[std::get<1>(info)*std::get<0>(info)];const float* p = (float*)mat.data;for (int j = 0; j < std::get<1>(info)*std::get<0>(info); ++j) {data[j] = p[j] - meanData[j];}// run inferencefloat prob[std::get<2>(info)];doInference(*context, data, prob, 1, info);float val{-1.f};int idx{-1};for (int t = 0; t < 
std::get<2>(info); ++t) {if (val < prob[t]) {val = prob[t];idx = t;}}fprintf(stdout, "expected value: %d, actual value: %d, probability: %f\n", i, idx, val);}meanBlob->destroy();if (gieModelStream) gieModelStream->destroy();// destroy the enginecontext->destroy();engine->destroy();runtime->destroy();pluginFactory.destroyPlugin();return 0;

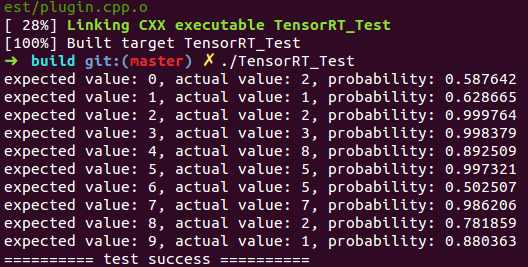

}执行结果如下(与http://blog.csdn.net/fengbingchun/article/details/78552908 中结果一致):
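The cublasSgemm call in FCPlugin::enqueue is compact but hard to read because cuBLAS is column-major. As a rough aid, here is a CPU-only sketch of what that call plus the bias addition appears to compute. The function name fullyConnectedReference is hypothetical and not part of the sample, and the sketch assumes the kernel weights are stored row-major as [nbOutputs x nbInputs], which is what the CUBLAS_OP_T argument and the leading dimension mNbInputChannels imply.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical CPU reference for the fully connected layer (not part of the sample).
// Assumed layouts, mirroring the arguments passed to cublasSgemm in FCPlugin::enqueue:
//   weights: nbOutputs x nbInputs, row-major
//   bias:    empty, or nbOutputs values
//   input:   batchSize x nbInputs, one sample after another
//   output:  batchSize x nbOutputs, one sample after another
std::vector<float> fullyConnectedReference(const std::vector<float>& weights,
                                           const std::vector<float>& bias,
                                           const std::vector<float>& input,
                                           int batchSize, int nbInputs, int nbOutputs)
{
    std::vector<float> output(static_cast<std::size_t>(batchSize) * nbOutputs, 0.0f);
    for (int b = 0; b < batchSize; ++b) {
        for (int o = 0; o < nbOutputs; ++o) {
            // start from the bias (if any), as the cudnnAddTensor step adds it afterwards
            float acc = bias.empty() ? 0.0f : bias[o];
            for (int i = 0; i < nbInputs; ++i)
                acc += weights[o * nbInputs + i] * input[b * nbInputs + i];
            output[b * nbOutputs + o] = acc;
        }
    }
    return output;
}
```

For example, calling it with weights {1, 2, 3, 4, 5, 6}, bias {0.5, -0.5}, input {1, 1, 1}, batchSize 1, nbInputs 3 and nbOutputs 2 gives {6.5, 14.5}. A reference like this can be handy for checking the plugin output on a small batch copied back from the GPU.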

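Steps to build the test code in TensorRT_Test with CMake on Linux:

1. In a terminal, change to CUDA_Test/prj/linux_tensorrt_cmake and run the following commands in order:
$ mkdir build
$ cd build
$ cmake ..
$ make (builds the TensorRT_Test executable)
$ ln -s ../../../test_data/models ./ (symlinks the models directory into the build directory)
$ ln -s ../../../test_data/images ./ (symlinks the images directory into the build directory)
$ ./TensorRT_Test

2. For operations that read images with OpenCV, first change the image paths in the corresponding source files to a path format that Linux supports.

GitHub: https://github.com/fengbingchun/CUDA_Test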
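For the image path adjustment mentioned in the build notes, one option is to normalize separators in code rather than editing each path by hand. The helper below is a minimal sketch; the name toPosixPath is hypothetical and not part of CUDA_Test. It only converts backslashes to forward slashes and does not handle Windows drive letters, so absolute Windows paths would still need to be changed manually.

```cpp
#include <algorithm>
#include <string>

// Hypothetical helper (not part of CUDA_Test): replace Windows-style '\\'
// separators with '/' so a relative path can be opened on Linux.
std::string toPosixPath(std::string path)
{
    std::replace(path.begin(), path.end(), '\\', '/');
    return path;
}
```

A call such as cv::imread(toPosixPath(image_name), 0) would then accept relative paths written in either style.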

相关文章:

AutoML很火,过度吹捧的结果?

作者 | Denis Vorotyntsev译者 | Shawnice编辑 | Jane出品 | AI科技大本营(ID:rgznai100)【导语】现在,很多企业都很关注AutoML领域,很多开发者也开始接触和从事AutoML相关的研究与应用工作,作者也是&#…

tomcat6 配置web管理端访问权限

配置tomcat 管理端登陆 /apache-tomcat-6.0.35/conf/tomcat-users.xml 配置文件,使用时需要把注释去掉<!-- <!-- <role rolename"tomcat"/> <role rolename"role1"/> <user username"tomcat" password"…

@程序员:Python 3.8正式发布,重要新功能都在这里

整理 | Jane、夕颜出品 | AI科技大本营(ID:rgznai100)【导读】最新版本的Python发布了!今年夏天,Python 3.8发布beta版本,但在2019年10月14日,第一个正式版本已准备就绪。现在,我们都…

TensorRT Samples: MNIST(serialize TensorRT model)

关于TensorRT的介绍可以参考: http://blog.csdn.net/fengbingchun/article/details/78469551 这里实现在构建阶段将TensorRT model序列化存到本地文件,然后在部署阶段直接load TensorRT model序列化的文件进行推理,mnist_infer.cpp文件内容…

【mysql错误】用as别名 做where条件,报未知的列 1054 - Unknown column 'name111' in 'field list'...

需求:SELECT a AS b WHRER b1; //这样使用会报错,说b不存在。 因为mysql底层跑SQL语句时:where 后的筛选条件在先, as B的别名在后。所以机器看到where 后的别名是不认的,所以会报说B不存在。 这个b只是字段a查询结…

C++2年经验

网络 sql 基础算法 最多到图和树 常用的几种设计模式,5以内即可转载于:https://www.cnblogs.com/liujin2012/p/3766106.html

在Caffe中调用TensorRT提供的MNIST model

在TensorRT 2.1.2中提供了MNIST的model,这里拿来用Caffe的代码调用实现,原始的mnist_mean.binaryproto文件调整为了纯二进制文件mnist_tensorrt_mean.binary,测试结果与使用TensorRT调用(http://blog.csdn.net/fengbingchun/article/details/…

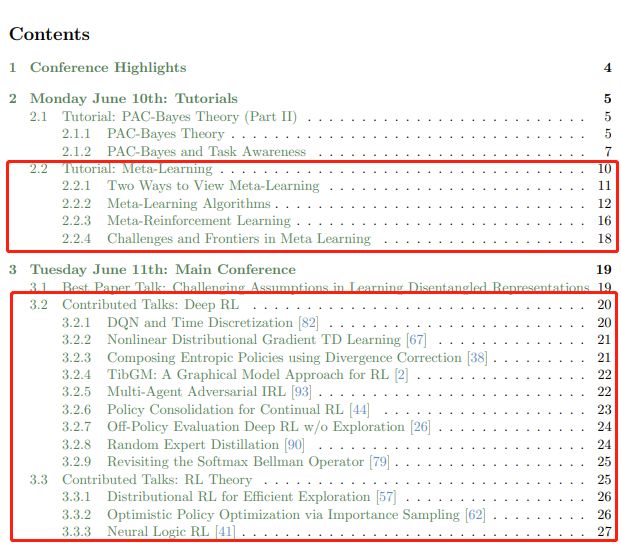

142页ICML会议强化学习笔记整理,值得细读

作者 | David Abel编辑 | DeepRL来源 | 深度强化学习实验室(ID: Deep-RL)ICML 是 International Conference on Machine Learning的缩写,即国际机器学习大会。ICML如今已发展为由国际机器学习学会(IMLS)主办的年度机器…

CF1148F - Foo Fighters

CF1148F - Foo Fighters 题意:你有n个物品,每个都有val和mask。 你要选择一个数s,如果一个物品的mask & s含有奇数个1,就把val变成-val。 求一个s使得val总和变号。 解:分步来做。发现那个奇数个1可以变成&#x…

html传參中?和amp;

<a href"MealServlet?typefindbyid&mid<%m1.getMealId()%> 在这句传參中?之后的代表要传递的參数当中有两个參数第一个为type第二个为mid假设是一个參数就不用加&假设是多个參数须要加上&来传递

实战:手把手教你实现用语音智能控制电脑 | 附完整代码

作者 | 叶圣出品 | AI科技大本营(ID:rgznai100)导语:本篇文章将基于百度API实现对电脑的语音智能控制,不需要任何硬件上的支持,仅仅依靠一台电脑即可以实现。作者经过测试,效果不错,同时可以依据…

C++/C++11中左值、左值引用、右值、右值引用的使用

C的表达式要不然是右值(rvalue),要不然就是左值(lvalue)。这两个名词是从C语言继承过来的,原本是为了帮助记忆:左值可以位于赋值语句的左侧,右值则不能。 在C语言中,二者的区别就没那么简单了。一个左值表达式的求值结…

Could not create the view: An unexpected exception was thrown. Myeclipse空间报错

转载于:https://blog.51cto.com/82654993/1424339

Banknote Dataset(钞票数据集)介绍

Banknote Dataset(钞票数据集):这是从纸币鉴别过程中的图像里提取的数据,用来预测钞票的真伪的数据集。该数据集中含有1372个样本,每个样本由5个数值型变量构成,4个输入变量和1个输出变量。小波变换工具用于从图像中提取特征。这是…

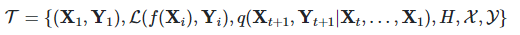

快速适应性很重要,但不是元学习的全部目标

作者 | Khurram Javed, Hengshuai Yao, Martha White译者 | Monanfei出品 | AI科技大本营(ID:rgznai100)实践证明,基于梯度的元学习在学习模型初始化、表示形式和更新规则方面非常有效,该模型允许从少量样本中进行快速适应。这些方…

面试题-自旋锁,以及jvm对synchronized的优化

背景 想要弄清楚这些问题,需要弄清楚其他的很多问题。 比如,对象,而对象本身又可以延伸出很多其他的问题。 我们平时不过只是在使用对象而已,怎么使用?就是new 对象。这只是语法层面的使用,相当于会了一门编…

DNS解析故障

在实际应用过程中可能会遇到DNS解析错误的问题,就是说当我们访问一个域名时无法完成将其解析到IP地址的工作,而直接输入网站IP却可以正常访问,这就是因为DNS解析出现故障造成的。这个现象发生的机率比较大,所以本文将从零起步教给…

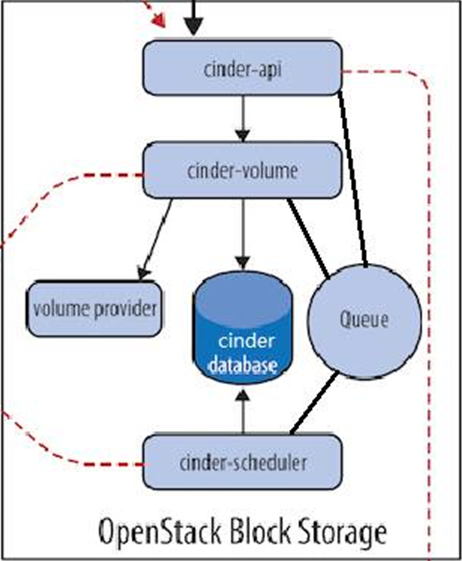

cinder存储服务

一、cinder 介绍: 理解 Block Storage 操作系统获得存储空间的方式一般有两种: 1、通过某种协议(SAS,SCSI,SAN,iSCSI 等)挂接裸硬盘,然后分区、格式化、创建文件系统;或者直接使用裸硬盘存储数据࿰…

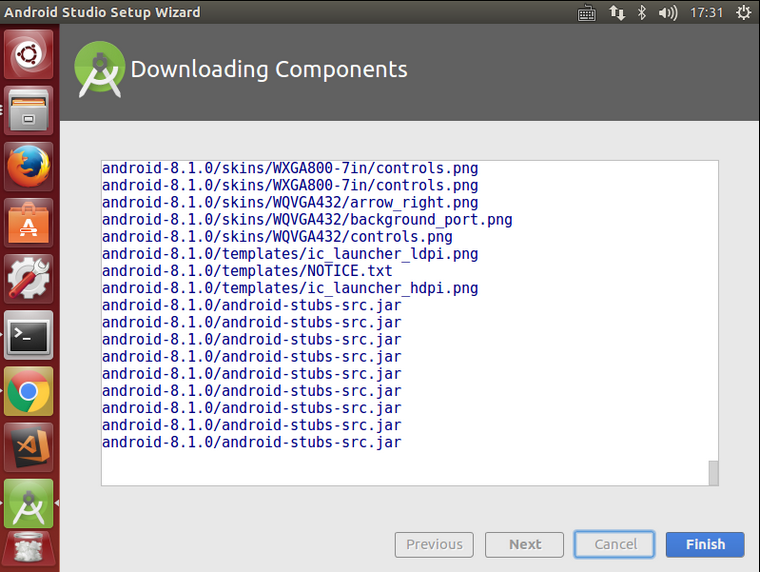

Ubuntu 14.04 64位机上配置Android Studio操作步骤

Android Studio是一个为Android平台开发程序的集成开发环境。2013年5月16日在Google I/O上发布,可供开发者免费使用。Android Studio基于JetBrains IntelliJ IDEA,为Android开发特殊定制,并在Windows、OS X和Linux平台上均可运行。1. 从 htt…

大规模1.4亿中文知识图谱数据,我把它开源了

作者 | Just出品 | AI科技大本营(ID:rgznai100)人工智能从感知阶段逐步进入认知智能的过程中,知识图谱技术将为机器提供认知思维能力和关联分析能力,可以应用于机器人问答系统、内容推荐等系统中。不过要降低知识图谱技术应用的门…

使用CSS 3创建不规则图形

2019独角兽企业重金招聘Python工程师标准>>> 前言 CSS 创建复杂图形的技术即将会被广泛支持,并且应用到实际项目中。本篇文章的目的是为大家开启它的冰山一角。我希望这篇文章能让你对不规则图形有一个初步的了解。 现在,我们已经可以使用CSS…

谷歌丰田联合成果ALBERT了解一下:新轻量版BERT,参数小18倍,性能依旧SOTA

作者 | Less Wright编译 | ronghuaiyang来源 | AI公园(ID:AI_Paradise)【导读】这是来自Google和Toyota的新NLP模型,超越Bert,参数小了18倍。你以前的NLP模型参数效率低下,而且有些过时。祝你有美好的一天。谷歌Resear…

C++中extern C的使用

C程序有时需要调用其它语言编写的函数,最常见的是调用C语言编写的函数。像所有其它名字一样,其它语言中的函数名字也必须在C中进行声明,并且该声明必须指定返回类型和形参列表。对于其它语言编写的函数来说,编译器检查…

Linux之tmpwatch命令

1、tmpwatch命令功能简介[rootvms002 /]# whatis tmpwatch tmpwatch (8) - removes files which havent been accessed for a period of... #删除一段时间内未被访问的文件tmpwatch删除最近一段时间内没有被访问的文件,时间以小时为单位,节省磁盘空间。…

你不得不知道的Visual Studio 2012(1)- 每日必用功能

2019独角兽企业重金招聘Python工程师标准>>> Visual Studio 2012已经正式发布,有很多花哨的新特性,也有很多方便使用者的新功能,当然也有负面声音。对于我们程序员,最关心的还是如何快速掌握VS2012,用于平时…

C++11中std::unique_lock的使用

std::unique_lock为锁管理模板类,是对通用mutex的封装。std::unique_lock对象以独占所有权的方式(unique owership)管理mutex对象的上锁和解锁操作,即在unique_lock对象的声明周期内,它所管理的锁对象会一直保持上锁状态;而unique…

为何Google将几十亿行源代码放在一个仓库?| CSDN博文精选

作者 | Rachel Potvin,Josh Levenberg译者 | 张建军编辑 | apddd【AI科技大本营导读】与大多数开发者的想象不同,Google只有一个代码仓库——全公司使用不同语言编写的超过10亿文件,近百TB源代码都存放在自行开发的版本管理系统Piper中&#…

小小hanoi

为什么80%的码农都做不了架构师?>>> View Code #include " iostream " using namespace std; int k 0 ; void hanoi( int m , char a , char b, char c){ if (m 1 ) { k ; printf( " %c->%c " ,a , c); return…

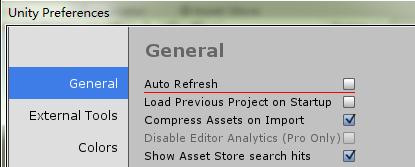

Unity3D心得分享

本篇文章的内容以各种tips为主,不间断更新 2019/05/10 最近更新: 使用Instantiate初始化参数去实例对象 Unity DEMO学习 Unity3D Adam Demo的学习与研究 Unity3D The Blacksmith Demo部分内容学习 Viking Village维京村落demo中的地面积水效果 Viking V…

django搭建示例-ubantu环境

python3安装--------------------------------------------------------------------------- 最新的django依赖python3,同时ubantu系统默认自带python2与python3,这里单独安装一套python3,并且不影响原来的python环境 django demo使用sqlite3,…