OpenCV4Android Development Notes (2): Face Detection with the OpenCV 3.4.1 Library


Please credit the source when reposting: http://write.blog.csdn.net/postedit/78992490

OpenCV4Android series:

1. OpenCV4Android Development Notes (1): Porting the OpenCV 3.3.0 Library to Android Studio

2. OpenCV4Android Development Notes (2): Face Detection with the OpenCV 3.3.0 Library

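The previous article, OpenCV4Android Development Notes (1): Porting the OpenCV 3.3.0 Library to Android Studio, gave an overview of the OpenCV library and walked through the steps for porting it into an Android Studio project. Building on that, this article introduces the face detection module of the OpenCV framework and then implements face detection.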

I. Porting the Face Detection Module

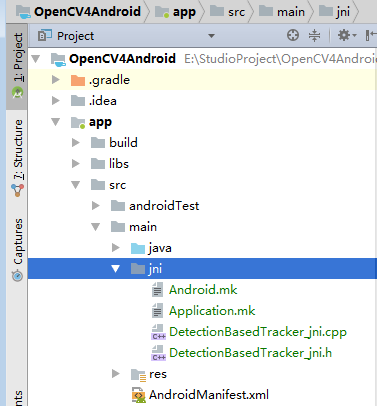

1. Copy the opencv-3.3.0-android-sdk\OpenCV-android-sdk\samples\face-detection\jni directory into the main directory of the project's app module.

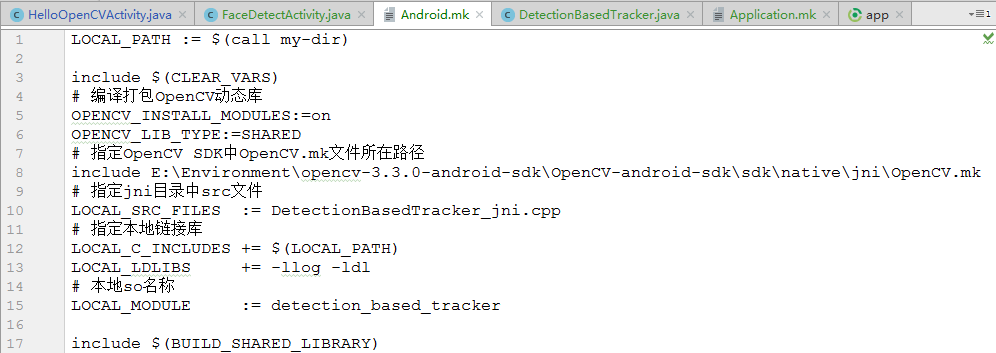

2. Modify Android.mk in the jni directory.

(1) Change

```makefile
#OPENCV_INSTALL_MODULES:=off
#OPENCV_LIB_TYPE:=SHARED
```

to

```makefile
OPENCV_INSTALL_MODULES:=on
OPENCV_LIB_TYPE:=SHARED
```

(2) Replace

```makefile
ifdef OPENCV_ANDROID_SDK
  ifneq ("","$(wildcard $(OPENCV_ANDROID_SDK)/OpenCV.mk)")
    include ${OPENCV_ANDROID_SDK}/OpenCV.mk
  else
    include ${OPENCV_ANDROID_SDK}/sdk/native/jni/OpenCV.mk
  endif
else
  include ../../sdk/native/jni/OpenCV.mk
endif
```

with

```makefile
include E:\Environment\opencv-3.3.0-android-sdk\OpenCV-android-sdk\sdk\native\jni\OpenCV.mk
```

Here, the include line is the absolute path of the OpenCV.mk file inside the OpenCV SDK; adjust it to wherever the SDK is located on your machine.

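3. Modify Application.mk in the jni directory. Since only the armeabi, armeabi-v7a, and arm64-v8a libraries were copied when importing the OpenCV libs, the build targets are restricted to these same three ABIs. Also set APP_PLATFORM to android-16 (adjust to your own needs), as follows: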

```makefile
APP_STL := gnustl_static
APP_CPPFLAGS := -frtti -fexceptions
# Target ABIs
APP_ABI := armeabi armeabi-v7a arm64-v8a
# Target Android platform
APP_PLATFORM := android-16
```

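4. Modify DetectionBasedTracker_jni.h and DetectionBasedTracker_jni.cpp, replacing every occurrence of the prefix "Java_org_opencv_samples_facedetect_" with "Java_com_jiangdg_opencv4android_natives_". Here com.jiangdg.opencv4android.natives is the package of the Java class DetectionBasedTracker.java, which declares the native methods for face detection. Otherwise, when the app calls into the .so you built yourself, the runtime reports that the native function cannot be found (an UnsatisfiedLinkError). Taking DetectionBasedTracker_jni.h as an example: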

```cpp
/* DO NOT EDIT THIS FILE - it is machine generated */
#include <jni.h>
/* Header for class org_opencv_samples_fd_DetectionBasedTracker */

#ifndef _Included_org_opencv_samples_fd_DetectionBasedTracker
#define _Included_org_opencv_samples_fd_DetectionBasedTracker
#ifdef __cplusplus
extern "C" {
#endif
/*
 * Class:     org_opencv_samples_fd_DetectionBasedTracker
 * Method:    nativeCreateObject
 * Signature: (Ljava/lang/String;I)J
 */
JNIEXPORT jlong JNICALL Java_com_jiangdg_opencv4android_natives_DetectionBasedTracker_nativeCreateObject
  (JNIEnv *, jclass, jstring, jint);

/*
 * Class:     org_opencv_samples_fd_DetectionBasedTracker
 * Method:    nativeDestroyObject
 * Signature: (J)V
 */
JNIEXPORT void JNICALL Java_com_jiangdg_opencv4android_natives_DetectionBasedTracker_nativeDestroyObject
  (JNIEnv *, jclass, jlong);

/*
 * Class:     org_opencv_samples_fd_DetectionBasedTracker
 * Method:    nativeStart
 * Signature: (J)V
 */
JNIEXPORT void JNICALL Java_com_jiangdg_opencv4android_natives_DetectionBasedTracker_nativeStart
  (JNIEnv *, jclass, jlong);

/*
 * Class:     org_opencv_samples_fd_DetectionBasedTracker
 * Method:    nativeStop
 * Signature: (J)V
 */
JNIEXPORT void JNICALL Java_com_jiangdg_opencv4android_natives_DetectionBasedTracker_nativeStop
  (JNIEnv *, jclass, jlong);

/*
 * Class:     org_opencv_samples_fd_DetectionBasedTracker
 * Method:    nativeSetFaceSize
 * Signature: (JI)V
 */
JNIEXPORT void JNICALL Java_com_jiangdg_opencv4android_natives_DetectionBasedTracker_nativeSetFaceSize
  (JNIEnv *, jclass, jlong, jint);

/*
 * Class:     org_opencv_samples_fd_DetectionBasedTracker
 * Method:    nativeDetect
 * Signature: (JJJ)V
 */
JNIEXPORT void JNICALL Java_com_jiangdg_opencv4android_natives_DetectionBasedTracker_nativeDetect
  (JNIEnv *, jclass, jlong, jlong, jlong);

#ifdef __cplusplus
}
#endif
#endif
```
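To see why the prefix has to track the Java package, here is a minimal sketch (the class JniNameSketch is hypothetical, not part of OpenCV or the JNI API) that derives the short symbol name the runtime looks up for a native method, following the name-mangling rules of the JNI specification, and builds the type descriptor that appears in the "Signature" comments. It only covers the short (non-overloaded) symbol form.

```java
// Hypothetical helper (not part of OpenCV or the JNI API): derives the JNI
// short symbol name and the type descriptor of a native method.
final class JniNameSketch {
    private JniNameSketch() {}

    // Escape one name component according to the JNI mangling rules.
    static String mangle(String s) {
        StringBuilder sb = new StringBuilder();
        for (char ch : s.toCharArray()) {
            if (ch == '.' || ch == '/') {
                sb.append('_');                 // package separator
            } else if (ch == '_') {
                sb.append("_1");                // literal underscore
            } else if (ch == ';') {
                sb.append("_2");
            } else if (ch == '[') {
                sb.append("_3");
            } else if ((ch >= 'a' && ch <= 'z') || (ch >= 'A' && ch <= 'Z')
                    || (ch >= '0' && ch <= '9')) {
                sb.append(ch);                  // ASCII letters and digits are kept
            } else {
                sb.append(String.format("_0%04x", (int) ch)); // any other UTF-16 char
            }
        }
        return sb.toString();
    }

    // "Java_" + mangled fully qualified class name + "_" + mangled method name.
    static String shortName(String fullyQualifiedClass, String method) {
        return "Java_" + mangle(fullyQualifiedClass) + "_" + mangle(method);
    }

    // Descriptor of one type, e.g. int -> "I", String -> "Ljava/lang/String;".
    static String typeDescriptor(Class<?> c) {
        if (c == void.class) return "V";
        if (c == boolean.class) return "Z";
        if (c == byte.class) return "B";
        if (c == char.class) return "C";
        if (c == short.class) return "S";
        if (c == int.class) return "I";
        if (c == long.class) return "J";
        if (c == float.class) return "F";
        if (c == double.class) return "D";
        if (c.isArray()) return c.getName().replace('.', '/'); // e.g. "[I"
        return "L" + c.getName().replace('.', '/') + ";";
    }

    // Method descriptor: "(" + parameter descriptors + ")" + return descriptor.
    static String methodDescriptor(Class<?> returnType, Class<?>... params) {
        StringBuilder sb = new StringBuilder("(");
        for (Class<?> p : params) {
            sb.append(typeDescriptor(p));
        }
        return sb.append(')').append(typeDescriptor(returnType)).toString();
    }
}
```

For example, `shortName("com.jiangdg.opencv4android.natives.DetectionBasedTracker", "nativeCreateObject")` yields exactly the function name used in the header, and `methodDescriptor(long.class, String.class, int.class)` yields `(Ljava/lang/String;I)J`, matching `nativeCreateObject(String, int)`. Note that an underscore inside a package name is escaped as `_1`, so renaming the package to something like `com.my_app` would change the prefix to `com_my_1app`.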

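5. Open the Terminal window in Android Studio, cd into the project's jni directory, and run the ndk-build command. This automatically generates libs and obj directories under the project's app/src/main directory; the libs directory contains the target shared library libdetection_based_tracker.so.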


Note: if running ndk-build reports that the command does not exist, the NDK has not been added to your PATH environment variable.

6. Modify the sourceSets block in the app module's build.gradle to stop Gradle from invoking ndk-build automatically and to set the directory containing the target .so files:

```groovy
android {
    compileSdkVersion 25
    defaultConfig {
        applicationId "com.jiangdg.opencv4android"
        minSdkVersion 15
        targetSdkVersion 25
        versionCode 1
        versionName "1.0"
    }
    // ... (code omitted)
    sourceSets {
        main {
            jni.srcDirs = []               // disable the automatic ndk-build call
            jniLibs.srcDir 'src/main/libs' // directory holding the generated .so files
        }
    }
    // ... (code omitted)
}
```

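Here, jni.srcDirs = [] disables Gradle's default ndk-build step, which prevents Android Studio from generating its own Android.mk to build the jni sources; jniLibs.srcDir 'src/main/libs' sets the directory of the target .so files so that the libraries we built ourselves are packaged into the APK.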

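II. Source Code Walkthrough

Implementing face detection with the OpenCV 3.3.0 library involves four main steps:

(1) Initialize and load the OpenCV library;

(2) Start camera rendering through the OpenCV library;

(3) Load the face detection model;

(4) Call the native face detection library to detect faces.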

1. Initializing and loading the OpenCV library

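There are two ways to load the OpenCV library. The first is dynamic loading through OpenCV Manager, the officially recommended approach; it requires the separate OpenCV Manager app to be installed and is initialized by calling OpenCVLoader.initAsync(). The second is static loading, which is what this article uses: the .so files for the relevant ABIs are copied into the project's libs directory, and initialization is done by calling OpenCVLoader.initDebug(), which is similar to calling System.loadLibrary("opencv_java3").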

```java
if (!OpenCVLoader.initDebug()) {
    // Static loading of OpenCV failed; fall back to OpenCV Manager
    // Arguments: OpenCV version; context; callback receiving the load result
    OpenCVLoader.initAsync(OpenCVLoader.OPENCV_VERSION_3_3_0,
            this, mLoaderCallback);
} else {
    // Static loading succeeded; call onManagerConnected directly
    mLoaderCallback.onManagerConnected(LoaderCallbackInterface.SUCCESS);
}
```

```java
private BaseLoaderCallback mLoaderCallback = new BaseLoaderCallback(this) {
    @Override
    public void onManagerConnected(int status) {
        switch (status) {
            case LoaderCallbackInterface.SUCCESS:
                // OpenCV loaded successfully; now load our own native library
                System.loadLibrary("detection_based_tracker");
                // Load the face detection model
                // ...
                // Initialize the face detection engine
                // ...
                // Start camera rendering
                mCameraView.enableView();
                break;
            default:
                super.onManagerConnected(status);
                break;
        }
    }
};
```

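2. Starting camera rendering with OpenCV

In OpenCV, two classes are closely tied to the camera: CameraBridgeViewBase and JavaCameraView. CameraBridgeViewBase is a base class that extends SurfaceView and implements the SurfaceHolder.Callback interface; it handles the interaction between the camera and the OpenCV library. Its main jobs are controlling the camera, processing video frames, calling internal interfaces to adjust each frame, and then rendering the adjusted frame to the screen. JavaCameraView is the subclass that actually drives the camera; the connectCamera(), disconnectCamera(), and CameraWorker code below comes from it. For example, enableView() and disableView() connect to and disconnect from the camera: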

```java
// ---- CameraBridgeViewBase ----
public void enableView() {
    synchronized(mSyncObject) {
        mEnabled = true;
        checkCurrentState();
    }
}

public void disableView() {
    synchronized(mSyncObject) {
        mEnabled = false;
        checkCurrentState();
    }
}

private void checkCurrentState() {
    Log.d(TAG, "call checkCurrentState");
    int targetState;

    if (mEnabled && mSurfaceExist && getVisibility() == VISIBLE) {
        targetState = STARTED;
    } else {
        targetState = STOPPED;
    }

    if (targetState != mState) {
        /* The state change detected. Need to exit the current state and enter target state */
        processExitState(mState);
        mState = targetState;
        processEnterState(mState);
    }
}

private void processEnterState(int state) {
    Log.d(TAG, "call processEnterState: " + state);
    switch(state) {
    case STARTED:
        // Calls the abstract connectCamera() method to start the camera
        onEnterStartedState();
        // Notify the listener that the camera view has started
        if (mListener != null) {
            mListener.onCameraViewStarted(mFrameWidth, mFrameHeight);
        }
        break;
    case STOPPED:
        // The camera was already released by processExitState(STARTED),
        // which calls the abstract disconnectCamera() method
        onEnterStoppedState();
        // Notify the listener that the camera view has stopped
        if (mListener != null) {
            mListener.onCameraViewStopped();
        }
        break;
    };
}

// ---- JavaCameraView ----
@Override
protected boolean connectCamera(int width, int height) {
    // Initialize the camera and connect to it
    if (!initializeCamera(width, height))
        return false;

    mCameraFrameReady = false;

    // Start the camera worker thread (CameraWorker)
    Log.d(TAG, "Starting processing thread");
    mStopThread = false;
    mThread = new Thread(new CameraWorker());
    mThread.start();

    return true;
}

@Override
protected void disconnectCamera() {
    // Disconnect from the camera and release related resources
    try {
        mStopThread = true;
        Log.d(TAG, "Notify thread");
        synchronized (this) {
            this.notify();
        }
        // Wait for the worker thread to stop
        if (mThread != null)
            mThread.join();
    } catch (InterruptedException e) {
        e.printStackTrace();
    } finally {
        mThread = null;
    }

    /* Now release camera */
    releaseCamera();

    mCameraFrameReady = false;
}

private class CameraWorker implements Runnable {
    @Override
    public void run() {
        do {
            // ... (code omitted)
            if (!mStopThread && hasFrame) {
                if (!mFrameChain[1 - mChainIdx].empty())
                    deliverAndDrawFrame(mCameraFrame[1 - mChainIdx]);
            }
        } while (!mStopThread);
    }
}
```
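The key point of checkCurrentState() is that enableView() alone does not open the camera: the camera runs only while the view is enabled, its surface exists, and it is visible, and any change in those flags causes at most one exit/enter transition. The following Android-free sketch models just that decision logic. The class CameraStateSketch and its setSurfaceExists()/setVisible() methods are hypothetical stand-ins (for the SurfaceHolder callbacks and visibility changes), and it records "connectCamera"/"disconnectCamera" events instead of touching a real camera.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, Android-free model of the enable/disable logic in
// CameraBridgeViewBase; it logs camera calls instead of performing them.
class CameraStateSketch {
    static final int STOPPED = 0;
    static final int STARTED = 1;

    private final Object syncObject = new Object();
    private final List<String> events = new ArrayList<>();
    private boolean enabled;
    private boolean surfaceExists;
    private boolean visible = true;
    private int state = STOPPED;

    void enableView()  { synchronized (syncObject) { enabled = true;  checkCurrentState(); } }
    void disableView() { synchronized (syncObject) { enabled = false; checkCurrentState(); } }

    // Stand-in for the SurfaceHolder callbacks that toggle mSurfaceExist.
    void setSurfaceExists(boolean exists) {
        synchronized (syncObject) { surfaceExists = exists; checkCurrentState(); }
    }

    // Stand-in for a change of the view's visibility.
    void setVisible(boolean isVisible) {
        synchronized (syncObject) { visible = isVisible; checkCurrentState(); }
    }

    int state() { synchronized (syncObject) { return state; } }

    List<String> events() { synchronized (syncObject) { return new ArrayList<>(events); } }

    // Same decision as checkCurrentState(): run only when enabled, surface present
    // and visible; on a change, leave the old state before entering the new one.
    private void checkCurrentState() {
        int target = (enabled && surfaceExists && visible) ? STARTED : STOPPED;
        if (target != state) {
            if (state == STARTED) events.add("disconnectCamera"); // exit STARTED
            state = target;
            if (state == STARTED) events.add("connectCamera");    // enter STARTED
        }
    }
}
```

This is also why calling enableView() from onManagerConnected() is safe before the surface exists: the camera simply starts later, when the surface becomes available.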

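3. Loading the face detection model

To get better face detection performance, OpenCV ships several frontal-face detectors (face models) in its SDK, under ..\opencv-3.3.0-android-sdk\OpenCV-android-sdk\sdk\etc\. A comparison of the face detection models bundled with OpenCV found that the LBP model has better real-time performance, so this article uses the lbpcascade_frontalface.xml model from the lbpcascades directory, which was built from 3000 positive samples and 1500 negative samples. Copy it into the res/raw directory of the Android Studio project; at runtime it is written to the /data/data/com.jiangdg.opencv4android/app_cascade directory via getDir() (getDir("cascade", ...) prefixes the directory name with "app_").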

```java
// Copy the cascade model from res/raw into the app's private directory
InputStream is = getResources().openRawResource(R.raw.lbpcascade_frontalface);
File cascadeDir = getDir("cascade", Context.MODE_PRIVATE);
mCascadeFile = new File(cascadeDir, "lbpcascade_frontalface.xml");
FileOutputStream os = new FileOutputStream(mCascadeFile);

byte[] buffer = new byte[4096];
int bytesRead;
while ((bytesRead = is.read(buffer)) != -1) {
    os.write(buffer, 0, bytesRead);
}
is.close();
os.close();
```
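The copy loop above leaves both streams open if read() or write() throws. A minimal sketch of a reusable alternative, assuming a toolchain that supports try-with-resources: the helper class StreamCopy and its method copyAndClose() are hypothetical names, not part of Android or OpenCV. It copies with the same 4 KB buffer, closes both streams on every path, and returns the number of bytes copied.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;

// Hypothetical helper: copies all bytes from in to out and closes both streams,
// even when read() or write() throws.
final class StreamCopy {
    private StreamCopy() {}

    static long copyAndClose(InputStream in, OutputStream out) throws IOException {
        try (InputStream src = in; OutputStream dst = out) {
            byte[] buffer = new byte[4096];
            long total = 0;
            int bytesRead;
            while ((bytesRead = src.read(buffer)) != -1) {
                dst.write(buffer, 0, bytesRead);
                total += bytesRead;
            }
            return total;
        }
    }
}
```

In the activity this would reduce the copy to `StreamCopy.copyAndClose(getResources().openRawResource(R.raw.lbpcascade_frontalface), new FileOutputStream(mCascadeFile))`, still inside a try/catch for IOException.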

4. Face detection

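The face-detection sample project in opencv-3.3.0-android-sdk offers two ways to detect faces: CascadeClassifier and DetectionBasedTracker. CascadeClassifier is OpenCV's cascade classifier, used here for face detection through OpenCV's Java API, while DetectionBasedTracker performs face detection in native code through JNI. I tried both and found DetectionBasedTracker somewhat more stable than CascadeClassifier, which produced false detections at a noticeable rate.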

```java
package com.jiangdg.opencv4android.natives;

import org.opencv.core.Mat;
import org.opencv.core.MatOfRect;

public class DetectionBasedTracker {
    private long mNativeObj = 0;

    // Constructor: initialize the face detection engine
    public DetectionBasedTracker(String cascadeName, int minFaceSize) {
        mNativeObj = nativeCreateObject(cascadeName, minFaceSize);
    }

    // Start face detection
    public void start() {
        nativeStart(mNativeObj);
    }

    // Stop face detection
    public void stop() {
        nativeStop(mNativeObj);
    }

    // Set the minimum face size
    public void setMinFaceSize(int size) {
        nativeSetFaceSize(mNativeObj, size);
    }

    // Detect faces in a grayscale image
    public void detect(Mat imageGray, MatOfRect faces) {
        nativeDetect(mNativeObj, imageGray.getNativeObjAddr(), faces.getNativeObjAddr());
    }

    // Release native resources
    public void release() {
        nativeDestroyObject(mNativeObj);
        mNativeObj = 0;
    }

    // Native methods
    private static native long nativeCreateObject(String cascadeName, int minFaceSize);
    private static native void nativeDestroyObject(long thiz);
    private static native void nativeStart(long thiz);
    private static native void nativeStop(long thiz);
    private static native void nativeSetFaceSize(long thiz, int size);
    private static native void nativeDetect(long thiz, long inputImage, long faces);
}
```

```java
@Override
public Mat onCameraFrame(CameraBridgeViewBase.CvCameraViewFrame inputFrame) {
    // ... (code omitted)
    // Holds the detected face rectangles
    MatOfRect faces = new MatOfRect();
    // ... (code omitted)
    if (mNativeDetector != null) {
        mNativeDetector.detect(mGray, faces);
    }
    // Draw a box around each detected face
    Rect[] facesArray = faces.toArray();
    for (int i = 0; i < facesArray.length; i++) {
        Imgproc.rectangle(mRgba, facesArray[i].tl(), facesArray[i].br(), FACE_RECT_COLOR, 3);
    }
    return mRgba;
}
```
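DetectionBasedTracker keeps the address of a native C++ object in the long field mNativeObj and passes it to every native call. After release() the field is 0, so a later detect() would hand a null pointer to the C++ code and would most likely crash the process. One way to harden this pattern is to route every access through a guard and make release idempotent via AutoCloseable. The sketch below uses hypothetical names (NativeHandle, Creator, Destroyer) and replaces the native create/destroy calls with injected functions so it runs on a plain JVM; it is an illustration, not the sample's code.

```java
// Hypothetical sketch of the "native pointer stored in a long" pattern used by
// DetectionBasedTracker; create/destroy are injected so it runs without JNI.
final class NativeHandle implements AutoCloseable {
    interface Creator { long create(); }
    interface Destroyer { void destroy(long handle); }

    private final Destroyer destroyer;
    private long handle; // 0 means "no native object", like mNativeObj after release()

    NativeHandle(Creator creator, Destroyer destroyer) {
        this.destroyer = destroyer;
        this.handle = creator.create();
        if (handle == 0) {
            throw new IllegalStateException("native object creation failed");
        }
    }

    // Every native call should fetch the handle here, so 0 never reaches C++.
    synchronized long get() {
        if (handle == 0) {
            throw new IllegalStateException("native object already released");
        }
        return handle;
    }

    // Idempotent release: the destroyer runs at most once.
    @Override
    public synchronized void close() {
        if (handle != 0) {
            destroyer.destroy(handle);
            handle = 0;
        }
    }
}
```

Applied to DetectionBasedTracker, detect() would call `nativeDetect(mHandle.get(), ...)` and release() would become `mHandle.close()`. Note also that the FaceDetectActivity shown below never calls release(), so its native detector is never destroyed explicitly.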

Note: for reasons of length, the C/C++ implementation of face detection (and how it works) will be discussed in a later article.

III. Demo

1. FaceDetectActivity.java

```java
package com.jiangdg.opencv4android;

import android.content.Context;
import android.os.Bundle;
import android.support.annotation.Nullable;
import android.support.v7.app.AppCompatActivity;
import android.util.Log;
import android.view.WindowManager;

import com.jiangdg.opencv4android.natives.DetectionBasedTracker;

import org.opencv.android.BaseLoaderCallback;
import org.opencv.android.CameraBridgeViewBase;
import org.opencv.android.LoaderCallbackInterface;
import org.opencv.android.OpenCVLoader;
import org.opencv.core.Mat;
import org.opencv.core.MatOfRect;
import org.opencv.core.Rect;
import org.opencv.core.Scalar;
import org.opencv.core.Size;
import org.opencv.imgproc.Imgproc;
import org.opencv.objdetect.CascadeClassifier;

import java.io.File;
import java.io.FileNotFoundException;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;

import butterknife.BindView;
import butterknife.ButterKnife;

/**
 * Face detection
 *
 * Created by jiangdongguo on 2018/1/4.
 */
public class FaceDetectActivity extends AppCompatActivity implements CameraBridgeViewBase.CvCameraViewListener2 {
    private static final int JAVA_DETECTOR = 0;
    private static final int NATIVE_DETECTOR = 1;
    private static final String TAG = "FaceDetectActivity";
    @BindView(R.id.cameraView_face)
    CameraBridgeViewBase mCameraView;

    private Mat mGray;
    private Mat mRgba;
    private int mDetectorType = NATIVE_DETECTOR;
    private int mAbsoluteFaceSize = 0;
    private float mRelativeFaceSize = 0.2f;
    private DetectionBasedTracker mNativeDetector;
    private CascadeClassifier mJavaDetector;
    private static final Scalar FACE_RECT_COLOR = new Scalar(0, 255, 0, 255);
    private File mCascadeFile;

    private BaseLoaderCallback mLoaderCallback = new BaseLoaderCallback(this) {
        @Override
        public void onManagerConnected(int status) {
            switch (status) {
                case LoaderCallbackInterface.SUCCESS:
                    // OpenCV loaded successfully; now load our own native library
                    System.loadLibrary("detection_based_tracker");
                    try {
                        // Copy the face detection model file out of res/raw
                        InputStream is = getResources().openRawResource(R.raw.lbpcascade_frontalface);
                        File cascadeDir = getDir("cascade", Context.MODE_PRIVATE);
                        mCascadeFile = new File(cascadeDir, "lbpcascade_frontalface.xml");
                        FileOutputStream os = new FileOutputStream(mCascadeFile);
                        byte[] buffer = new byte[4096];
                        int bytesRead;
                        while ((bytesRead = is.read(buffer)) != -1) {
                            os.write(buffer, 0, bytesRead);
                        }
                        is.close();
                        os.close();
                        // Initialize the face detection engines with the model file
                        mJavaDetector = new CascadeClassifier(mCascadeFile.getAbsolutePath());
                        if (mJavaDetector.empty()) {
                            Log.e(TAG, "Failed to load cascade classifier");
                            mJavaDetector = null;
                        } else {
                            Log.d(TAG, "Loaded cascade classifier from " + mCascadeFile.getAbsolutePath());
                        }
                        mNativeDetector = new DetectionBasedTracker(mCascadeFile.getAbsolutePath(), 0);
                        cascadeDir.delete();
                    } catch (FileNotFoundException e) {
                        e.printStackTrace();
                    } catch (IOException e) {
                        e.printStackTrace();
                    }
                    // Start camera rendering
                    mCameraView.enableView();
                    break;
                default:
                    super.onManagerConnected(status);
                    break;
            }
        }
    };

    @Override
    protected void onCreate(@Nullable Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        getWindow().addFlags(WindowManager.LayoutParams.FLAG_FULLSCREEN);
        setContentView(R.layout.activity_facedetect);
        // Bind views
        ButterKnife.bind(this);
        mCameraView.setVisibility(CameraBridgeViewBase.VISIBLE);
        // Register the camera frame listener
        mCameraView.setCvCameraViewListener(this);
    }

    @Override
    protected void onResume() {
        super.onResume();
        // Initialize OpenCV statically, falling back to OpenCV Manager
        if (!OpenCVLoader.initDebug()) {
            Log.d(TAG, "Could not load the OpenCV native library; initializing via OpenCV Manager");
            OpenCVLoader.initAsync(OpenCVLoader.OPENCV_VERSION_3_3_0, this, mLoaderCallback);
        } else {
            Log.d(TAG, "OpenCV native library loaded successfully");
            mLoaderCallback.onManagerConnected(LoaderCallbackInterface.SUCCESS);
        }
    }

    @Override
    protected void onPause() {
        super.onPause();
        // Stop camera rendering
        if (mCameraView != null) {
            mCameraView.disableView();
        }
    }

    @Override
    protected void onDestroy() {
        super.onDestroy();
        // Stop camera rendering
        if (mCameraView != null) {
            mCameraView.disableView();
        }
    }

    @Override
    public void onCameraViewStarted(int width, int height) {
        // Grayscale image
        mGray = new Mat();
        // RGBA color image
        mRgba = new Mat();
    }

    @Override
    public void onCameraViewStopped() {
        mGray.release();
        mRgba.release();
    }

    @Override
    public Mat onCameraFrame(CameraBridgeViewBase.CvCameraViewFrame inputFrame) {
        mRgba = inputFrame.rgba();
        mGray = inputFrame.gray();
        // Set the minimum face size as a fraction of the frame height
        if (mAbsoluteFaceSize == 0) {
            int height = mGray.rows();
            if (Math.round(height * mRelativeFaceSize) > 0) {
                mAbsoluteFaceSize = Math.round(height * mRelativeFaceSize);
            }
            // mNativeDetector stays null if loading the model failed
            if (mNativeDetector != null) {
                mNativeDetector.setMinFaceSize(mAbsoluteFaceSize);
            }
        }
        // Collect the detected face rectangles
        MatOfRect faces = new MatOfRect();
        if (mDetectorType == JAVA_DETECTOR) {
            if (mJavaDetector != null) {
                mJavaDetector.detectMultiScale(mGray, faces, 1.1, 2, 2,
                        new Size(mAbsoluteFaceSize, mAbsoluteFaceSize), new Size());
            }
        } else if (mDetectorType == NATIVE_DETECTOR) {
            if (mNativeDetector != null) {
                mNativeDetector.detect(mGray, faces);
            }
        } else {
            Log.e(TAG, "Detection method is not selected!");
        }
        // Draw a box around each detected face
        Rect[] facesArray = faces.toArray();
        for (int i = 0; i < facesArray.length; i++) {
            Imgproc.rectangle(mRgba, facesArray[i].tl(), facesArray[i].br(), FACE_RECT_COLOR, 3);
        }
        Log.i(TAG, "Detected " + facesArray.length + " face(s)");
        return mRgba;
    }
}
```
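In onCameraFrame() the minimum face size is computed once as a fixed fraction (mRelativeFaceSize = 0.2f) of the frame height, so a 480-row frame yields 96 px and a 720-row frame yields 144 px. A rough way to see why this minimum matters for speed: a cascade detector scans the image with a detection window enlarged by scaleFactor (1.1 here) at each step, and window sizes below the minimum are skipped. The sketch below is a simplification under stated assumptions: the class FaceSizeSketch is hypothetical, the base window of 24 px is an assumed model window size, and OpenCV's real detectMultiScale differs in details (for example it also honors maxSize).

```java
// Hypothetical helpers: the min-face-size rule from onCameraFrame(), plus a
// simplified count of the window scales a cascade detector would scan.
final class FaceSizeSketch {
    private FaceSizeSketch() {}

    // Same rule as onCameraFrame(): a fixed fraction of the frame height, rounded.
    static int absoluteFaceSize(int frameRows, float relativeFaceSize) {
        return Math.max(Math.round(frameRows * relativeFaceSize), 0);
    }

    // Simplified scale enumeration: the window grows by scaleFactor per step,
    // sizes below minSize are skipped, and scanning stops once the window is
    // taller than the frame. The window size (e.g. 24) is an assumed input.
    static int scaleCount(int window, double scaleFactor, int minSize, int frameRows) {
        if (scaleFactor <= 1.0) {
            throw new IllegalArgumentException("scaleFactor must be > 1");
        }
        int count = 0;
        for (double factor = 1.0; ; factor *= scaleFactor) {
            long size = Math.round(window * factor);
            if (size > frameRows) break;   // window no longer fits in the frame
            if (size < minSize) continue;  // smaller than the minimum face size
            count++;
        }
        return count;
    }
}
```

Under these assumptions, a 480-row frame with scaleFactor 1.1 gives 32 window sizes when no minimum is set, but only 17 with the 96 px minimum, roughly halving the number of passes.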

2. Layout file activity_facedetect.xml

```xml
<?xml version="1.0" encoding="utf-8"?>
<RelativeLayout xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:opencv="http://schemas.android.com/apk/res-auto"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    android:orientation="vertical">

    <org.opencv.android.JavaCameraView
        android:id="@+id/cameraView_face"
        android:layout_width="match_parent"
        android:layout_height="match_parent"
        android:visibility="gone"
        opencv:camera_id="any"
        opencv:show_fps="true" />

</RelativeLayout>
```

3. AndroidManifest.xml

```xml
<?xml version="1.0" encoding="utf-8"?>
<manifest xmlns:android="http://schemas.android.com/apk/res/android"
    package="com.jiangdg.opencv4android">

    <uses-permission android:name="android.permission.CAMERA"/>

    <uses-feature android:name="android.hardware.camera" android:required="false"/>
    <uses-feature android:name="android.hardware.camera.autofocus" android:required="false"/>
    <uses-feature android:name="android.hardware.camera.front" android:required="false"/>
    <uses-feature android:name="android.hardware.camera.front.autofocus" android:required="false"/>

    <supports-screens android:resizeable="true"
        android:smallScreens="true"
        android:normalScreens="true"
        android:largeScreens="true"
        android:anyDensity="true" />

    <application
        android:allowBackup="true"
        android:icon="@mipmap/ic_launcher"
        android:label="@string/app_name"
        android:roundIcon="@mipmap/ic_launcher_round"
        android:supportsRtl="true"
        android:theme="@style/AppTheme">
        <activity android:name=".MainActivity">
            <intent-filter>
                <action android:name="android.intent.action.MAIN" />
                <category android:name="android.intent.category.LAUNCHER" />
            </intent-filter>
        </activity>
        <activity android:name=".HelloOpenCVActivity"
            android:screenOrientation="landscape"
            android:configChanges="keyboardHidden|orientation"/>
        <activity android:name=".FaceDetectActivity"
            android:screenOrientation="landscape"
            android:configChanges="keyboardHidden|orientation"/>
    </application>
</manifest>
```

Source code: https://github.com/jiangdongguo/OpenCV4Android (stars and forks welcome)

Updated on 2018-3-13

IV. Building with CMake and Upgrading to OpenCV 3.4.1

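The project above has two annoyances: (1) every time the .so is rebuilt, ndk-build has to be run by hand; (2) there is no code completion and no error or warning highlighting while editing the C/C++ code. Either one is frustrating, so here I refactor the project on top of OpenCV 3.4.1 and build it with CMake instead.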

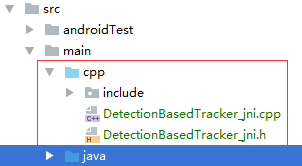

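1. Create the app/src/main/cpp directory. Copy DetectionBasedTracker_jni.cpp and DetectionBasedTracker_jni.h from the jni directory into it, and also copy the entire include directory from ..\opencv-3.4.1-android-sdk\OpenCV-android-sdk\sdk\native\jni\ in the OpenCV 3.4.1 SDK into this cpp directory. The whole app/src/main/jni directory can then be deleted.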

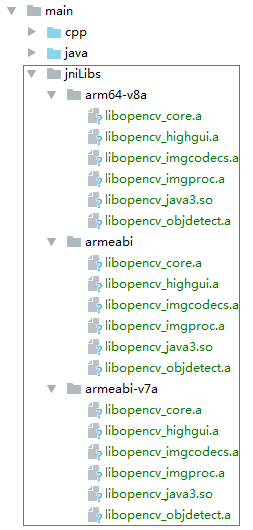

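2. Create the app/src/main/jniLibs directory and copy the shared libraries (.so) and static libraries (.a) for the relevant ABIs from ..\opencv-3.4.1-android-sdk\OpenCV-android-sdk\sdk\native\libs and staticlibs in the OpenCV 3.4.1 SDK into it. Then delete the libs and obj directories.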

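3. Create a script file named CMakeLists.txt in the app directory; it holds the script CMake needs for the build. Note that CMakeLists.txt can only import and link the static and shared libraries you actually copied into the jniLibs directory: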

```cmake
# Sets the minimum version of CMake required to build the native
# library. You should either keep the default value or only pass a
# value of 3.4.0 or lower.

cmake_minimum_required(VERSION 3.4.1)

# Creates and names a library, sets it as either STATIC
# or SHARED, and provides the relative paths to its source code.
# You can define multiple libraries, and CMake builds it for you.
# Gradle automatically packages shared libraries with your APK.

set(CMAKE_VERBOSE_MAKEFILE on)
set(libs "${CMAKE_SOURCE_DIR}/src/main/jniLibs")
include_directories(${CMAKE_SOURCE_DIR}/src/main/cpp/include)

#--------------------------------------------------- import ---------------------------------------------------#
add_library(libopencv_java3 SHARED IMPORTED )
set_target_properties(libopencv_java3 PROPERTIES
    IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_java3.so")

add_library(libopencv_core STATIC IMPORTED )
set_target_properties(libopencv_core PROPERTIES
    IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_core.a")

add_library(libopencv_highgui STATIC IMPORTED )
set_target_properties(libopencv_highgui PROPERTIES
    IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_highgui.a")

add_library(libopencv_imgcodecs STATIC IMPORTED )
set_target_properties(libopencv_imgcodecs PROPERTIES
    IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_imgcodecs.a")

add_library(libopencv_imgproc STATIC IMPORTED )
set_target_properties(libopencv_imgproc PROPERTIES
    IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_imgproc.a")

add_library(libopencv_objdetect STATIC IMPORTED )
set_target_properties(libopencv_objdetect PROPERTIES
    IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_objdetect.a")

#add_library(libopencv_calib3d STATIC IMPORTED )
#set_target_properties(libopencv_calib3d PROPERTIES
#    IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_calib3d.a")
#
#
#add_library(libopencv_dnn STATIC IMPORTED )
#set_target_properties(libopencv_dnn PROPERTIES
#    IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_dnn.a")
#
#add_library(libopencv_features2d STATIC IMPORTED )
#set_target_properties(libopencv_features2d PROPERTIES
#    IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_features2d.a")
#
#add_library(libopencv_flann STATIC IMPORTED )
#set_target_properties(libopencv_flann PROPERTIES
#    IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_flann.a")
#
#add_library(libopencv_ml STATIC IMPORTED )
#set_target_properties(libopencv_ml PROPERTIES
#    IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_ml.a")
#
#add_library(libopencv_photo STATIC IMPORTED )
#set_target_properties(libopencv_photo PROPERTIES
#    IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_photo.a")
#
#add_library(libopencv_shape STATIC IMPORTED )
#set_target_properties(libopencv_shape PROPERTIES
#    IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_shape.a")
#
#add_library(libopencv_stitching STATIC IMPORTED )
#set_target_properties(libopencv_stitching PROPERTIES
#    IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_stitching.a")
#
#add_library(libopencv_superres STATIC IMPORTED )
#set_target_properties(libopencv_superres PROPERTIES
#    IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_superres.a")
#
#add_library(libopencv_video STATIC IMPORTED )
#set_target_properties(libopencv_video PROPERTIES
#    IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_video.a")
#
#add_library(libopencv_videoio STATIC IMPORTED )
#set_target_properties(libopencv_videoio PROPERTIES
#    IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_videoio.a")
#
#add_library(libopencv_videostab STATIC IMPORTED )
#set_target_properties(libopencv_videostab PROPERTIES
#    IMPORTED_LOCATION "${libs}/${ANDROID_ABI}/libopencv_videostab.a")

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=gnu++11 -fexceptions -frtti")

add_library( # Sets the name of the library.
             opencv341

             # Sets the library as a shared library.
             SHARED

             # Provides a relative path to your source file(s).
             # Associated headers in the same location as their source
             # file are automatically included.
             src/main/cpp/DetectionBasedTracker_jni.cpp)

find_library( # Sets the name of the path variable.
              log-lib

              # Specifies the name of the NDK library that
              # you want CMake to locate.
              log)

target_link_libraries(opencv341 android log
    libopencv_java3     #used for java sdk
    #17 static libs in total
    #libopencv_calib3d
    libopencv_core
    #libopencv_dnn
    #libopencv_features2d
    #libopencv_flann
    libopencv_highgui
    libopencv_imgcodecs
    libopencv_imgproc
    #libopencv_ml
    libopencv_objdetect
    #libopencv_photo
    #libopencv_shape
    #libopencv_stitching
    #libopencv_superres
    #libopencv_video
    #libopencv_videoio
    #libopencv_videostab
    ${log-lib}
    )
```

Because the library is declared as opencv341 here, CMake produces libopencv341.so rather than libdetection_based_tracker.so, so the System.loadLibrary("detection_based_tracker") call in FaceDetectActivity must be changed to System.loadLibrary("opencv341") accordingly.

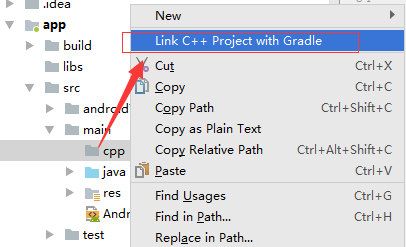

4. Right-click the app/src/main/cpp directory, choose "Link C++ Project with Gradle", and browse to this project's CMakeLists.txt to connect the C++ build to Gradle.

5. Update the project's openCVLibrary330 module to openCVLibrary341, and modify the build.gradle file in the app directory:

```groovy
apply plugin: 'com.android.application'

android {
    compileSdkVersion 25
    defaultConfig {
        // ... (code omitted)
        externalNativeBuild {
            cmake {
                arguments "-DANDROID_ARM_NEON=TRUE", "-DANDROID_TOOLCHAIN=clang", "-DCMAKE_BUILD_TYPE=Release"
                //'-DANDROID_STL=gnustl_static'
                cppFlags "-std=c++11", "-frtti", "-fexceptions"
            }
        }
        // Only build .so files for the listed target ABIs
        ndk {
            abiFilters 'armeabi-v7a', 'arm64-v8a', 'armeabi'
        }
    }
    // ... (code omitted)
    externalNativeBuild {
        cmake {
            path 'CMakeLists.txt'
        }
    }
}

dependencies {
    // ... (code omitted)
    implementation project(':openCVLibrary341')
}
```

相关文章:

活动|跟着微软一起,拥抱开源吧!

由开源社主办的中国开源年会2016 (COSCon16 - China Open Source Conference 2016) 即将于今年10月15日-16日在北京举办。微软大咖将为您呈现区块链,容器,大数据,Xamarin等时下热点技术,参会者还可获取价值1,500 元 Azure 服务使用…

【HDU/算法】最短路问题 杭电OJ 2544 (Dijkstra,Dijkstra+priority_queue,Floyd,Bellman_ford,SPFA)

最短路径问题是图论中很重要的问题。 解决最短路径几个经典的算法 1、Dijkstra算法 单源最短路径(贪心),还有用 priority_queue 进行优化的 Dijkstra 算法。 2、bellman-ford算法 例题:【ACM】POJ 3259 Wormholes 允许负权边…

javaSE基础知识 1.5整数类型

整数的四种声明类型它们分别是,byte,short,int,long,这四种类型所占用的空间是不同的byte是占用1个字节,它的取值范围是 -128~127,short是占用2个字节,他的取值范围是-32768~32767&a…

源码分析-GLSurfaceView的内部实现

GLSurfaceView类是继承自SurfaceView的,并且实现了SurfaceHolder.Callback2接口。GLSurfaceView内部管理着一个surface,专门负责OpenGL渲染。GLSurfaceView内部通过GLThread和EGLHelper为我们完成了EGL环境渲染和渲染线程的创建及管理,使我们…

【POJ/算法】 3259 Wormholes(Bellman-Ford算法, SPFA ,FLoyd算法)

Bellman-Ford算法 Bellman-Ford算法的优点是可以发现负圈,缺点是时间复杂度比Dijkstra算法高。而SPFA算法是使用队列优化的Bellman-Ford版本,其在时间复杂度和编程难度上都比其他算法有优势。 Bellman-Ford算法流程分为三个阶段: 第一步&am…

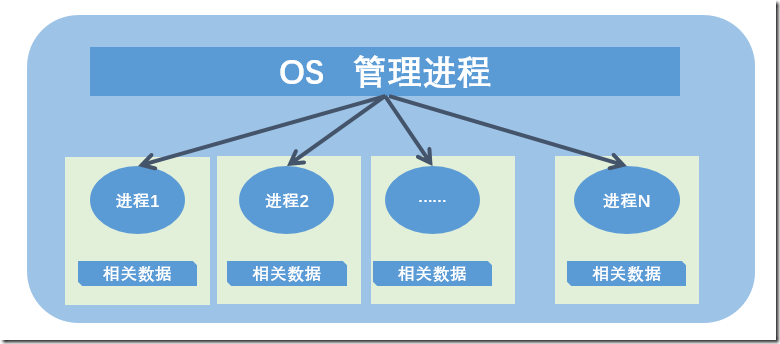

进程控制概念简介 多线程上篇(三)

进程控制 进程的基本数据信息是操作系统控制管理进程的数据集合,这些信息就是用来控制进程的,此处我们说的进程控制就是进程的管理。比如进程有状态,那么进程的创建、终止,状态的切换,这都不是进程自主进行的ÿ…

Android OpenGL使用GLSurfaceView预览视频

Android OpenGL使用GLSurfaceView预览视频第一章 相关知识介绍在介绍具体的功能之前,先对一些主要的类和方法进行一些介绍,这样可以更好的理解整个程序1.1 GLSurfaceView在谷歌的官方文档中是这样解释GLSurfaceView的:An implementation of S…

【Android 基础】Animation 动画介绍和实现

转载自:http://www.cnblogs.com/yc-755909659/p/4290114.html1.Animation 动画类型Android的animation由四种类型组成:XML中alph渐变透明度动画效果scale渐变尺寸伸缩动画效果translate画面转换位置移动动画效果rotate画面转移旋转动画效果JavaCode中Alp…

【Codeforces】1111B - Average Superhero Gang Power

http://codeforces.com/problemset/problem/1111/B n 表示要输入的数据的个数 k 最每一个数据最多可以进行多少次操作 m 一共可以进行多少次操作 一次操作:删除这个数,或者给这个数加1 如果n为1的话,那么只要找出m和k的最小值加到那个数…

刷前端面经笔记(七)

1.描述一下渐进增强和优雅降级 优雅降级(graceful degradation):一开始就构建站点的完整功能,然后针对浏览器测试和修复。渐进增强(progressive enhancement):一开始只构建站点的最少特性,然后不断针对各浏览器追加功能。 2.为什么…

AR资料与连接梳理

AR引擎相关技术 ------------------------------ ARcore:https://developers.google.cn/ar/discover/ ARkit:https://developer.apple.com/arkit/ 以上重点关注,比较新有一些新的功能大家可以自行体验。 ARToolkithttp://www.artoolkit.orght…

Queues 队列

1. Definiation What is a queue? A queue is a list. With a queue, inseration is done at one end (known as rear) whereas deletion is performed at the other end (known as front). 2. Operations 指针对列 无法自定义队长 // array queue #include<iostream> u…

【HDU】1005 Number Sequence (有点可爱)

http://acm.hdu.edu.cn/showproblem.php?pid1005 A number sequence is defined as follows: f(1) 1, f(2) 1, f(n) (A * f(n - 1) B * f(n - 2)) mod 7. Given A, B, and n, you are to calculate the value of f(n). 直接递归求解f(n)的话,会MLE 在计算…

CNCF案例研究:奇虎360

公司:奇虎360地点:中国北京行业:计算机软件 挑战 中国软件巨头奇虎360科技的搜索部门,so.com是中国第二大搜索引擎,市场份额超过35%。该公司一直在使用传统的手动操作来部署环境,随着项目数量的…

C#代码实现对Windows凭据的管理

今天有个任务,那就是使用C#代码实现对windows凭据管理的操作。例如:向windows凭据管理中添加凭据、删除凭据以及查询凭据等功能。于是乎,就开始在网上查找。经过漫长的查询路,终于在一片英文博客中找到了相关代码。经过实验&#…

Android:JNI 与 NDK到底是什么

前言 在Android开发中,使用 NDK开发的需求正逐渐增大但很多人却搞不懂 JNI 与 NDK 到底是怎么回事今天,我将先介绍JNI 与 NDK & 之间的区别,手把手进行 NDK的使用教学,希望你们会喜欢 目录 1. JNI介绍 1.1 简介 定义&…

【ACM】LightOJ - 1008 Fibsieve`s Fantabulous Birthday (找规律,找...)

https://vjudge.net/problem/LightOJ-1008 题目很好理解,第一行表示测试样例的个数,接下来输入一个大于等于1的数,按照格式输出这个数的坐标 蓝色的是 奇数的平方; 红色的是 偶数的平方; 黄色的是对角线:…

Computed property XXX was assigned to but it has no setter

报错视图: 原因: 组件中v-model“XXX”,而XXX是vuex state中的某个变量vuex中是单项流,v-model是vue中的双向绑定,但是在computed中只通过get获取参数值,没有set无法改变参数值解决方法: 1.在co…

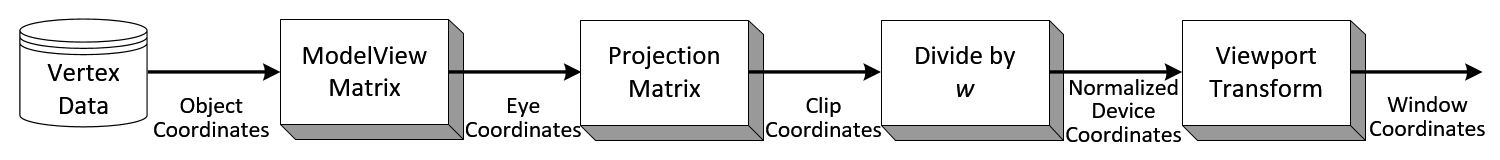

OpenGL 矩阵变换

origin refer :http://www.songho.ca/opengl/gl_transform.html#modelviewOpenGL 矩阵变换Related Topics: OpenGL Pipeline, OpenGL Projection Matrix, OpenGL Matrix Class Download: matrixModelView.zip, matrixProjection.zipOverviewOpenGL Transform MatrixExample: GL…

2016.8.11 DataTable合并及排除重复方法

合并: DataTable prosxxx; DataTable pstaryyy; //将两张DataTable合成一张 foreach (DataRow dr in pstar.Rows) { pros.ImportRow(dr); } DataTable设置主键,并判断重复 DataTable allpros xxx; 单列设为主键: //设置第某列为主键 allpros.…

【ACM】LightOJ - 1010 Knights in Chessboard(不是搜索...)

https://vjudge.net/problem/LightOJ-1010 给定一个mn的棋盘,你想把棋子放在哪里。你必须找到棋盘上最多可以放置的骑士数量,这样就不会有两个骑士互相攻击。不熟悉棋手的注意,棋手可以在棋盘上攻击8个位置,如下图所示。 不论输入…

webpack-dev-server 和webapck --watch的区别

webpack-dev-server 和webapck --watch 都可以监测到代码变化 , 区别是:webpack-der-server 监测到代码变化后,浏览器可以看到及时更新的效果,但是并没有自动打包修改的代码; webpack --watch 在监测到代码变化后自动打…

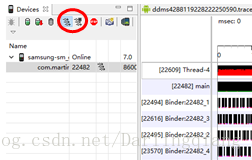

Android 应用进行性能分析/APP/系统性能分析

如何对 Android 应用进行性能分析记录一下自己在使用DDMS的过程:开启AS,打开并运行项目 找到TOOL/选择Android Device Monitor一款 App 流畅与否安装在自己的真机里,玩几天就能有个大概的感性认识。不过通过专业的分析工具可以使我们更好的分…

公钥与私钥,HTTPS详解

1.公钥与私钥原理1)鲍勃有两把钥匙,一把是公钥,另一把是私钥2)鲍勃把公钥送给他的朋友们----帕蒂、道格、苏珊----每人一把。3)苏珊要给鲍勃写一封保密的信。她写完后用鲍勃的公钥加密,就可以达到保密的效果。4)鲍勃收信后,用私钥…

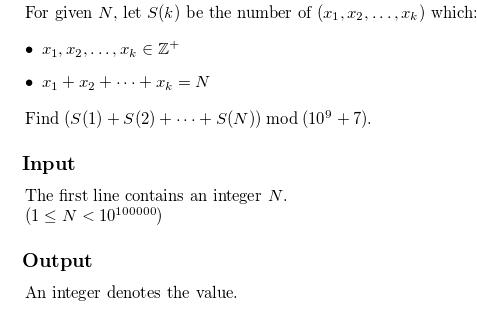

【ACM】杭电OJ 4704 Sum (隔板原理+组合数求和公式+费马小定理+快速幂)

http://acm.hdu.edu.cn/showproblem.php?pid4704 1.隔板原理 1~N有N个元素,每个元素代表一个1.分成K个数,即在(N-1)个空挡里放置(K-1)块隔板 即求组合数C(N-1,0)C(N-1,1)...C(N-1,N-1) 2.组合数求和公式 C(n,0)C(…

Vue 中 CSS 动画原理

下面这段代码,是点击按钮实现hello world显示与隐藏 <div id"root"><div v-if"show">hello world</div><button click"handleClick">按钮</button> </div> let vm new Vue({el: #root,data: {s…

【ACM】UVA - 340 Master-Mind Hints(一定要好好学英语...)

https://vjudge.net/problem/UVA-340 N 表示 密码的个数 第一行是正确的密码 下面的行直到N个0之前,都是猜测的序列,输出的括号(A,B) A表示对应位置与密码相符合的个数,B表示出现在序列中的数字但是位…

SLAM的前世今生

SLAM的前世 从研究生开始切入到视觉SLAM领域,应用背景为AR中的视觉导航与定位。 定位、定向、测速、授时是人们惆怅千年都未能完全解决的问题,最早的时候,古人只能靠夜观天象和司南来做简单的定向。直至元代,出于对定位的需求&a…

No resource found that matches the given name '@style/Theme.AppCompat.Light'

为什么80%的码农都做不了架构师?>>> Android导入项目时出现此问题的解决办法: 1.查看是否存在此目录(D:\android-sdk\extras\android\support\v7\appcompat),若没有此目录,在项目右键Android T…

极限编程 (Extreme Programming) - 迭代计划 (Iterative Planning)

(Source: XP - Iteration Planning) 在每次迭代开始时调用迭代计划会议,以生成该迭代的编程任务计划。每次迭代为1到3周。 客户从发布计划中按照对客户最有价值的顺序选择用户故事进行此次迭代。还选择了要修复的失败验收测试。客户选择的用户故事的估计总计达到上次…