Receiving Media Streams with the LIVE555 RTSP Client: Analysis and Test Code

testRTSPClient.cpp, in the testProgs directory of LIVE555, tests receiving the media stream specified by an RTSP URL. The commands it sends to the server are DESCRIBE, SETUP, PLAY and TEARDOWN.
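The asynchronous request/response pattern behind these commands can be sketched with a small standalone model. The code below does not use LIVE555 or the network: `ToyClient`, `send`, `setupNext` and `runToySession` are hypothetical names, and the model issues TEARDOWN directly after the PLAY response, whereas the real client waits for a timer, an RTCP "BYE" or an error. It only illustrates that each command returns immediately and that its response handler runs later from the event loop and issues the next command.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Toy model only: no LIVE555 types and no network I/O. All names are hypothetical.
struct ToyClient {
    std::deque<std::function<void()>> pending;  // response handlers waiting in the "event loop"
    std::vector<std::string> sent;              // commands in the order they were sent
    unsigned subsessionsLeft = 0;               // subsessions that still need a SETUP

    // "Sends" a command: records it and queues its response handler, then returns at once.
    void send(const std::string& command, std::function<void()> onResponse) {
        sent.push_back(command);
        pending.push_back(std::move(onResponse));
    }

    // Mirrors the role of setupNextSubsession(): SETUP each subsession, then PLAY.
    void setupNext() {
        if (subsessionsLeft > 0) {
            --subsessionsLeft;
            send("SETUP", [this] { setupNext(); });
        } else {
            // Simplification: the toy tears down as soon as PLAY has been answered.
            send("PLAY", [this] { send("TEARDOWN", [] {}); });
        }
    }
};

// Runs one toy session and returns the commands in the order they were sent.
std::vector<std::string> runToySession(unsigned numSubsessions) {
    assert(numSubsessions > 0);  // a session without subsessions is treated as an error
    ToyClient client;
    client.subsessionsLeft = numSubsessions;
    client.send("DESCRIBE", [&client] { client.setupNext(); });

    // Minimal "event loop": deliver queued responses until none are left.
    while (!client.pending.empty()) {
        std::function<void()> handler = std::move(client.pending.front());
        client.pending.pop_front();
        handler();
    }
    return client.sent;
}
```

For a session with two subsessions (for example audio and video), `runToySession(2)` yields DESCRIBE, SETUP, SETUP, PLAY, TEARDOWN.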

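1. Set up the usage environment: create a BasicTaskScheduler object and a BasicUsageEnvironment object (via BasicTaskScheduler::createNew and BasicUsageEnvironment::createNew);

2. Create an RTSPClient object;

3. Send an RTSP "DESCRIBE" command to the server to obtain the stream's SDP description. This is an asynchronous operation: RTSPClient::sendDescribeCommand;

4. Handle the "DESCRIBE" response returned by the server, i.e. the SDP description: create a MediaSession from the SDP description, then create a MediaSubsessionIterator that is used to set up the data source of each subsession. continueAfterDESCRIBE is the callback function;

5. Send an RTSP "SETUP" command to the server. This is an asynchronous operation, issued in setupNextSubsession: RTSPClient::sendSetupCommand;

6. Handle the "SETUP" response returned by the server: create a MediaSink object (here a DummySink) to receive the stream data. continueAfterSETUP is the callback function;

7. Send an RTSP "PLAY" command to the server. This is an asynchronous operation, issued in setupNextSubsession: RTSPClient::sendPlayCommand;

8. Handle the "PLAY" response returned by the server: set a timer that ends the reception of the stream. continueAfterPLAY is the callback function;

9. Receive the stream data, which includes audio and video streams. MediaSubsession::mediumName() tells whether a stream is video ("video") or audio ("audio"), and MediaSubsession::codecName() tells which codec is used. The amount of data differs from call to call: frameSize gives the number of bytes received in the current call, and the data itself is in fReceiveBuffer. 0x00000001 has to be prepended to each chunk of received video data so that FFmpeg can decode it (this applies to NAL-unit based codecs such as H.264);

10. When an error occurs or reception ends normally, send an RTSP "TEARDOWN" command to the server. This is an asynchronous operation, issued in shutdownStream: RTSPClient::sendTeardownCommand.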
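Step 9 can be illustrated with a small helper that puts the start code in front of one received frame. This is a minimal sketch, not code from testRTSPClient.cpp: `prependStartCode` is a hypothetical name, and it assumes the video subsession delivers NAL units without start codes (as for H.264), with `frame` and `frameSize` standing in for `fReceiveBuffer` and the `frameSize` passed to `afterGettingFrame`.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Returns a copy of one received frame with the 4-byte start code 00 00 00 01
// placed in front of it. "prependStartCode" is a hypothetical helper name.
std::vector<std::uint8_t> prependStartCode(const std::uint8_t* frame, std::size_t frameSize) {
    assert(frame != nullptr || frameSize == 0);  // a null buffer is only valid when empty
    static const std::uint8_t kStartCode[4] = {0x00, 0x00, 0x00, 0x01};

    std::vector<std::uint8_t> out;
    out.reserve(sizeof(kStartCode) + frameSize);
    out.insert(out.end(), kStartCode, kStartCode + sizeof(kStartCode));
    if (frameSize > 0) {
        out.insert(out.end(), frame, frame + frameSize);
    }
    return out;
}
```

In a sink, this could be called from `afterGettingFrame`, and the returned buffer handed to the decoder.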

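Notes:

1. Each stream needs its own RTSPClient object.

2. Setting eventLoopWatchVariable to a non-zero value at some point makes the event loop return, so that the program can end normally.

3. MediaSource is the base class of all sources and MediaSink is the base class of all sinks; both inherit from the Medium class. A MediaSession represents an RTP session and may contain several subsessions (MediaSubsession). Source and sink are connected through the RTP subsession. Source: the sending side, where the stream starts. Sink: the receiving side, where the stream ends and the data is saved to a file or displayed. Data passes from the source to the sink. Each MediaSubsession maintains a source and a sink internally.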
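Note 2 relies on the event loop re-checking the watch variable between steps. The following standalone sketch models that behaviour without LIVE555: `runToyEventLoop` and `singleStep` are hypothetical stand-ins, and the real `doEventLoop` additionally handles sockets and delayed tasks in each step.

```cpp
#include <cassert>
#include <functional>

// Toy stand-in for an event loop with a watch variable (hypothetical names).
// Repeats "singleStep" until *watchVariable becomes non-zero and returns
// the number of steps that were executed.
unsigned runToyEventLoop(volatile char* watchVariable, const std::function<void()>& singleStep) {
    assert(watchVariable != nullptr);
    unsigned steps = 0;
    while (*watchVariable == 0) {  // checked before every step
        singleStep();              // one round of event handling
        ++steps;
    }
    return steps;
}
```

A handler that sets the variable during the third step ends the loop after exactly three steps, which is the same effect `shutdownStream` has in the test code when it sets `eventLoopWatchVariable = 1`.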

The following test code is based on testRTSPClient.cpp:

#include "funset.hpp"
#include <cstdio> // sprintf
#include <iostream>
#include <thread>
#include <chrono>
#include <liveMedia.hh>
#include <BasicUsageEnvironment.hh>

namespace {

// Forward function definitions:

// RTSP 'response handlers':
void continueAfterDESCRIBE(RTSPClient* rtspClient, int resultCode, char* resultString);
void continueAfterSETUP(RTSPClient* rtspClient, int resultCode, char* resultString);
void continueAfterPLAY(RTSPClient* rtspClient, int resultCode, char* resultString);

// Other event handler functions:
// called when a stream's subsession (e.g., audio or video substream) ends
void subsessionAfterPlaying(void* clientData);
// called when a RTCP "BYE" is received for a subsession
void subsessionByeHandler(void* clientData, char const* reason);
// called at the end of a stream's expected duration (if the stream has not already signaled its end using a RTCP "BYE")
void streamTimerHandler(void* clientData);

// The main streaming routine (for each "rtsp://" URL):
void openURL(UsageEnvironment& env, char const* progName, char const* rtspURL);

// Used to iterate through each stream's 'subsessions', setting up each one:
void setupNextSubsession(RTSPClient* rtspClient);

// Used to shut down and close a stream (including its "RTSPClient" object):
void shutdownStream(RTSPClient* rtspClient, int exitCode = 1);

// A function that outputs a string that identifies each stream (for debugging output). Modify this if you wish:

UsageEnvironment& operator<<(UsageEnvironment& env, const RTSPClient& rtspClient)
{
    return env << "[URL:\"" << rtspClient.url() << "\"]: ";
}

// A function that outputs a string that identifies each subsession (for debugging output). Modify this if you wish:
UsageEnvironment& operator<<(UsageEnvironment& env, const MediaSubsession& subsession)
{
    return env << subsession.mediumName() << "/" << subsession.codecName();
}

char eventLoopWatchVariable = 0;

// Define a class to hold per-stream state that we maintain throughout each stream's lifetime:

class StreamClientState {
public:
    StreamClientState();
    virtual ~StreamClientState();

public:
    MediaSubsessionIterator* iter;
    MediaSession* session;
    MediaSubsession* subsession;
    TaskToken streamTimerTask;
    double duration;
};

// If you're streaming just a single stream (i.e., just from a single URL, once), then you can define and use just a single
// "StreamClientState" structure, as a global variable in your application. However, because - in this demo application - we're
// showing how to play multiple streams, concurrently, we can't do that. Instead, we have to have a separate "StreamClientState"
// structure for each "RTSPClient". To do this, we subclass "RTSPClient", and add a "StreamClientState" field to the subclass:
class ourRTSPClient : public RTSPClient {
public:
    static ourRTSPClient* createNew(UsageEnvironment& env, char const* rtspURL,
        int verbosityLevel = 0, char const* applicationName = NULL, portNumBits tunnelOverHTTPPortNum = 0);

protected:
    ourRTSPClient(UsageEnvironment& env, char const* rtspURL,
        int verbosityLevel, char const* applicationName, portNumBits tunnelOverHTTPPortNum);
    // called only by createNew();
    virtual ~ourRTSPClient();

public:
    StreamClientState scs;
};

// Define a data sink (a subclass of "MediaSink") to receive the data for each subsession (i.e., each audio or video 'substream').

// In practice, this might be a class (or a chain of classes) that decodes and then renders the incoming audio or video.

// Or it might be a "FileSink", for outputting the received data into a file (as is done by the "openRTSP" application).

// In this example code, however, we define a simple 'dummy' sink that receives incoming data, but does nothing with it.

class DummySink : public MediaSink {
public:
    static DummySink* createNew(UsageEnvironment& env,
        MediaSubsession& subsession, // identifies the kind of data that's being received
        char const* streamId = NULL); // identifies the stream itself (optional)

private:
    DummySink(UsageEnvironment& env, MediaSubsession& subsession, char const* streamId);
    // called only by "createNew()"
    virtual ~DummySink();

    static void afterGettingFrame(void* clientData, unsigned frameSize, unsigned numTruncatedBytes,
        struct timeval presentationTime, unsigned durationInMicroseconds);
    void afterGettingFrame(unsigned frameSize, unsigned numTruncatedBytes,
        struct timeval presentationTime, unsigned durationInMicroseconds);

private:
    // redefined virtual functions:
    virtual Boolean continuePlaying();

private:
    u_int8_t* fReceiveBuffer;
    MediaSubsession& fSubsession;
    char* fStreamId;
};

#define RTSP_CLIENT_VERBOSITY_LEVEL 1 // by default, print verbose output from each "RTSPClient"

static unsigned rtspClientCount = 0; // Counts how many streams (i.e., "RTSPClient"s) are currently in use.

void openURL(UsageEnvironment& env, char const* progName, char const* rtspURL)
{
    // Begin by creating a "RTSPClient" object. Note that there is a separate "RTSPClient" object for each stream that we wish
    // to receive (even if more than one stream uses the same "rtsp://" URL).
    RTSPClient* rtspClient = ourRTSPClient::createNew(env, rtspURL, RTSP_CLIENT_VERBOSITY_LEVEL, progName);
    if (rtspClient == NULL) {
        env << "Failed to create a RTSP client for URL \"" << rtspURL << "\": " << env.getResultMsg() << "\n";
        return;
    }

    ++rtspClientCount;

    // Next, send a RTSP "DESCRIBE" command, to get a SDP description for the stream.
    // Note that this command - like all RTSP commands - is sent asynchronously; we do not block, waiting for a response.
    // Instead, the following function call returns immediately, and we handle the RTSP response later, from within the event loop:
    rtspClient->sendDescribeCommand(continueAfterDESCRIBE);
}

// Implementation of the RTSP 'response handlers':

void continueAfterDESCRIBE(RTSPClient* rtspClient, int resultCode, char* resultString)
{
    do {
        UsageEnvironment& env = rtspClient->envir(); // alias
        StreamClientState& scs = ((ourRTSPClient*)rtspClient)->scs; // alias

        if (resultCode != 0) {
            env << *rtspClient << "Failed to get a SDP description: " << resultString << ", resultCode: " << resultCode << "\n";
            delete[] resultString;
            break;
        }

        char* const sdpDescription = resultString;
        env << *rtspClient << "Got a SDP description:\n" << sdpDescription << "\n";

        // Create a media session object from this SDP description:
        scs.session = MediaSession::createNew(env, sdpDescription);
        delete[] sdpDescription; // because we don't need it anymore
        if (scs.session == NULL) {
            env << *rtspClient << "Failed to create a MediaSession object from the SDP description: " << env.getResultMsg() << "\n";
            break;
        } else if (!scs.session->hasSubsessions()) {
            env << *rtspClient << "This session has no media subsessions (i.e., no \"m=\" lines)\n";
            break;
        }

        // Then, create and set up our data source objects for the session. We do this by iterating over the session's 'subsessions',
        // calling "MediaSubsession::initiate()", and then sending a RTSP "SETUP" command, on each one.
        // (Each 'subsession' will have its own data source.)
        scs.iter = new MediaSubsessionIterator(*scs.session);
        setupNextSubsession(rtspClient);
        return;
    } while (0);

    // An unrecoverable error occurred with this stream.
    shutdownStream(rtspClient);
}

// By default, we request that the server stream its data using RTP/UDP.

// If, instead, you want to request that the server stream via RTP-over-TCP, change the following to True:

#define REQUEST_STREAMING_OVER_TCP False

void setupNextSubsession(RTSPClient* rtspClient)
{
    UsageEnvironment& env = rtspClient->envir(); // alias
    StreamClientState& scs = ((ourRTSPClient*)rtspClient)->scs; // alias

    scs.subsession = scs.iter->next();
    if (scs.subsession != NULL) {
        if (!scs.subsession->initiate()) {
            env << *rtspClient << "Failed to initiate the \"" << *scs.subsession << "\" subsession: " << env.getResultMsg() << "\n";
            setupNextSubsession(rtspClient); // give up on this subsession; go to the next one
        } else {
            env << *rtspClient << "Initiated the \"" << *scs.subsession << "\" subsession (";
            if (scs.subsession->rtcpIsMuxed()) {
                env << "client port " << scs.subsession->clientPortNum();
            } else {
                env << "client ports " << scs.subsession->clientPortNum() << "-" << scs.subsession->clientPortNum() + 1;
            }
            env << ")\n";

            // Continue setting up this subsession, by sending a RTSP "SETUP" command:
            rtspClient->sendSetupCommand(*scs.subsession, continueAfterSETUP, False, REQUEST_STREAMING_OVER_TCP);
        }
        return;
    }

    // We've finished setting up all of the subsessions. Now, send a RTSP "PLAY" command to start the streaming:
    if (scs.session->absStartTime() != NULL) {
        // Special case: The stream is indexed by 'absolute' time, so send an appropriate "PLAY" command:
        rtspClient->sendPlayCommand(*scs.session, continueAfterPLAY, scs.session->absStartTime(), scs.session->absEndTime());
    } else {
        scs.duration = scs.session->playEndTime() - scs.session->playStartTime();
        rtspClient->sendPlayCommand(*scs.session, continueAfterPLAY);
    }
}

void continueAfterSETUP(RTSPClient* rtspClient, int resultCode, char* resultString)
{
    do {
        UsageEnvironment& env = rtspClient->envir(); // alias
        StreamClientState& scs = ((ourRTSPClient*)rtspClient)->scs; // alias

        if (resultCode != 0) {
            env << *rtspClient << "Failed to set up the \"" << *scs.subsession << "\" subsession: " << resultString << ", resultCode: " << resultCode << "\n";
            break;
        }

        env << *rtspClient << "Set up the \"" << *scs.subsession << "\" subsession (";
        if (scs.subsession->rtcpIsMuxed()) {
            env << "client port " << scs.subsession->clientPortNum();
        } else {
            env << "client ports " << scs.subsession->clientPortNum() << "-" << scs.subsession->clientPortNum() + 1;
        }
        env << ")\n";

        // Having successfully setup the subsession, create a data sink for it, and call "startPlaying()" on it.
        // (This will prepare the data sink to receive data; the actual flow of data from the client won't start happening until later,
        // after we've sent a RTSP "PLAY" command.)
        scs.subsession->sink = DummySink::createNew(env, *scs.subsession, rtspClient->url());
        // perhaps use your own custom "MediaSink" subclass instead
        if (scs.subsession->sink == NULL) {
            env << *rtspClient << "Failed to create a data sink for the \"" << *scs.subsession
                << "\" subsession: " << env.getResultMsg() << "\n";
            break;
        }

        env << *rtspClient << "Created a data sink for the \"" << *scs.subsession << "\" subsession\n";
        scs.subsession->miscPtr = rtspClient; // a hack to let subsession handler functions get the "RTSPClient" from the subsession
        scs.subsession->sink->startPlaying(*(scs.subsession->readSource()), subsessionAfterPlaying, scs.subsession);
        // Also set a handler to be called if a RTCP "BYE" arrives for this subsession:
        if (scs.subsession->rtcpInstance() != NULL) {
            scs.subsession->rtcpInstance()->setByeWithReasonHandler(subsessionByeHandler, scs.subsession);
        }
    } while (0);
    delete[] resultString;

    // Set up the next subsession, if any:
    setupNextSubsession(rtspClient);
}

void continueAfterPLAY(RTSPClient* rtspClient, int resultCode, char* resultString)
{
    Boolean success = False;

    do {
        UsageEnvironment& env = rtspClient->envir(); // alias
        StreamClientState& scs = ((ourRTSPClient*)rtspClient)->scs; // alias

        if (resultCode != 0) {
            env << *rtspClient << "Failed to start playing session: " << resultString << ", resultCode: " << resultCode << "\n";
            break;
        }

        // Set a timer to be handled at the end of the stream's expected duration (if the stream does not already signal its end
        // using a RTCP "BYE"). This is optional. If, instead, you want to keep the stream active - e.g., so you can later
        // 'seek' back within it and do another RTSP "PLAY" - then you can omit this code.
        // (Alternatively, if you don't want to receive the entire stream, you could set this timer for some shorter value.)
        if (scs.duration > 0) {
            unsigned const delaySlop = 2; // number of seconds extra to delay, after the stream's expected duration. (This is optional.)
            scs.duration += delaySlop;
            unsigned uSecsToDelay = (unsigned)(scs.duration * 1000000);
            scs.streamTimerTask = env.taskScheduler().scheduleDelayedTask(uSecsToDelay, (TaskFunc*)streamTimerHandler, rtspClient);
        }

        env << *rtspClient << "Started playing session";
        if (scs.duration > 0) {
            env << " (for up to " << scs.duration << " seconds)";
        }
        env << "...\n";

        success = True;
    } while (0);
    delete[] resultString;

    if (!success) {
        // An unrecoverable error occurred with this stream.
        shutdownStream(rtspClient);
    }
}

// Implementation of the other event handlers:

void subsessionAfterPlaying(void* clientData)
{
    MediaSubsession* subsession = (MediaSubsession*)clientData;
    RTSPClient* rtspClient = (RTSPClient*)(subsession->miscPtr);

    // Begin by closing this subsession's stream:
    Medium::close(subsession->sink);
    subsession->sink = NULL;

    // Next, check whether *all* subsessions' streams have now been closed:
    MediaSession& session = subsession->parentSession();
    MediaSubsessionIterator iter(session);
    while ((subsession = iter.next()) != NULL) {
        if (subsession->sink != NULL) return; // this subsession is still active
    }

    // All subsessions' streams have now been closed, so shutdown the client:
    shutdownStream(rtspClient);
}

void subsessionByeHandler(void* clientData, char const* reason)
{
    MediaSubsession* subsession = (MediaSubsession*)clientData;
    RTSPClient* rtspClient = (RTSPClient*)subsession->miscPtr;
    UsageEnvironment& env = rtspClient->envir(); // alias

    env << *rtspClient << "Received RTCP \"BYE\"";
    if (reason != NULL) {
        env << " (reason:\"" << reason << "\")";
        delete[] reason;
    }
    env << " on \"" << *subsession << "\" subsession\n";

    // Now act as if the subsession had closed:
    subsessionAfterPlaying(subsession);
}

void streamTimerHandler(void* clientData)
{
    ourRTSPClient* rtspClient = (ourRTSPClient*)clientData;
    StreamClientState& scs = rtspClient->scs; // alias

    scs.streamTimerTask = NULL;

    // Shut down the stream:
    shutdownStream(rtspClient);
}

void shutdownStream(RTSPClient* rtspClient, int exitCode)
{
    UsageEnvironment& env = rtspClient->envir(); // alias
    StreamClientState& scs = ((ourRTSPClient*)rtspClient)->scs; // alias

    // First, check whether any subsessions have still to be closed:
    if (scs.session != NULL) {
        Boolean someSubsessionsWereActive = False;
        MediaSubsessionIterator iter(*scs.session);
        MediaSubsession* subsession;

        while ((subsession = iter.next()) != NULL) {
            if (subsession->sink != NULL) {
                Medium::close(subsession->sink);
                subsession->sink = NULL;

                if (subsession->rtcpInstance() != NULL) {
                    subsession->rtcpInstance()->setByeHandler(NULL, NULL); // in case the server sends a RTCP "BYE" while handling "TEARDOWN"
                }

                someSubsessionsWereActive = True;
            }
        }

        if (someSubsessionsWereActive) {
            // Send a RTSP "TEARDOWN" command, to tell the server to shutdown the stream.
            // Don't bother handling the response to the "TEARDOWN".
            rtspClient->sendTeardownCommand(*scs.session, NULL);
        }
    }

    env << *rtspClient << "Closing the stream.\n";
    Medium::close(rtspClient);
    // Note that this will also cause this stream's "StreamClientState" structure to get reclaimed.

    if (--rtspClientCount == 0) {
        // The final stream has ended, so exit the application now.
        // (Of course, if you're embedding this code into your own application, you might want to comment this out,
        // and replace it with "eventLoopWatchVariable = 1;", so that we leave the LIVE555 event loop, and continue running "main()".)
        //exit(exitCode);
        eventLoopWatchVariable = 1;
    }
}

// Implementation of "ourRTSPClient":

ourRTSPClient* ourRTSPClient::createNew(UsageEnvironment& env, char const* rtspURL,
    int verbosityLevel, char const* applicationName, portNumBits tunnelOverHTTPPortNum)
{
    return new ourRTSPClient(env, rtspURL, verbosityLevel, applicationName, tunnelOverHTTPPortNum);
}

ourRTSPClient::ourRTSPClient(UsageEnvironment& env, char const* rtspURL,
    int verbosityLevel, char const* applicationName, portNumBits tunnelOverHTTPPortNum)
    : RTSPClient(env, rtspURL, verbosityLevel, applicationName, tunnelOverHTTPPortNum, -1)
{
}

ourRTSPClient::~ourRTSPClient()
{
}

// Implementation of "StreamClientState":
StreamClientState::StreamClientState()
    : iter(NULL), session(NULL), subsession(NULL), streamTimerTask(NULL), duration(0.0)
{
}

StreamClientState::~StreamClientState()
{
    delete iter;
    if (session != NULL) {
        // We also need to delete "session", and unschedule "streamTimerTask" (if set)
        UsageEnvironment& env = session->envir(); // alias

        env.taskScheduler().unscheduleDelayedTask(streamTimerTask);
        Medium::close(session);
    }
}

// Implementation of "DummySink":

// Even though we're not going to be doing anything with the incoming data, we still need to receive it.

// Define the size of the buffer that we'll use:

#define DUMMY_SINK_RECEIVE_BUFFER_SIZE 100000

DummySink* DummySink::createNew(UsageEnvironment& env, MediaSubsession& subsession, char const* streamId)
{
    return new DummySink(env, subsession, streamId);
}

DummySink::DummySink(UsageEnvironment& env, MediaSubsession& subsession, char const* streamId)
    : MediaSink(env), fSubsession(subsession)
{
    fStreamId = strDup(streamId);
    fReceiveBuffer = new u_int8_t[DUMMY_SINK_RECEIVE_BUFFER_SIZE];
}

DummySink::~DummySink()
{
    delete[] fReceiveBuffer;
    delete[] fStreamId;
}

void DummySink::afterGettingFrame(void* clientData, unsigned frameSize, unsigned numTruncatedBytes,
    struct timeval presentationTime, unsigned durationInMicroseconds)
{
    DummySink* sink = (DummySink*)clientData;
    sink->afterGettingFrame(frameSize, numTruncatedBytes, presentationTime, durationInMicroseconds);
}

// If you don't want to see debugging output for each received frame, then comment out the following line:

#define DEBUG_PRINT_EACH_RECEIVED_FRAME 1void DummySink::afterGettingFrame(unsigned frameSize, unsigned numTruncatedBytes, struct timeval presentationTime, unsigned /*durationInMicroseconds*/)

{// We've just received a frame of data. (Optionally) print out information about it:

#ifdef DEBUG_PRINT_EACH_RECEIVED_FRAMEif (fStreamId != NULL) envir() << "Stream \"" << fStreamId << "\"; ";envir() << fSubsession.mediumName() << "/" << fSubsession.codecName() << ":\tReceived " << frameSize << " bytes";if (numTruncatedBytes > 0) envir() << " (with " << numTruncatedBytes << " bytes truncated)";char uSecsStr[6 + 1]; // used to output the 'microseconds' part of the presentation timesprintf(uSecsStr, "%06u", (unsigned)presentationTime.tv_usec);envir() << ".\tPresentation time: " << (int)presentationTime.tv_sec << "." << uSecsStr;if (fSubsession.rtpSource() != NULL && !fSubsession.rtpSource()->hasBeenSynchronizedUsingRTCP()) {envir() << "!"; // mark the debugging output to indicate that this presentation time is not RTCP-synchronized}

#ifdef DEBUG_PRINT_NPTenvir() << "\tNPT: " << fSubsession.getNormalPlayTime(presentationTime);

#endifenvir() << "\n";

#endif// Then continue, to request the next frame of data:continuePlaying();

}Boolean DummySink::continuePlaying()

{if (fSource == NULL) return False; // sanity check (should not happen)// Request the next frame of data from our input source. "afterGettingFrame()" will get called later, when it arrives:fSource->getNextFrame(fReceiveBuffer, DUMMY_SINK_RECEIVE_BUFFER_SIZE, afterGettingFrame, this, onSourceClosure, this);return True;

}void shutdown_rtsp_client_stream()

{while (1) {std::this_thread::sleep_for(std::chrono::seconds(2));eventLoopWatchVariable = 1;break;}

}} // namespaceint test_live555_rtsp_client()

{// reference: live/testProgs/testRTSPClient.cpp// Begin by setting up our usage environment:TaskScheduler* scheduler = BasicTaskScheduler::createNew();UsageEnvironment* env = BasicUsageEnvironment::createNew(*scheduler);// We need at least one "rtsp://" URL argument:int argc = 2;char* argv[2] = { "test_live555_rtsp_client", "rtsp://184.72.239.149/vod/mp4://BigBuckBunny_175k.mov"};// There are argc-1 URLs: argv[1] through argv[argc-1]. Open and start streaming each one:for (int i = 1; i <= argc - 1; ++i) {openURL(*env, argv[0], argv[i]);}std::thread th(shutdown_rtsp_client_stream);// All subsequent activity takes place within the event loop:// This function call does not return, unless, at some point in time, "eventLoopWatchVariable" gets set to something non-zero.env->taskScheduler().doEventLoop(&eventLoopWatchVariable);th.join();// If you choose to continue the application past this point (i.e., if you comment out the "return 0;" statement above),// and if you don't intend to do anything more with the "TaskScheduler" and "UsageEnvironment" objects,// then you can also reclaim the (small) memory used by these objects by uncommenting the following code:env->reclaim(); env = NULL;delete scheduler; scheduler = NULL;return 0;

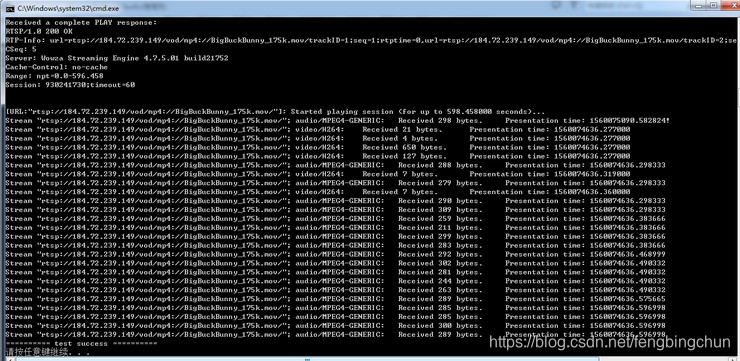

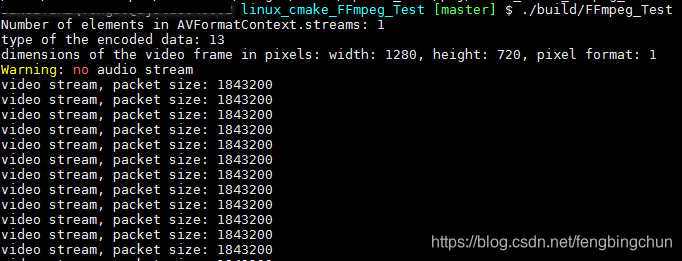

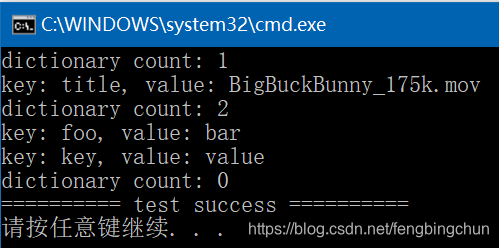

}执行结果如下:

GitHub: https://github.com/fengbingchun/OpenCV_Test

相关文章:

swift代理传值

比如我们这个场景,B要给A传值,那B就拥有代理属性, A就是B的代理,很简单吧!有代理那就离不开协议,所以第一步就是声明协议。在那里声明了?谁拥有代理属性就在那里声明,所以代码就是这…

重磅:腾讯正式开源图计算框架Plato,十亿级节点图计算进入分钟级时代

整理 | 唐小引 来源 | CSDN(ID:CSDNnews)腾讯开源进化 8 年,进入爆发期。 继刚刚连续开源 TubeMQ、Tencent Kona JDK、TBase、TKEStack 四款重点开源项目后,腾讯开源再次迎来重磅项目!北京时间 11 月 14 日…

类似ngnix的多进程监听用例

2019独角兽企业重金招聘Python工程师标准>>> 多进程监听适合于短连接,且连接间无交集的应用。前两天简单写了一个,在这里保存一下。 #include <sys/types.h>#include <stdarg.h>#include <signal.h>#include <unistd.h&…

今日头条李磊等最新论文:用于文本生成的核化贝叶斯Softmax

译者 | Raku 出品 | AI科技大本营(ID:rgznai100)摘要用于文本生成的神经模型需要在解码阶段具有适当词嵌入的softmax层,大多数现有方法采用每个单词单点嵌入的方式,但是一个单词可能具有多种意义,在不同的背景下&#…

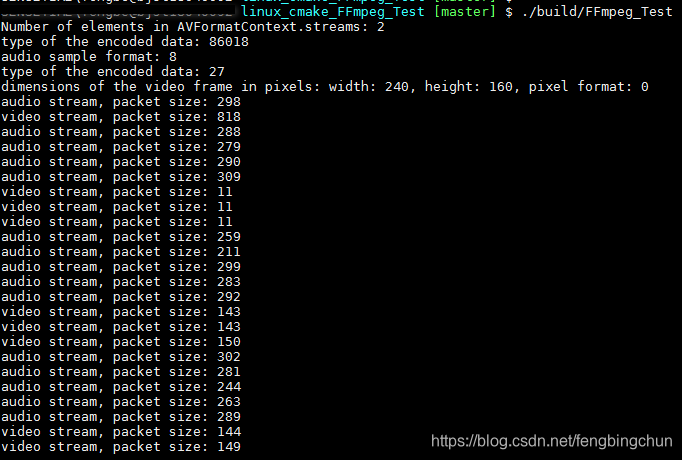

FFmpeg中RTSP客户端拉流测试代码

之前在https://blog.csdn.net/fengbingchun/article/details/91355410中给出了通过LIVE555实现拉流的测试代码,这里通过FFmpeg来实现,代码量远小于LIVE555,实现模块在libavformat。 在4.0及以上版本中,FFmpeg有了些变动ÿ…

虚拟机下运行linux通过nat模式与主机通信、与外网连接

首先:打开虚拟机的编辑菜单下的虚拟网络编辑器,选中VMnet8 NAT模式。通过NAT设置获取网关IP,通过DHCP获取可配置的IP区间。同时,将虚拟机的虚拟机菜单的设置选项中的网络适配器改为NAT模式。即可! 打开linux࿰…

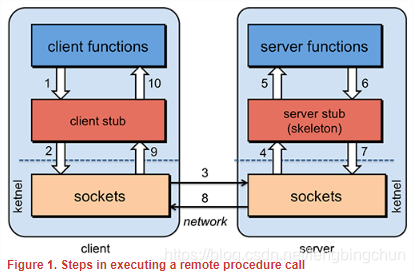

远程过程调用RPC简介

RPC(Remote Procedure Call, 远程过程调用):是一种通过网络从远程计算机程序上请求服务,而不需要了解底层网络技术的思想。 RPC是一种技术思想而非一种规范或协议,常见RPC技术和框架有: (1). 应用级的服务框架:阿里的…

iOS开发:沙盒机制以及利用沙盒存储字符串、数组、字典等数据

iOS开发:沙盒机制以及利用沙盒存储字符串、数组、字典等数据 1、初识沙盒:(1)、存储在内存中的数据,程序关闭,内存释放,数据就会丢失,这种数据是临时的。要想数据永久保存,将数据保存成文件&am…

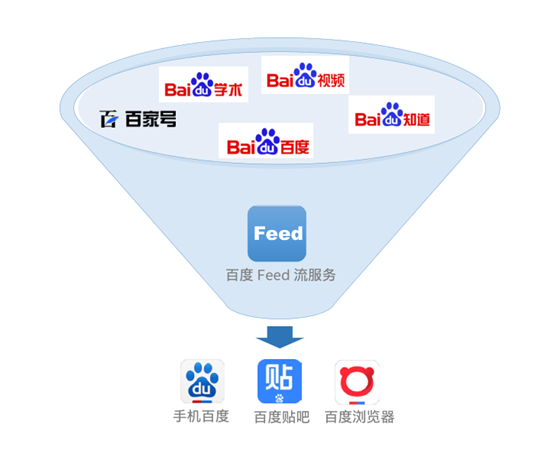

支撑亿级用户“刷手机”,百度Feed流背后的新技术装备有多牛?

导读:截止到2018年底,我国网民使用手机上网的比例已高达98.6%,移动互联网基本全方位覆盖。智能手机的操作模式让我们更倾向于通过简单的“划屏”动作,相对于传统的文本交互方式来获取信息,用户更希望一拿起手机就能刷到…

玩转高性能超猛防火墙nf-HiPAC

中华国学,用英文讲的,稀里糊涂听了个大概,不得不佩服西方人的缜密的逻辑思维,竟然把玄之又玄的道家思想说的跟牛顿定律一般,佩服。归家,又收到了邮件,还是关于nf-hipac的,不知不觉就…

ios 沙盒 plist 数据的读取和存储

plist 只能存储基本的数据类型 和 array 字典 [objc] view plaincopy - (void)saveArray { // 1.获得沙盒根路径 NSString *home NSHomeDirectory(); // 2.document路径 NSString *docPath [home stringByAppendingPathComponent:"Document…

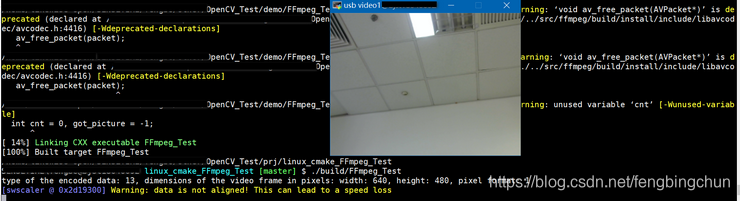

FFmpeg实现获取USB摄像头视频流测试代码

通过USB摄像头(注:windows7/10下使用内置摄像头,linux下接普通的usb摄像头(Logitech))获取视频流用到的模块包括avformat和avdevice。头文件仅include avdevice.h即可,因为avdevice.h中会include avformat.h。libavdevice库是libavformat的一…

重磅!明略发布数据中台战略和三大解决方案

11月15日,明略科技在上海举办以“FASTER 聚变增长新动力”为主题的2019数据智能峰会,宣布“打造智能时代的企业中台”新战略,同时推出了两大新产品“新一代数据中台”和“营销智能平台”,以及三大行业解决方案,分别是“…

Android程序完全退出的三种方法

1. Dalvik VM的本地方法 android.os.Process.killProcess(android.os.Process.myPid()) //获取PID,目前获取自己的也只有该API,否则从/proc中自己的枚举其他进程吧,不过要说明的是,结束其他进程不一定有权限,不然就…

FFmpeg通过摄像头实现对视频流进行解码并显示测试代码(旧接口)

这里通过USB摄像头(注:windows7/10下使用内置摄像头,linux下接普通的usb摄像头(Logitech))获取视频流,然后解码,最后再用opencv显示。用到的模块包括avformat、avcodec和avdevice。libavdevice库是libavformat的一个补充库(comple…

IOS数据存储之文件沙盒存储

前言: 之前学习了数据存储的NSUserDefaults,归档和解档,对于项目开发中如果要存储一些文件,比如图片,音频,视频等文件的时候就需要用到文件存储了。文件沙盒存储主要存储非机密数据,大的数据。 …

剖析Focal Loss损失函数: 消除类别不平衡+挖掘难分样本 | CSDN博文精选

作者 | 图像所浩南哥来源 | CSDN博客论文名称:《 Focal Loss for Dense Object Detection 》论文下载:https://arxiv.org/pdf/1708.02002.pdf论文代码:https://github.com/facebookresearch/Detectron/tree/master/configs/12_2017_baselines…

windows下mysql开启慢查询

mysql在windows系统中的配置文件一般是my.ini,我的路径是c:\mysql\my.ini,你根据自己安装mysql路径去查找[mysqld]#The TCP/IP Port the MySQL Server will listen onport3306#开启慢查询log-slow-queries E:\Program Files\MySQL\MySQL Server 5.5\mysql_slow_query.loglong_…

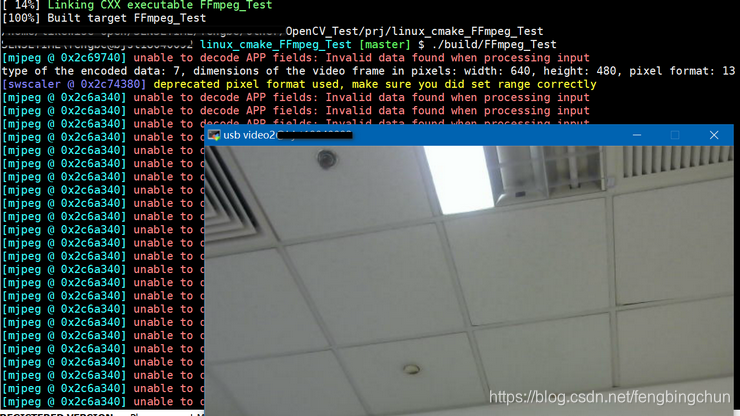

FFmpeg通过摄像头实现对视频流进行解码并显示测试代码(新接口)

在https://blog.csdn.net/fengbingchun/article/details/93975325 中给出了通过旧接口即FFmpeg中已废弃的接口实现通过摄像头获取视频流然后解码并显示的测试代码,这里通过使用FFmpeg中的新接口再次实现通过的功能,主要涉及到的接口函数包括:…

iOS经典讲解之获取沙盒文件路径写入和读取简单对象

#import "RootViewController.h" interface RootViewController () end 实现文件: implementation RootViewController - (void)viewDidLoad { [super viewDidLoad]; [self path]; [self writeFile]; [self readingFi…

Google最新论文:Youtube视频推荐如何做多目标排序

作者 | 深度传送门来源 | 深度传送门(ID:deep_deliver)导读:本文是“深度推荐系统”专栏的第十五篇文章,这个系列将介绍在深度学习的强力驱动下,给推荐系统工业界所带来的最前沿的变化。本文主要介绍下Google在RecSys …

Jmeter 笔记

Apache JMeter是Apache组织开发的基于Java的压力测试工具。用于对软件做压力测试,它最初被设计用于Web应用测试但后来扩展到其他测试领域。 它可以用于测试静态和动态资源例如静态文件、Java 小服务程序、CGI 脚本、Java 对象、数据库, FTP 服务器&#…

王贻芳院士:为什么中国要探究中微子实验?

演讲嘉宾 | 王贻芳(中国科学院院士、中科院高能物理研究所所长)整理 | 德状出品 | AI科技大本营(ID:rgznai100)日前,在2019腾讯科学WE大会期间,中国科学院院士、高能物理研究所所长王贻芳分享了中微子与光电…

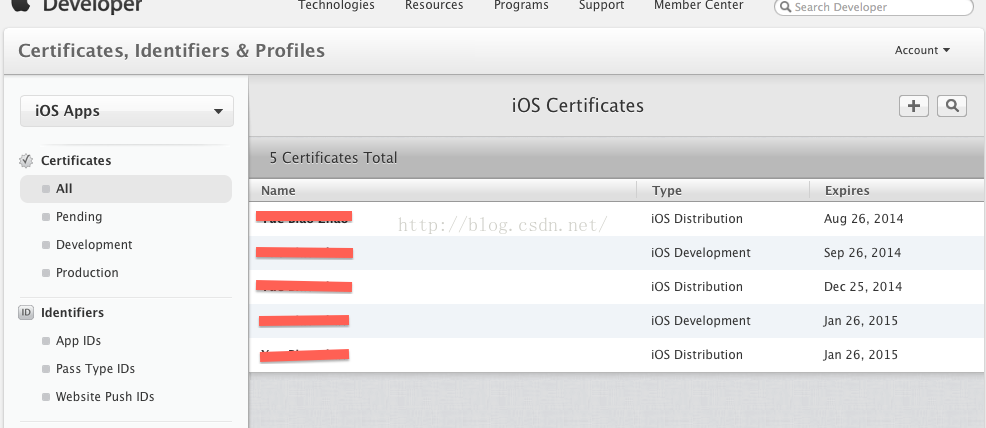

一个苹果证书供多台电脑开发使用——导出p12文件

摘要 在苹果开发者网站申请的证书,是授权mac设备的开发或者发布的证书,这意味着一个设备对应一个证书,但是99美元账号只允许生成3个发布证书,两个开发证书,这满足不了多mac设备的使用,使用p12文件可以解决这…

FFmpeg中AVDictionary介绍

FFmpeg中的AVDictionary是一个结构体,简单的key/value存储,经常使用AVDictionary设置或读取内部参数,声明如下,具体实现在libavutil模块中的dict.c/h,提供此结构体是为了与libav兼容,但它实现效率低下&…

RocketMQ3.2.2生产者发送消息自动创建Topic队列数无法超过4个

问题现象RocketMQ3.2.2版本,测试时尝试发送消息时自动创建Topic,设置了队列数量为8:producer.setDefaultTopicQueueNums(8);同时设置broker服务器的配置文件broker.properties:defaultTopicQueueNums16但实际创建后从控制台及后台…

iOS各种宏定义

#ifndef MacroDefinition_h #define MacroDefinition_h //************************ 获取设备屏幕尺寸********************************************** //宽度 #define SCREEN_WIDTH [UIScreen mainScreen].bounds.size.width //高度 #define SCREENH_HEIGHT [UIScree…

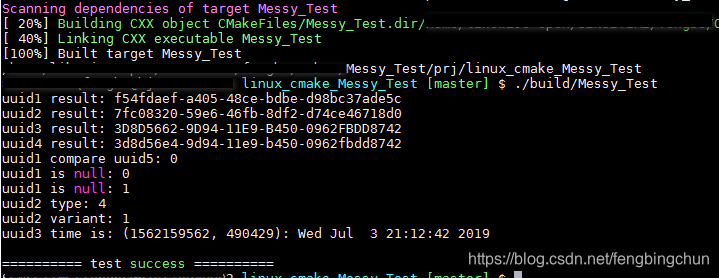

开源库libuuid简介及使用

libuuid是一个开源的用于生成UUID(Universally Unique Identifier,通用唯一标识符)的库,它的源码可从https://sourceforge.net/projects/libuuid/ 下载,最新版本为1.0.3,更新于2013年4月27日,此库仅支持在类Linux下编译…

深度学习会议论文不好找?这个ConfTube网站全都有

BDTC大会官网:https://t.csdnimg.cn/q4TY作者 | 刘畅 出品 | AI科技大本营(ID:rgznai1000)最近跟身边的硕士生、博士生聊天,发现有一个共同话题,大家都想要知道哪款产品能防止掉头发?养发育发已经成了茶余饭…

Java用for循环Map

为什么80%的码农都做不了架构师?>>> 根据JDK5的新特性,用For循环Map,例如循环Map的Key for(String dataKey : paraMap.keySet()) { System.out.println(dataKey ); } 注意的是,paraMap 是怎么样定义的,如果是简单的Map paraMap new …