Hive-1.2.0 Study Notes (1): Installation and Configuration

Lu Chunli's work notes: writing things down beats relying on memory.

Download Hive: http://hive.apache.org/index.html

Hive is a data warehouse tool built on Hadoop. It provides SQL query capability and translates SQL statements into MapReduce jobs that process data stored in HDFS.

MySQL Installation

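Hive can run in three modes:

1. Embedded mode: by default, Hive stores its metadata in an embedded Derby database, which allows only one session at a time and is only suitable for simple testing;

2. Local mode: the metadata is stored in a separate database on the local machine (for example MySQL), which supports multiple sessions and multiple users;

3. Remote mode: when there are many Hive clients, installing a MySQL server on every client is redundant and wasteful. In that case you can go one step further and move MySQL out as well, keeping the metadata in a separate, remote MySQL service.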

1. Embedded mode

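If Hive is run directly as described above, without any further configuration, it runs in embedded mode and stores its metadata in a Derby database (metadata is information that describes other data, similar to MySQL's information_schema database).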

For problems with storing metadata in Derby, see: http://luchunli.blog.51cto.com/2368057/1718422

The relevant metastore properties in hive-default.xml:

```xml
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:derby:;databaseName=metastore_db;create=true</value>
    <description>JDBC connect string for a JDBC metastore</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>org.apache.derby.jdbc.EmbeddedDriver</value>
    <description>Driver class name for a JDBC metastore</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>APP</value>
    <description>username to use against metastore database</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>mine</value>
    <description>password to use against metastore database</description>
  </property>
</configuration>
```

Note: Derby also has a network (client/server) mode, but MySQL is far more common in real projects, so the local mode here uses MySQL.
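Before switching to MySQL, it can help to check which metastore a configuration file actually points at. The Python sketch below (standard library only; `load_hive_properties` and `metastore_backend` are hypothetical helpers, not part of Hive) reads `<property>` entries like the ones above and roughly classifies the `javax.jdo.option.ConnectionURL` value by its JDBC prefix.

```python
import xml.etree.ElementTree as ET

def load_hive_properties(xml_text):
    """Collect <property><name>/<value> pairs from a Hadoop/Hive-style config XML."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        if name:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props

def metastore_backend(props):
    """Rough classification of the metastore database from its JDBC URL (hypothetical helper)."""
    url = props.get("javax.jdo.option.ConnectionURL", "")
    if url.startswith("jdbc:derby://"):
        return "derby-network"   # Derby client/server mode
    if url.startswith("jdbc:derby:"):
        return "derby-embedded"  # Hive's default embedded metastore
    if url.startswith("jdbc:mysql://"):
        return "mysql"
    return "unknown"
```

For example, `metastore_backend(load_hive_properties(open("hive-site.xml").read()))` returns `"derby-embedded"` for the defaults shown above and `"mysql"` once the MySQL settings later in this note are in place.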

2. Installing MySQL

MySQL version 5.6.17

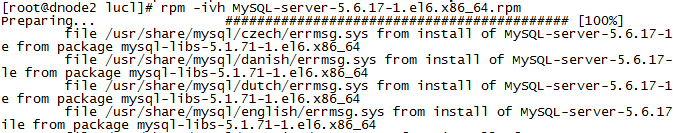

```
[root@nnode lucl]# rpm -ivh MySQL-server-5.6.17-1.el6.x86_64.rpm
```

Because of a version conflict, first remove the previously installed MySQL 5.1.71 libraries:

```
[root@nnode lucl]# yum -y remove mysql-libs-5.1.71*
```

After that, MySQL installs without problems:

```
[root@nnode lucl]# rpm -ivh MySQL-server-5.6.17-1.el6.x86_64.rpm
[root@nnode lucl]# rpm -ivh MySQL-client-5.6.17-1.el6.x86_64.rpm
```

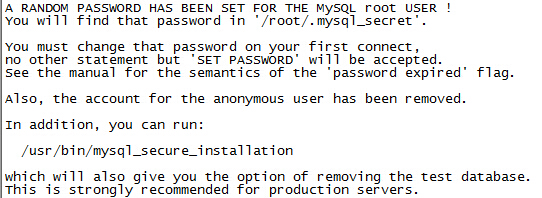

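Note: after MySQL is installed from the RPM packages, a random password for the root user is generated and written to a password file (/root/.mysql_secret).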
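Because the initial root password is random, a scripted install has to read it back from that file. A minimal Python sketch, assuming the MySQL 5.6 RPM format printed by `cat /root/.mysql_secret` in the verification step (a comment ending in `(local time):` followed by the password); `read_mysql_secret` is a hypothetical helper name:

```python
import re

def read_mysql_secret(text):
    """Return the random root password from the contents of /root/.mysql_secret.

    Assumes the MySQL 5.6 RPM layout '... (local time): <password>', where the
    password may follow on the same line or on the next one.
    """
    match = re.search(r"\(local time\):\s*(\S+)", text)
    if match is None:
        raise ValueError("no random password found in .mysql_secret contents")
    return match.group(1)
```

Usage: `read_mysql_secret(open("/root/.mysql_secret").read())`. As the session below shows, this password only gets you through the first login; MySQL then refuses other statements until `SET PASSWORD` is run.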

Directories after installation:

| Directory | Purpose | Notes |
| --- | --- | --- |
| /usr/bin | Client programs and scripts | |
| /usr/sbin | The mysqld server | |
| /var/lib/mysql | Data files | |
| /usr/my.cnf | Configuration file | |

4. Verifying the installation

```
[root@nnode lucl]# service mysql start
Starting MySQL.. SUCCESS!
[root@nnode lucl]# service mysql status
SUCCESS! MySQL running (4564)
[root@nnode lucl]# cat /root/.mysql_secret
# The random password set for the root user at Tue Jan 19 15:20:09 2016 (local time):
IhuKPeHDw5Ro0tW5
[root@nnode lucl]# mysql -uroot -pIhuKPeHDw5Ro0tW5
Warning: Using a password on the command line interface can be insecure.
Welcome to the MySQL monitor. Commands end with ; or \g.
Your MySQL connection id is 1
Server version: 5.6.17

Copyright (c) 2000, 2014, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective
owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;
ERROR 1820 (HY000): You must SET PASSWORD before executing this statement
mysql> set password=password("root");
Query OK, 0 rows affected (0.00 sec)

mysql> flush privileges;
Query OK, 0 rows affected (0.00 sec)

mysql> exit
Bye
[root@nnode lucl]# mysql -uroot -proot
Warning: Using a password on the command line interface can be insecure.
Welcome to the MySQL monitor. Commands end with ; or \g.
Your MySQL connection id is 2
Server version: 5.6.17 MySQL Community Server (GPL)

Copyright (c) 2000, 2014, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective
owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;
+--------------------+
| Database           |
+--------------------+
| information_schema |
| mysql              |
| performance_schema |
| test               |
+--------------------+
4 rows in set (0.00 sec)

mysql> exit;
Bye
```

5. Editing the configuration file my.cnf

Note: this step is optional; the default configuration also works.

```ini
[mysql]
port=3306
socket=/var/lib/mysql/mysql.sock

[mysqld]
server-id=117
innodb_flush_log_at_trx_commit=2
port=3306
socket=/var/lib/mysql/mysql.sock
slave-skip-errors=all
lower_case_table_names=1
max_connections=300
innodb_file_per_table=1
datadir=/var/lib/mysql

[mysqldump]
quick
max_allowed_packet = 16M
```

Note: `service mysql start` actually starts the MySQL server through mysqld_safe, which runs it as the mysql user by default, so the binary log directory log-bin must be owned by mysql:mysql.

```
[root@nnode mysql]# chown -R mysql:mysql log-bin/
```

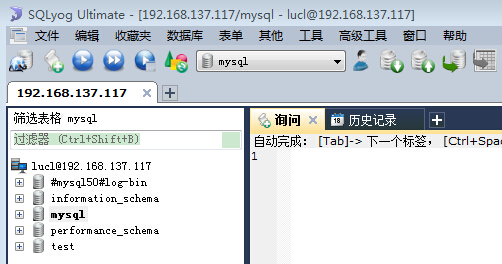
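To double-check which values each option group ([mysql], [mysqld], [mysqldump]) will receive, the INI-like my.cnf can be read with Python's configparser. A small sketch under that assumption; `load_my_cnf` is a hypothetical helper, and it does not interpret MySQL-specific directives such as `!include`:

```python
import configparser

def load_my_cnf(text):
    """Parse my.cnf text into a ConfigParser (a sketch; MySQL's own parser differs in details)."""
    parser = configparser.ConfigParser(
        allow_no_value=True,   # bare flags such as "quick" under [mysqldump]
        strict=False,          # tolerate a repeated option; the later value wins, as in MySQL
        interpolation=None,    # treat '%' literally
    )
    parser.read_string(text)
    return parser
```

For example, `load_my_cnf(open("/usr/my.cnf").read()).getint("mysqld", "max_connections")` should return 300 for the file above.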

6. Enabling remote access

```
[root@nnode mysql]# mysql -uroot -proot
Warning: Using a password on the command line interface can be insecure.
Welcome to the MySQL monitor. Commands end with ; or \g.
Your MySQL connection id is 1
Server version: 5.6.17-log MySQL Community Server (GPL)

Copyright (c) 2000, 2014, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective
owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> use mysql;
Reading table information for completion of table and column names
You can turn off this feature to get a quicker startup with -A

Database changed
mysql> select host, user, password, password_expired from user;
+-----------+------+-------------------------------------------+------------------+
| host      | user | password                                  | password_expired |
+-----------+------+-------------------------------------------+------------------+
| localhost | root | *81F5E21E35407D884A6CD4A731AEBFB6AF209E1B | N                |
| nnode     | root | *6368C544225CD5B1670ACC7C0BFE1974F4221C85 | Y                |
| 127.0.0.1 | root | *6368C544225CD5B1670ACC7C0BFE1974F4221C85 | Y                |
| ::1       | root | *6368C544225CD5B1670ACC7C0BFE1974F4221C85 | Y                |
+-----------+------+-------------------------------------------+------------------+
4 rows in set (0.00 sec)
```

Note: the host column indicates which hosts may connect to the server as that user; `%` means the account can connect from any host. Create a user lucl that can connect from anywhere and grant it all privileges:

```
mysql> CREATE USER 'lucl'@'%' IDENTIFIED BY 'lucl';
Query OK, 0 rows affected (0.04 sec)

mysql> GRANT ALL PRIVILEGES ON *.* TO lucl@'%';
Query OK, 0 rows affected (0.00 sec)

mysql> flush privileges;
Query OK, 0 rows affected (0.00 sec)

mysql> select host, user, password, password_expired from user;
+-----------+------+-------------------------------------------+------------------+
| host      | user | password                                  | password_expired |
+-----------+------+-------------------------------------------+------------------+
| localhost | root | *81F5E21E35407D884A6CD4A731AEBFB6AF209E1B | N                |
| nnode     | root | *6368C544225CD5B1670ACC7C0BFE1974F4221C85 | Y                |
| 127.0.0.1 | root | *6368C544225CD5B1670ACC7C0BFE1974F4221C85 | Y                |
| ::1       | root | *6368C544225CD5B1670ACC7C0BFE1974F4221C85 | Y                |
| %         | lucl | *A5DC323CE3368879D65443454066316A01521C95 | N                |
+-----------+------+-------------------------------------------+------------------+
5 rows in set (0.00 sec)
```
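Which row of mysql.user a login uses depends on matching the client host against the host column. The Python sketch below is a simplified model of that rule, based on MySQL's documented `%` and `_` wildcards; the real server also sorts rows by host specificity and considers anonymous accounts, which this ignores. `host_matches` and `pick_account` are hypothetical helpers.

```python
import re

def host_matches(pattern, client_host):
    """Simplified MySQL host-column match: '%' = any run of characters, '_' = one character."""
    regex = "".join(
        ".*" if ch == "%" else "." if ch == "_" else re.escape(ch) for ch in pattern
    )
    return re.fullmatch(regex, client_host, flags=re.IGNORECASE) is not None

def pick_account(rows, user, client_host):
    """Return the (host, user) row a login would use, trying literal hosts before wildcards.

    Approximation only: MySQL orders mysql.user rows by host specificity, then user.
    """
    matches = [row for row in rows if row[1] == user and host_matches(row[0], client_host)]
    matches.sort(key=lambda row: "%" in row[0] or "_" in row[0])  # False (literal) sorts first
    return matches[0] if matches else None
```

With the five rows above, a root login from another machine finds no matching row, because root only exists for localhost, nnode, 127.0.0.1 and ::1, while lucl matches the `%` row from any host.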

At this point, MySQL is installed and configured.

7. Preparing the Hive user

Create a database for Hive in MySQL, along with the user Hive will use to connect to MySQL.

```
[hadoop@nnode conf]$ mysql -uroot -proot
Warning: Using a password on the command line interface can be insecure.
Welcome to the MySQL monitor. Commands end with ; or \g.
Your MySQL connection id is 5
Server version: 5.6.17 MySQL Community Server (GPL)

Copyright (c) 2000, 2014, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective
owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> create database hive;
Query OK, 1 row affected (0.01 sec)

mysql> CREATE USER 'hive'@'localhost' IDENTIFIED BY 'hive';
Query OK, 0 rows affected (0.01 sec)

mysql> CREATE USER 'hive'@'%' IDENTIFIED BY 'hive';
Query OK, 0 rows affected (0.00 sec)

mysql> GRANT ALL PRIVILEGES ON hive.* TO hive@'localhost';
Query OK, 0 rows affected (0.00 sec)

mysql> GRANT ALL PRIVILEGES ON hive.* TO hive@'%';
Query OK, 0 rows affected (0.00 sec)

mysql> flush privileges;
Query OK, 0 rows affected (0.00 sec)

mysql> exit
Bye
```
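The statements in this step follow a fixed pattern (one database, the same account for `localhost` and `%`, privileges limited to that database), so they can be generated for other environments. A Python sketch; `hive_metastore_setup_sql` is a hypothetical helper, and because the names and password are interpolated directly into SQL, it is only meant for trusted, simple values:

```python
def hive_metastore_setup_sql(db, user, password, hosts=("localhost", "%")):
    """Build the MySQL statements that create a metastore database and its user.

    Values are inserted verbatim (no quoting/escaping), so use simple trusted names only.
    """
    stmts = [f"CREATE DATABASE {db};"]
    for host in hosts:
        stmts.append(f"CREATE USER '{user}'@'{host}' IDENTIFIED BY '{password}';")
    for host in hosts:
        # Privileges are limited to the metastore database, as in the session above.
        stmts.append(f"GRANT ALL PRIVILEGES ON {db}.* TO '{user}'@'{host}';")
    stmts.append("FLUSH PRIVILEGES;")
    return stmts
```

For example, `print("\n".join(hive_metastore_setup_sql("hive", "hive", "hive")))` prints the six statements, which can then be piped into `mysql -uroot -p`.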

Check the privileges of the newly created hive user:

```
[hadoop@nnode conf]$ mysql -uhive -phive
Warning: Using a password on the command line interface can be insecure.
Welcome to the MySQL monitor. Commands end with ; or \g.
Your MySQL connection id is 8
Server version: 5.6.17 MySQL Community Server (GPL)

Copyright (c) 2000, 2014, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective
owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show grants for hive@localhost;
+-------------------------------------------------------------------------------------------------------------+
| Grants for hive@localhost                                                                                   |
+-------------------------------------------------------------------------------------------------------------+
| GRANT USAGE ON *.* TO 'hive'@'localhost' IDENTIFIED BY PASSWORD '*4DF1D66463C18D44E3B001A8FB1BBFBEA13E27FC' |
| GRANT ALL PRIVILEGES ON `hive`.* TO 'hive'@'localhost'                                                      |
+-------------------------------------------------------------------------------------------------------------+
2 rows in set (0.00 sec)

mysql> show grants for hive@'%';
+-----------------------------------------------------------------------------------------------------+
| Grants for hive@%                                                                                   |
+-----------------------------------------------------------------------------------------------------+
| GRANT USAGE ON *.* TO 'hive'@'%' IDENTIFIED BY PASSWORD '*4DF1D66463C18D44E3B001A8FB1BBFBEA13E27FC' |
| GRANT ALL PRIVILEGES ON `hive`.* TO 'hive'@'%'                                                      |
+-----------------------------------------------------------------------------------------------------+
2 rows in set (0.00 sec)

mysql> exit;
Bye
```

Hive Environment Configuration

1. Extract the archive

```
[hadoop@nnode lucl]$ tar -xzv -f apache-hive-1.2.0-bin.tar.gz
# rename the directory
[hadoop@nnode lucl]$ mv apache-hive-1.2.0-bin/ hive-1.2.0
```

2. Configure the Hive environment variables

```
[hadoop@nnode ~]$ vim .bash_profile
# Hive
export HIVE_HOME=/lucl/hive-1.2.0
export PATH=$HIVE_HOME/bin:$PATH
```

3. Configure hive-env.sh

```
[hadoop@nnode conf]$ cp hive-env.sh.template hive-env.sh
[hadoop@nnode conf]$ vim hive-env.sh
export JAVA_HOME=/lucl/jdk1.7.0_80
export HADOOP_HOME=/lucl/hadoop-2.6.0
export HIVE_HOME=/lucl/hive-1.2.0
export HIVE_CONF_DIR=/lucl/hive-1.2.0/conf
```
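To check that hive-env.sh (and the .bash_profile entries above) define the variables Hive needs, the simple `export NAME=value` lines can be read with Python's shlex. A minimal sketch; `parse_exports`, `missing_vars` and the `REQUIRED` list are hypothetical, and no variable expansion is performed, so `$HIVE_HOME/bin:$PATH` stays literal:

```python
import shlex

# Variables set in hive-env.sh above (assumed to be the ones worth checking).
REQUIRED = ("JAVA_HOME", "HADOOP_HOME", "HIVE_HOME", "HIVE_CONF_DIR")

def parse_exports(script):
    """Collect literal `export NAME=value` assignments; $VARS are not expanded."""
    env = {}
    for line in script.splitlines():
        line = line.strip()
        if not line.startswith("export "):
            continue
        for token in shlex.split(line[len("export "):], comments=True):
            name, sep, value = token.partition("=")
            if sep:
                env[name] = value
    return env

def missing_vars(env):
    """Names from REQUIRED that are absent or empty in env."""
    return [name for name in REQUIRED if not env.get(name)]
```

For example, `missing_vars(parse_exports(open("hive-env.sh").read()))` should return an empty list for the file above.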

4. Configure hive-site.xml

```
[hadoop@nnode conf]$ cp hive-default.xml.template hive-site.xml
[hadoop@nnode conf]$ cat hive-site.xml
<?xml version="1.0" encoding="UTF-8" standalone="no"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <!-- WARNING!!! This file is auto generated for documentation purposes ONLY! -->
  <!-- WARNING!!! Any changes you make to this file will be ignored by Hive. -->
  <!-- WARNING!!! You must make your changes in hive-site.xml instead. -->
  <!-- Hive Execution Parameters -->
  <!-- empty -->
</configuration>
[hadoop@nnode conf]$
```

Then configure hive-site.xml as follows:

```xml
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://nnode:3306/hive?createDatabaseIfNotExist=true</value>
    <description>JDBC connect string for a JDBC metastore</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
    <description>Driver class name for a JDBC metastore</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>hive</value>
    <description>username to use against metastore database</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>hive</value>
    <description>password to use against metastore database</description>
  </property>
</configuration>
```
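Instead of editing the copied template by hand, hive-site.xml can also be generated. A sketch using Python's xml.etree.ElementTree (`ET.indent` needs Python 3.9+); `build_hive_site` and `METASTORE` are hypothetical names and the `<description>` elements are omitted. One benefit of building the XML programmatically is that characters such as `&` in a JDBC URL are escaped as `&amp;` automatically.

```python
import xml.etree.ElementTree as ET

def build_hive_site(props):
    """Render name/value pairs as a hive-site.xml <configuration> document."""
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value  # '&', '<' etc. are escaped on output
    ET.indent(root)  # pretty-print (Python 3.9+)
    header = '<?xml version="1.0" encoding="UTF-8" standalone="no"?>\n'
    return header + ET.tostring(root, encoding="unicode") + "\n"

# The metastore settings used in this note.
METASTORE = {
    "javax.jdo.option.ConnectionURL": "jdbc:mysql://nnode:3306/hive?createDatabaseIfNotExist=true",
    "javax.jdo.option.ConnectionDriverName": "com.mysql.jdbc.Driver",
    "javax.jdo.option.ConnectionUserName": "hive",
    "javax.jdo.option.ConnectionPassword": "hive",
}
```

Usage: `open("hive-site.xml", "w").write(build_hive_site(METASTORE))` in `$HIVE_HOME/conf`.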

5. Copy the MySQL JDBC driver jar into Hive's lib directory

```
[hadoop@nnode conf]$ cp /mnt/hgfs/Share/mysql-connector-java-5.1.27.jar $HIVE_HOME/lib/
```
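Without this jar in Hive's lib directory, the `com.mysql.jdbc.Driver` class configured in hive-site.xml cannot be loaded. A quick way to confirm the copy, as a Python sketch (`find_jars` is a hypothetical helper):

```python
from pathlib import Path

def find_jars(lib_dir, prefix):
    """List jar files in lib_dir whose names start with prefix, sorted by name."""
    return sorted(p.name for p in Path(lib_dir).glob(prefix + "*.jar"))
```

After the copy above, `find_jars("/lucl/hive-1.2.0/lib", "mysql-connector-java")` should return `['mysql-connector-java-5.1.27.jar']`.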

6. Run bin/hive

```
[hadoop@nnode hive-1.2.0]$ bin/hive

Logging initialized using configuration in jar:file:/usr/local/hive1.2.0/lib/hive-common-1.2.0.jar!/hive-log4j.properties
[ERROR] Terminal initialization failed; falling back to unsupported
java.lang.IncompatibleClassChangeError: Found class jline.Terminal, but interface was expected
	at jline.TerminalFactory.create(TerminalFactory.java:101)
	at jline.TerminalFactory.get(TerminalFactory.java:158)
	at jline.console.ConsoleReader.<init>(ConsoleReader.java:229)
	at jline.console.ConsoleReader.<init>(ConsoleReader.java:221)
	at jline.console.ConsoleReader.<init>(ConsoleReader.java:209)
	at org.apache.hadoop.hive.cli.CliDriver.setupConsoleReader(CliDriver.java:787)
	at org.apache.hadoop.hive.cli.CliDriver.executeDriver(CliDriver.java:721)
	at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:681)
	at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:621)
	at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
	at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
	at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
	at java.lang.reflect.Method.invoke(Method.java:606)
	at org.apache.hadoop.util.RunJar.run(RunJar.java:221)
	at org.apache.hadoop.util.RunJar.main(RunJar.java:136)
```

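Cause

Hive has upgraded to JLine2, but jline 0.9.94 still exists in the Hadoop lib directory.

Fix

Copy lib/jline-2.12.jar from the Hive installation directory into hadoop-2.6.0/share/hadoop/yarn/lib/, after renaming the old jline-0.9.94.jar there to a backup: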

```
[hadoop@nnode lucl]$ ll hive-1.2.0/lib/|grep jline
-rw-rw-r-- 1 hadoop hadoop 213854 Apr 30 2015 jline-2.12.jar
[hadoop@nnode lucl]$ ll hadoop-2.6.0/share/hadoop/yarn/lib/|grep jline
-rw-r--r-- 1 hadoop hadoop 87325 Nov 14 2014 jline-0.9.94.jar
[hadoop@nnode lucl]$ mv hadoop-2.6.0/share/hadoop/yarn/lib/jline-0.9.94.jar hadoop-2.6.0/share/hadoop/yarn/lib/jline-0.9.94.jar.backup
[hadoop@nnode lucl]$ cp hive-1.2.0/lib/jline-2.12.jar hadoop-2.6.0/share/hadoop/yarn/lib/
[hadoop@nnode lucl]$ ll hadoop-2.6.0/share/hadoop/yarn/lib/|grep jline
-rw-r--r-- 1 hadoop hadoop 87325 Nov 14 2014 jline-0.9.94.jar.backup
-rw-rw-r-- 1 hadoop
```
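The error comes from two jline major versions (0.x in Hadoop, 2.x in Hive) competing on the classpath. To spot the same situation on other nodes, a Python sketch can compare the jar file names in both lib directories, as listed by `ll` above; `jar_versions` and `jline_conflict` are hypothetical helpers, and names that do not end in `.jar`, such as the `.backup` copy, are ignored.

```python
import re

# <artifact>-<dotted numeric version>.jar, e.g. jline-0.9.94.jar -> ("jline", "0.9.94")
JAR_NAME = re.compile(r"^(?P<artifact>.+?)-(?P<version>\d+(?:\.\d+)*)\.jar$")

def jar_versions(names, artifact):
    """Sorted version tuples of jars named <artifact>-<version>.jar."""
    versions = []
    for name in names:
        m = JAR_NAME.match(name)
        if m and m.group("artifact") == artifact:
            versions.append(tuple(int(x) for x in m.group("version").split(".")))
    return sorted(versions)

def jline_conflict(hive_lib_names, yarn_lib_names):
    """True when YARN's lib still holds a jline with a lower major version than Hive's."""
    hive = jar_versions(hive_lib_names, "jline")
    yarn = jar_versions(yarn_lib_names, "jline")
    return bool(hive and yarn) and min(yarn)[0] < max(hive)[0]
```

Feeding it `os.listdir()` of `hive-1.2.0/lib` and `hadoop-2.6.0/share/hadoop/yarn/lib` should report `True` before the fix above and `False` after it.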

Run it again:

```
[hadoop@nnode lucl]$ hive-1.2.0/bin/hive

Logging initialized using configuration in jar:file:/usr/local/hive1.2.0/lib/hive-common-1.2.0.jar!/hive-log4j.properties
hive> exit;
[hadoop@nnode lucl]$
```

The Hive command line now starts correctly. Next, look at the hive database in MySQL to see which tables were created to store the metadata:

```
[root@nnode ~]# mysql -uhive -phive
Warning: Using a password on the command line interface can be insecure.
Welcome to the MySQL monitor. Commands end with ; or \g.
Your MySQL connection id is 25
Server version: 5.6.17 MySQL Community Server (GPL)

Copyright (c) 2000, 2014, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective
owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> use hive;
Reading table information for completion of table and column names
You can turn off this feature to get a quicker startup with -A

Database changed
mysql> show tables;
+---------------------------+
| Tables_in_hive            |
+---------------------------+
| bucketing_cols            |
| cds                       |
| columns_v2                |
| database_params           |
| dbs                       |
| func_ru                   |
| funcs                     |
| global_privs              |
| part_col_stats            |
| partition_key_vals        |
| partition_keys            |
| partition_params          |
| partitions                |
| roles                     |
| sd_params                 |
| sds                       |
| sequence_table            |
| serde_params              |
| serdes                    |
| skewed_col_names          |
| skewed_col_value_loc_map  |
| skewed_string_list        |
| skewed_string_list_values |
| skewed_values             |
| sort_cols                 |
| tab_col_stats             |
| table_params              |
| tbls                      |
| version                   |
+---------------------------+
29 rows in set (0.00 sec)

mysql> select * from dbs;
+-------+-----------------------+------------------------------------+---------+------------+------------+
| DB_ID | DESC                  | DB_LOCATION_URI                    | NAME    | OWNER_NAME | OWNER_TYPE |
+-------+-----------------------+------------------------------------+---------+------------+------------+
|     1 | Default Hive database | hdfs://cluster/user/hive/warehouse | default | public     | ROLE       |
+-------+-----------------------+------------------------------------+---------+------------+------------+
1 row in set (0.00 sec)

mysql> select * from tbls;
Empty set (0.00 sec)

mysql> select * from version;
+--------+----------------+-----------------------------------------+
| VER_ID | SCHEMA_VERSION | VERSION_COMMENT                         |
+--------+----------------+-----------------------------------------+
|      1 | 1.2.0          | Set by MetaStore hadoop@192.168.137.117 |
+--------+----------------+-----------------------------------------+
1 row in set (0.00 sec)

mysql> exit;
Bye
[root@nnode ~]#
```

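The dbs table shows that Hive's data storage location is actually on my HDFS cluster (hdfs://cluster/user/hive/warehouse). At this point the directory does not exist yet:

```
[hadoop@nnode local]$ hdfs dfs -ls -R hdfs://cluster/user/hive
ls: `hdfs://cluster/user/hive': No such file or directory
[hadoop@nnode local]$
```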
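The locations stored in the dbs and sds tables follow Hive's directory convention: the default database sits directly at `hive.metastore.warehouse.dir` (default `/user/hive/warehouse`) on the default filesystem, any other database gets a `<name>.db` subdirectory, and each managed table is a subdirectory named after the table. A Python sketch of that rule, assuming `fs.defaultFS` is `hdfs://cluster` as the dbs output suggests; `database_location` and `table_location` are hypothetical helpers.

```python
def database_location(default_fs, warehouse_dir, db):
    """Default location of a Hive database under the warehouse directory."""
    base = default_fs.rstrip("/") + "/" + warehouse_dir.strip("/")
    # Hive keeps database names in lower case; 'default' uses the warehouse root itself.
    return base if db.lower() == "default" else f"{base}/{db.lower()}.db"

def table_location(default_fs, warehouse_dir, db, table):
    """Default location of a managed table: one subdirectory per table."""
    return f"{database_location(default_fs, warehouse_dir, db)}/{table.lower()}"
```

For the student table created below, `table_location("hdfs://cluster", "/user/hive/warehouse", "default", "student")` gives `hdfs://cluster/user/hive/warehouse/student`, the same value that later appears in the sds table.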

Simple SQL operations

```
[hadoop@nnode local]$ hive1.2.0/bin/hive

Logging initialized using configuration in jar:file:/usr/local/hive1.2.0/lib/hive-common-1.2.0.jar!/hive-log4j.properties
hive> create table student(sno int, sname varchar(50));
OK
Time taken: 4.054 seconds
hive> show tables;
OK
student
Time taken: 0.772 seconds, Fetched: 1 row(s)
# the insert actually runs as a MapReduce job
hive> insert into student(sno, sname) values(100, 'zhangsan');
Query ID = hadoop_20151203234553_5f722629-2322-486b-97f2-f5b803e950ee
Total jobs = 3
Launching Job 1 out of 3
Number of reduce tasks is set to 0 since there's no reduce operator
Starting Job = job_1449147095699_0001, Tracking URL = http://nnode:8088/proxy/application_1449147095699_0001/
Kill Command = /usr/local/hadoop2.6.0/bin/hadoop job -kill job_1449147095699_0001
Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 0
2015-12-03 23:47:07,405 Stage-1 map = 0%, reduce = 0%
2015-12-03 23:48:07,554 Stage-1 map = 0%, reduce = 0%
2015-12-03 23:48:51,645 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.01 sec
MapReduce Total cumulative CPU time: 5 seconds 10 msec
Ended Job = job_1449147095699_0001
Stage-4 is selected by condition resolver.
Stage-3 is filtered out by condition resolver.
Stage-5 is filtered out by condition resolver.
Moving data to: hdfs://cluster/user/hive/warehouse/student/.hive-staging_hive_2015-12-03_23-45-53_234_4559428877079063192-1/-ext-10000
Loading data to table default.student
Table default.student stats: [numFiles=1, numRows=1, totalSize=13, rawDataSize=12]
MapReduce Jobs Launched:
Stage-Stage-1: Map: 1   Cumulative CPU: 5.63 sec   HDFS Read: 3697 HDFS Write: 84 SUCCESS
Total MapReduce CPU Time Spent: 5 seconds 630 msec
OK
Time taken: 183.786 seconds
# query the data
hive> select * from student;
OK
100 zhangsan
Time taken: 0.192 seconds, Fetched: 1 row(s)
hive> select sno from student;
OK
100
Time taken: 0.186 seconds, Fetched: 1 row(s)
hive> exit;
[hadoop@nnode local]$
```

Inspect HDFS:

```
# list the directory
[hadoop@nnode local]$ hdfs dfs -ls -R /user/hive/
drwxrw-rw- - hadoop hadoop 0 2015-12-03 23:42 /user/hive/warehouse
drwxrw-rw- - hadoop hadoop 0 2015-12-03 23:48 /user/hive/warehouse/student
-rwxrw-rw- 2 hadoop hadoop 13 2015-12-03 23:48 /user/hive/warehouse/student/000000_0
# view the file
[hadoop@nnode local]$ hdfs dfs -text /user/hive/warehouse/student/000000_0
100zhangsan
[hadoop@nnode local]$
```
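`hdfs dfs -text` prints `100zhangsan` with no visible separator because, for a text table created without a ROW FORMAT clause, Hive separates fields with the non-printing Ctrl-A character (`\x01`) and ends each row with a newline. That also accounts for the 13-byte file (`totalSize=13`): 3 bytes for `100`, 1 for the separator, 8 for `zhangsan` and 1 for the newline. A Python sketch of this layout; `encode_row` and `decode_row` are hypothetical helpers:

```python
FIELD_SEP = "\x01"  # Hive's default field delimiter for text tables (Ctrl-A)

def encode_row(fields):
    """Serialize one row the way a default-format Hive text table stores it."""
    return (FIELD_SEP.join(str(f) for f in fields) + "\n").encode("utf-8")

def decode_row(line):
    """Split one stored line back into its (string) field values."""
    return line.rstrip(b"\n").decode("utf-8").split(FIELD_SEP)

row = encode_row([100, "zhangsan"])
print(len(row))         # 13
print(decode_row(row))  # ['100', 'zhangsan']
```

On the cluster, piping the file through `cat -A` (`hdfs dfs -cat /user/hive/warehouse/student/000000_0 | cat -A`) should make the separator visible as `^A`.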

Confirm the metadata in MySQL

```
# table information
mysql> SELECT tbl_id, tbl_name, tbl_type, create_time, db_id, owner, sd_id from tbls;
+--------+----------+---------------+-------------+-------+--------+-------+
| tbl_id | tbl_name | tbl_type      | create_time | db_id | owner  | sd_id |
+--------+----------+---------------+-------------+-------+--------+-------+
|      1 | student  | MANAGED_TABLE |  1449157379 |     1 | hadoop |     1 |
+--------+----------+---------------+-------------+-------+--------+-------+
1 row in set (0.00 sec)
```
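The `create_time` column is a Unix timestamp in seconds. Converting it under the assumption that the cluster runs on UTC+8 (China Standard Time, not stated in the original) gives 2015-12-03 23:42:59, which lines up with the 23:42 timestamp of /user/hive/warehouse in the HDFS listing. A small Python sketch; `metastore_time` is a hypothetical helper:

```python
from datetime import datetime, timedelta, timezone

def metastore_time(epoch_seconds, utc_offset_hours=8):
    """Format a metastore CREATE_TIME (Unix seconds) in a fixed UTC offset (UTC+8 assumed)."""
    tz = timezone(timedelta(hours=utc_offset_hours))
    return datetime.fromtimestamp(epoch_seconds, tz=tz).strftime("%Y-%m-%d %H:%M:%S")

print(metastore_time(1449157379))  # 2015-12-03 23:42:59
```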

```
# storage information
mysql> SELECT sd_id, input_format, output_format, location from sds;
+-------+------------------------------------------+------------------------------------------------------------+--------------------------------------------+
| sd_id | input_format                             | output_format                                              | location                                   |
+-------+------------------------------------------+------------------------------------------------------------+--------------------------------------------+
|     1 | org.apache.hadoop.mapred.TextInputFormat | org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat | hdfs://cluster/user/hive/warehouse/student |
+-------+------------------------------------------+------------------------------------------------------------+--------------------------------------------+
1 row in set (0.00 sec)
```

Reposted from: https://blog.51cto.com/luchunli/1693817
