2019独角兽企业重金招聘Python工程师标准>>>

本文节选自《Netkiller Database 手札》

第 63 章 Apache Hive

目录

63.1. 安装 Apache Hive

63.1.1. MySQL

63.1.2. Hadoop

63.1.3. Hive

63.1.4. 启动 Hive

63.1.5. 访问 Hive

63.2. 管理 Hive

63.2.1. 表管理

63.2.1.1. 创建表

63.2.1.2. 显示表

63.2.1.3. 删除表

63.2.1.4. 查看表结构

63.2.1.5. 为表增加字段

63.2.1.6. 修改表名称

63.2.1.7. 使用已有表结构创建新表

63.2.2. 分区表

63.2.2.1. 创建分区表

63.2.2.2. 显示分区情况

63.2.2.3. 增加分区

63.2.2.4. 向分区表导入数据

63.2.3. 视图管理

63.2.3.1. 创建视图

63.2.3.2. 删除视图

63.2.4. 数据管理

63.2.4.1. 从文本文件导入数据

63.2.4.2. 从其他表查询数据并创建新表

63.2.4.3. 从其他表查询数据然后插入指定表中

63.2.4.4.

63.2.5. HDFS与本地文件系统管理

63.2.5.1. HDFS 目录迁移

63.2.5.2. 导出表数据到本地文件

63.2.5.3.

63.3. HiveQL - Hive查询语言

63.3.1. JOIN 连接查询

63.3.2. 子查询

Hive是基于Hadoop构建的一套数据仓库分析系统,它提供了丰富的SQL查询方式来分析存储在Hadoop 分布式文件系统中的数据。其在Hadoop的架构体系中承担了一个SQL解析的过程,它提供了对外的入口来获取用户的指令然后对指令进行分析,解析出一个MapReduce程序组成可执行计划,并按照该计划生成对应的MapReduce任务提交给Hadoop集群处理,获取最终的结果。

63.1. 安装 Apache Hive

安装 Apache Hive 需要 Hadoop和MySQL,这里假设你已经懂得如何安装Hadoop和MySQL,所以一下将采用Netkiller OSCM一件安装脚本来初始化Hadoop和MySQL,如果需要详细的安装步骤请参考笔者的相关文章。

63.1.1. MySQL

默认情况下, Hive 使用内嵌的 Derby 数据库保存元数据, 通常生产环境会使用 MySQL 来存放 Hive 元数据。

使用下面脚本一键安装MySQL 5.7 安装后会显示mysql的初始密码,是所有初始密码登陆后修改为你的需要密码

curl -s https://raw.githubusercontent.com/oscm/shell/master/database/mysql/5.7/mysql.server.sh | bash2016-02-16T08:22:58.253030Z 1 [Note] A temporary password is generated for root@localhost: sd%%my.Ak7Ma

安装 MySQL JDBC 连接库。

curl -s https://raw.githubusercontent.com/oscm/shell/master/database/mysql/5.7/mysql-connector-java.sh | bash

创建一个 hive 数据库用来存储 Hive 元数据,且数据库访问的用户名和密码都为 hive。

mysql> CREATE DATABASE hive; Query OK, 1 row affected (0.03 sec)

创建用户hive并授权访问hive数据库

mysql> CREATE USER 'hive'@'localhost' IDENTIFIED BY 'hive'; Query OK, 0 rows affected (0.04 sec)mysql> GRANT ALL ON hive.* TO 'hive'@'localhost' IDENTIFIED BY 'hive'; Query OK, 0 rows affected (0.01 sec)mysql> GRANT ALL ON hive.* TO 'hive'@'%' IDENTIFIED BY 'hive'; Query OK, 0 rows affected (0.00 sec)mysql> FLUSH PRIVILEGES; Query OK, 0 rows affected (0.00 sec)mysql> quit; Bye

63.1.2. Hadoop

安装 Hadoop 采用单机模式

curl -s https://raw.githubusercontent.com/oscm/shell/master/distributed/hadoop/hadoop-2.8.0.sh | bash curl -s https://raw.githubusercontent.com/oscm/shell/master/distributed/hadoop/single.sh | bash curl -s https://raw.githubusercontent.com/oscm/shell/master/distributed/hadoop/startup.sh | bash

63.1.3. Hive

可以从 Apache 镜像站点中下载最新稳定版的 apache-hive-2.1.1-bin.tar.gz

cd /usr/local/src wget http://mirrors.hust.edu.cn/apache/hive/stable-2/apache-hive-2.1.1-bin.tar.gztar zxf apache-hive-2.1.1-bin.tar.gz mv apache-hive-2.1.1-bin /srv/apache-hive-2.1.1 ln -s /srv/apache-hive-2.1.1/ /srv/apache-hive chown hadoop:hadoop -R /srv/apache-hive-2.1.1

cat > /srv/apache-hive/conf/hive-env.sh <<'EOF' export JAVA_HOME=/srv/java export HADOOP_HOME=/srv/apache-hadoop export HBASE_HOME=/srv/apache-hbase export HIVE_HOME=/srv/apache-hive export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HIVE_HOME/bin EOFcat >> ~/.bash_profile <<'EOF' export JAVA_HOME=/srv/java export HADOOP_HOME=/srv/apache-hadoop export HBASE_HOME=/srv/apache-hbase export HIVE_HOME=/srv/apache-hive export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HIVE_HOME/bin EOFsource ~/.bash_profile

安装JDBC驱动

[root@localhost apache-hive]# ln -s /usr/share/java/mysql-connector-java.jar /srv/apache-hive/lib/ [root@localhost apache-hive]# ll /srv/apache-hive/lib/mysql-connector-java.jar lrwxrwxrwx 1 root root 40 Jun 29 01:59 /srv/apache-hive/lib/mysql-connector-java.jar -> /usr/share/java/mysql-connector-java.jar

修改 hive-site.xml 配置文件,配置工作目录

<property><name>hive.querylog.location</name><value>/tmp/live/hadoop</value><description>Location of Hive run time structured log file</description></property><property><name>hive.exec.local.scratchdir</name><value>/tmp/hive</value><description>Local scratch space for Hive jobs</description></property><property><name>hive.downloaded.resources.dir</name><value>/tmp/hive/${hive.session.id}_resources</value><description>Temporary local directory for added resources in the remote file system.</description></property><property><name>hive.querylog.location</name><value>/user/hive/log</value><description>Location of Hive run time structured log file</description></property>把默认的 Derby 修改为 MySQL 需要在该文件中配置 MySQL 数据库连接信息。

<property><name>javax.jdo.option.ConnectionURL</name><value>jdbc:derby:;databaseName=metastore_db;create=true</value><description>JDBC connect string for a JDBC metastore.To use SSL to encrypt/authenticate the connection, provide database-specific SSL flag in the connection URL.For example, jdbc:postgresql://myhost/db?ssl=true for postgres database.</description></property><property><name>javax.jdo.option.ConnectionDriverName</name><value>org.apache.derby.jdbc.EmbeddedDriver</value><description>Driver class name for a JDBC metastore</description></property><property><name>javax.jdo.option.ConnectionUserName</name><value>APP</value><description>Username to use against metastore database</description></property><property><name>javax.jdo.option.ConnectionPassword</name><value>mine</value><description>password to use against metastore database</description></property>

将上面配置项 value 改为下面的配置

<property><name>javax.jdo.option.ConnectionURL</name><value>jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true&characterEncoding=UTF-8&useSSL=false</value></property><property><name>javax.jdo.option.ConnectionDriverName</name><value>com.mysql.jdbc.Driver</value></property><property><name>javax.jdo.option.ConnectionUserName</name><value>hive</value></property><property><name>javax.jdo.option.ConnectionPassword</name><value>hive</value></property>

63.1.4. 启动 Hive

启动 Hive 前你必须做两件事,一是创建HDFS目录,二是初始化 MySQL 数据库。

为 Hive 创建 HDFS 工作目录并给它们赋相应的权限。

[root@localhost ~]$ su - hadoop [hadoop@localhost ~]$ /srv/apache-hadoop/bin/hdfs dfs -mkdir -p /user/hive/warehouse [hadoop@localhost ~]$ /srv/apache-hadoop/bin/hdfs dfs -mkdir -p /tmp/hive [hadoop@localhost ~]$ /srv/apache-hadoop/bin/hdfs dfs -chmod g+w /user/hive/warehouse [hadoop@localhost ~]$ /srv/apache-hadoop/bin/hdfs dfs -chmod 777 /tmp/hive

初始化 MySQL 数据库

[hadoop@localhost ~]$ /srv/apache-hive/bin/schematool -dbType mysql -initSchema SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/srv/apache-hive-2.1.1/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/srv/apache-hadoop-2.8.0/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory] Metastore connection URL: jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true&characterEncoding=UTF-8&useSSL=false Metastore Connection Driver : com.mysql.jdbc.Driver Metastore connection User: hive Starting metastore schema initialization to 2.1.0 Initialization script hive-schema-2.1.0.mysql.sql Initialization script completed schemaTool completed

63.1.5. 访问 Hive

启动 Hadoop

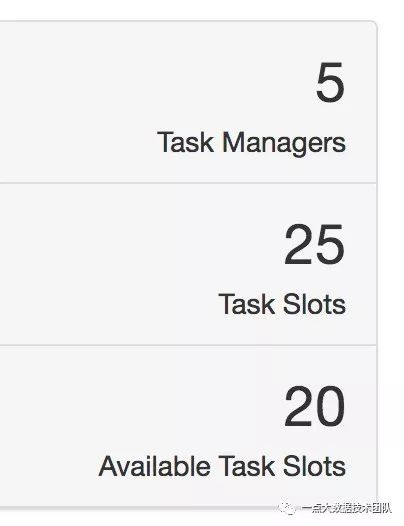

[hadoop@localhost ~]$ /srv/apache-hadoop/sbin/start-all.sh This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh Starting namenodes on [localhost] localhost: starting namenode, logging to /srv/apache-hadoop-2.8.0/logs/hadoop-hadoop-namenode-localhost.localdomain.out localhost: starting datanode, logging to /srv/apache-hadoop-2.8.0/logs/hadoop-hadoop-datanode-localhost.localdomain.out Starting secondary namenodes [0.0.0.0] 0.0.0.0: starting secondarynamenode, logging to /srv/apache-hadoop-2.8.0/logs/hadoop-hadoop-secondarynamenode-localhost.localdomain.out starting yarn daemons starting resourcemanager, logging to /srv/apache-hadoop-2.8.0/logs/yarn-hadoop-resourcemanager-localhost.localdomain.out localhost: starting nodemanager, logging to /srv/apache-hadoop-2.8.0/logs/yarn-hadoop-nodemanager-localhost.localdomain.out

进入 Hive 然后输入 show databases; 测试安装是否正常。

[hadoop@localhost conf]$ /srv/apache-hive/bin/hive SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/srv/apache-hive-2.1.1/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/srv/apache-hadoop-2.8.0/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]Logging initialized using configuration in file:/srv/apache-hive-2.1.1/conf/hive-log4j2.properties Async: true Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases. hive> show databases; OK default Time taken: 0.264 seconds, Fetched: 1 row(s) hive>

至此 Apache Hive 已经安装配置完成!

63.2. 管理 Hive

如果你又任何一种关系型数据库的使用经验,那么你将在这里看到非常熟悉的操作。

63.2.1. 表管理

63.2.1.1. 创建表

CREATE TABLE member (name string, age int, sex int);

hive> CREATE TABLE member (name string, age int, sex int); OK Time taken: 0.687 seconds hive>

63.2.1.2. 显示表

hive> SHOW TABLES; OK test Time taken: 0.041 seconds, Fetched: 1 row(s) hive>

通配符匹配表名称

show tables '*t*';

63.2.1.3. 删除表

hive> DROP TABLE test; OK Time taken: 1.337 seconds hive>

63.2.1.4. 查看表结构

hive> CREATE TABLE member (name string, age int, sex int); OK Time taken: 0.273 secondshive> desc member; OK name string age int sex int Time taken: 0.035 seconds, Fetched: 3 row(s) hive>

63.2.1.5. 为表增加字段

增加一个字段 phone 字符串类型

hive> ALTER TABLE member ADD COLUMNS (phone String); OK Time taken: 0.188 seconds hive> desc member; OK name string age int sex int phone string Time taken: 0.033 seconds, Fetched: 4 row(s)

63.2.1.6. 修改表名称

将 test 表重命名为 vipuser

hive> CREATE TABLE test (name string, age int, sex int); OK Time taken: 0.311 seconds hive> ALTER TABLE test RENAME TO vipuser; OK Time taken: 0.115 seconds hive> desc vipuser; OK name string age int sex int Time taken: 0.032 seconds, Fetched: 3 row(s) hive>

63.2.1.7. 使用已有表结构创建新表

仅仅创建表结构,不会复制数据过来。

hive> CREATE TABLE news_2017 LIKE news; OK Time taken: 0.311 seconds

63.2.2. 分区表

63.2.2.1. 创建分区表

hive> CREATE TABLE invites (foo INT, bar STRING) PARTITIONED BY (ds STRING);

63.2.2.2. 显示分区情况

hive> SHOW PARTITIONS passwd; OK computer=hadoop computer=hbase computer=hive Time taken: 0.056 seconds, Fetched: 3 row(s)

63.2.2.3. 增加分区

hive> alter table member add partition (province='shenzhen');

63.2.2.4. 向分区表导入数据

hive> CREATE TABLE passwd (a string, b string, c string, d string, e string, f string) PARTITIONED BY (computer string) ROW FORMAT DELIMITED FIELDS TERMINATED BY ':'; OK Time taken: 0.323 seconds hive> load data local inpath '/etc/passwd' overwrite into table passwd partition(computer="hive"); Loading data to table default.passwd partition (computer=hive) OK Time taken: 0.499 seconds hive> select * from passwd; OK root x 0 0 root /root hive bin x 1 1 bin /bin hive daemon x 2 2 daemon /sbin hive adm x 3 4 adm /var/adm hive lp x 4 7 lp /var/spool/lpd hive sync x 5 0 sync /sbin hive shutdown x 6 0 shutdown /sbin hive halt x 7 0 halt /sbin hive mail x 8 12 mail /var/spool/mail hive operator x 11 0 operator /root hive games x 12 100 games /usr/games hive ftp x 14 50 FTP User /var/ftp hive nobody x 99 99 Nobody / hive dbus x 81 81 System message bus / hive polkitd x 999 998 User for polkitd / hive avahi x 70 70 Avahi mDNS/DNS-SD Stack /var/run/avahi-daemon hive avahi-autoipd x 170 170 Avahi IPv4LL Stack /var/lib/avahi-autoipd hive postfix x 89 89 /var/spool/postfix hive sshd x 74 74 Privilege-separated SSH /var/empty/sshd hive ntp x 38 38 /etc/ntp hive rpc x 32 32 Rpcbind Daemon /var/lib/rpcbind hive qemu x 107 107 qemu user / hive unbound x 998 996 Unbound DNS resolver /etc/unbound hive rpcuser x 29 29 RPC Service User /var/lib/nfs hive nfsnobody x 65534 65534 Anonymous NFS User /var/lib/nfs hive saslauth x 997 76 "Saslauthd user" /run/saslauthd hive radvd x 75 75 radvd user / hive nagios x 1000 1000 /home/nagios hive apache x 48 48 Apache /usr/share/httpd hive exim x 93 93 /var/spool/exim hive tss x 59 59 Account used by the trousers package to sandbox the tcsd daemon /dev/null hive git x 996 994 /var/opt/gitlab hive gitlab-www x 995 993 /var/opt/gitlab/nginx hive gitlab-redis x 994 992 /var/opt/gitlab/redis hive gitlab-psql x 993 991 /var/opt/gitlab/postgresql hive nginx x 992 990 nginx user /var/cache/nginx hive www x 80 80 Web Application /www hive mysql x 27 27 MySQL Server /var/lib/mysql hive redis x 991 989 Redis Database Server /var/lib/redis hive epmd x 990 988 Erlang Port Mapper Daemon /tmp hive rabbitmq x 989 987 RabbitMQ messaging server /var/lib/rabbitmq hive solr x 1001 1001 Apache Solr /srv/solr hive mongodb x 184 986 MongoDB Database Server /var/lib/mongodb hive test x 1002 1002 /home/test hive sysaccount x 988 985 /home/sysaccount hive systemd-bus-proxy x 987 983 systemd Bus Proxy / hive systemd-network x 986 982 systemd Network Management / hive elasticsearch x 985 980 elasticsearch user /home/elasticsearch hive zabbix x 984 979 Zabbix Monitoring System /var/lib/zabbix hive mysqlrouter x 983 978 MySQL Router /var/lib/mysqlrouter hive hadoop x 1003 1003 /home/hadoop hive Time taken: 0.118 seconds, Fetched: 51 row(s)hive> SHOW PARTITIONS passwd; OK computer=hive Time taken: 0.058 seconds, Fetched: 1 row(s)

63.2.3. 视图管理

63.2.3.1. 创建视图

hive> CREATE VIEW v_test AS SELECT name,age FROM member where age>20; hive> select * from v_test;

63.2.3.2. 删除视图

hive> drop view test; OK Time taken: 0.276 seconds

判断视图是否存在

hive> DROP VIEW IF EXISTS v_test; OK Time taken: 0.495 seconds

63.2.4. 数据管理

63.2.4.1. 从文本文件导入数据

首先创建一个文本文件,如下:

[root@localhost ~]# cat /tmp/hive.txt 1 2 3 2 3 4 3 4 5 6 7 8

hive> CREATE TABLE test (a int, b int, c int) ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'; OK Time taken: 0.294 seconds hive> LOAD DATA LOCAL INPATH '/tmp/hive.txt' OVERWRITE INTO TABLE test; Loading data to table default.test OK Time taken: 0.541 seconds hive> select * from test; OK 1 2 3 2 3 4 3 4 5 6 7 8 Time taken: 0.952 seconds, Fetched: 5 row(s)

63.2.4.2. 从其他表查询数据并创建新表

hive> CREATE TABLE mytable AS SELECT * FROM anytable;

63.2.4.3. 从其他表查询数据然后插入指定表中

INSERT OVERWRITE TABLE mytable SELECT * FROM other ;

63.2.4.4.

63.2.5. HDFS与本地文件系统管理

63.2.5.1. HDFS 目录迁移

[hadoop@localhost ~]$ hdfs dfs -ls /user/hive/warehouse Found 3 items drwxrwxr-x - hadoop supergroup 0 2017-06-29 03:36 /user/hive/warehouse/member drwxrwxr-x - hadoop supergroup 0 2017-06-29 03:32 /user/hive/warehouse/test drwxrwxr-x - hadoop supergroup 0 2017-06-29 03:41 /user/hive/warehouse/vipuser[hadoop@localhost ~]$ hdfs dfs -cp /user/hive/warehouse/vipuser /user/hive/warehouse/vipuser2

63.2.5.2. 导出表数据到本地文件

hive> INSERT OVERWRITE LOCAL DIRECTORY '/tmp/test' SELECT * FROM test;hive> INSERT OVERWRITE LOCAL DIRECTORY '/tmp/test' SELECT * FROM member; WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases. Query ID = hadoop_20170629040540_ddeda146-efed-44c4-bb20-a6453c21cc8e Total jobs = 1 Launching Job 1 out of 1 Number of reduce tasks is set to 0 since there's no reduce operator Starting Job = job_1498716998098_0001, Tracking URL = http://localhost:8088/proxy/application_1498716998098_0001/ Kill Command = /srv/apache-hadoop/bin/hadoop job -kill job_1498716998098_0001 Hadoop job information for Stage-1: number of mappers: 0; number of reducers: 0 2017-06-29 04:05:49,221 Stage-1 map = 0%, reduce = 0% Ended Job = job_1498716998098_0001 Moving data to local directory /tmp/test MapReduce Jobs Launched: Stage-Stage-1: HDFS Read: 0 HDFS Write: 0 SUCCESS Total MapReduce CPU Time Spent: 0 msec OK Time taken: 10.54 seconds

63.3. HiveQL - Hive查询语言

HiveQL 与 SQL 极其相似,SQL语法尽管尝试。

63.3.1. JOIN 连接查询

hive> SELECT t1.a,t1.b,t2.a,t2.b> FROM table1 t1 JOIN table2 t2 on t1.a=t2.a> WHERE t1.a>10;

Donations (打赏)

We accept PayPal through:

https://www.paypal.me/netkiller

Wechat (微信) / Alipay (支付宝) 打赏:

http://www.netkiller.cn/home/donations.html

作者相关文章:

Apache Hbase 快速入门

Spring cloud 之 Feign Client

Spring Cloud Netflix

Spring Cloud Config

Spring boot with Schedule (启用/禁用)

Spring boot with HTTPS SSL

Spring boot with Git version

Spring boot with Docker

Spring boot with Service

Spring boot with PostgreSQL

Struts2 S2-046, S2-045 Firewall(漏洞防火墙)

数据库与图片完美解决方案

数据库进程间通信解决方案

数据库进程间通信解决方案之MQ

Linux 系统安全与优化配置

Tomcat 安全配置与性能优化

Linux 系统与数据库安全

转载请注明出处与作者声明,扫描二维码关注作者公众好,不定期更新文章