如何使用TensorFlow Eager执行训练自己的FaceID ConvNet

by Thalles Silva

由Thalles Silva

Faces are everywhere — from photos and videos on social media websites, to consumer security applications like the iPhone Xs FaceID.

人脸无处不在-从社交媒体网站上的照片和视频到iPhone Xs FaceID等消费者安全应用程序。

In this context, computer vision, applied to faces, has many subareas. These include face detection, recognition, and tracking. Moreover, with the advance of Deep Learning, these solutions are getting more mature for commercial applications.

在这种情况下,应用于脸部的计算机视觉具有许多子区域。 这些包括面部检测,识别和跟踪。 此外,随着深度学习的发展,这些解决方案在商业应用中变得越来越成熟。

This post shows you, piece-by-piece, how to design and train your own Convolutional Neural Network (CNN) for face identification. Here, we propose a Tensorflow Eager implementation of Siamese DenseNets.

这篇文章逐步向您展示了如何设计和训练自己的卷积神经网络(CNN)进行面部识别。 在这里,我们提出了暹罗DenseNets的Tensorflow Eager实现。

You can find the complete code here.

您可以在此处找到完整的代码。

暹罗密集网 (Siamese DenseNets)

A Siamese CNN is a class of neural nets (NNs) that contains two or more identical network instances. The term identical refers to the fact that the two NNs share the same design configuration and, most important, their weights.

暹罗CNN是一类神经网络(NN),其中包含两个或多个相同的网络实例。 术语“相同”指两个NN共享相同的设计配置,最重要的是它们的权重。

To understand DenseNets, we need to focus on two principal components of its architecture. These are the dense block and the transition layer.

要了解DenseNet,我们需要专注于其体系结构的两个主要组成部分。 这些是密集块和过渡层 。

In short, a DenseNet is a stack of dense blocks followed by transition layers. A block consists of a series of units. Every unit packs two convolutions. Each convolution is preceded by batch normalization (BN) and rectified linear units (ReLU) activations.

简而言之,DenseNet是密集块的堆栈,后面是过渡层。 一个块由一系列单元组成。 每个单元包含两个卷积。 每次卷积之前都进行批量归一化 (BN)和整流线性单位 (ReLU)激活。

Each unit outputs a fixed number of feature vectors. This number is controlled by a single parameter — the growth rate. Essentially, it manages how much new information a given unit allows to pass through to the next one.

每个单元输出固定数量的特征向量。 此数字由一个参数控制- 增长率 。 本质上,它管理给定单元允许传递给下一个单元的新信息数量。

Similarly, transition layers are simple components. They are designed to down-sample feature vectors passing through the network. Each transition layer consists of a BN operation, followed by a 1x1 convolution plus a 2x2average pooling.

同样,过渡层是简单的组件。 它们旨在对通过网络的特征向量进行下采样。 每个过渡层都包含一个BN操作,然后是一个1x1卷积加上2x2平均池。

The big difference from other regular CNNs is that each unit within a dense block is connected to every other unit before it. Within a block, the nth unit receives as input the feature-vectors learned by the n-1, n-2, … all the way down to the first unit in the pipeline. Put differently, the DenseNets design allows for high-level feature sharing among its units.

与其他常规CNN的最大区别在于,密集块中的每个单元在连接之前都与其他每个单元相连。 在一个块内,第n个单元接收由n-1 , n-2 …一直到流水线中第一个单元学习的特征向量作为输入。 换句话说,DenseNets设计允许在其各个单元之间进行高级功能共享。

When compared to ResNets, DenseNets have feature reusing by concatenation instead of summation. As a consequence, DenseNets tend to be more compact in the number of parameters than ResNets. Intuitively, every feature-vector learned by any given DenseNet unit is reused by all the following units within a block. This minimizes the possibility of different layers of the network learning redundant features.

与ResNets相比,DenseNets具有通过级联而不是求和重用的功能。 结果,与ResNets相比,DenseNets的参数数量往往更紧凑。 直观地,任何给定DenseNet单元学习到的每个特征向量都会被一个块中的所有后续单元重用。 这使网络不同层学习冗余功能的可能性降到最低。

Both ResNets and DenseNets use the popular bottleneck layer design. It consists of 2 components:

ResNets和DenseNets都使用流行的瓶颈层设计。 它包含2个组件:

a 1x1 convolution to reduce the spatial dimensions of features

1x1卷积以减小要素的空间尺寸

a wider convolution, in this case a 3x3 operation for feature learning

更广泛的卷积,在这种情况下为特征学习的3x3操作

Regarding parameter efficiency and floating point operations per second (FLOPs), DenseNets surpass ResNets by a significant margin. DenseNets not only achieve smaller error rates on ImageNets, but also require fewer parameters and fewer FLOPs than ResNets.

关于参数效率和每秒浮点运算(FLOP),DenseNets大大超过了ResNets。 与ResNets相比,DenseNets不仅在ImageNets上实现了较小的错误率,而且还需要更少的参数和更少的FLOP。

Another trick that enhances model compactness is the compression factor. This procedure takes place on the transition layers and aims to reduce the number of feature vectors that go into the next dense block. DenseNets implement this mechanism by setting a factor, θ, between 0 and 1. θ controls how many of the current features are allowed to pass through to the following block. This technique allows DenseNets even more reduction in the number of feature-vectors, and to be very parameter efficient.

增强模型紧凑性的另一个技巧是压缩系数。 此过程在过渡层上进行,目的是减少进入下一个密集块的特征向量的数量。 DenseNets通过在0到1之间设置一个因子θ来实现这种机制。θ控制允许多少当前特征传递到下一个块。 这种技术使DenseNets可以进一步减少特征向量的数量,并且具有很高的参数效率。

学习面Kong相似度 (Learning face similarities)

This is not a classification task — we do not want to categorize images into classes. Instead, we want to learn a representation that can individually describe each input.

这不是一个分类任务-我们不希望将图像归类为类。 相反,我们想学习一种可以单独描述每个输入的表示形式。

Specifically, we want to find similarities between input images. To do that, we need a representation capable of expressing a relationship between two comparable things.

具体来说,我们想找到输入图像之间的相似性。 为此,我们需要一个能够表达两个可比较事物之间关系的表示。

In practice, we want to learn embedding vectors to represent relationships among people’s face images. We want vectors with the following properties:

在实践中,我们想学习嵌入向量来表示人脸图像之间的关系。 我们需要具有以下属性的向量:

If two images (X1 and X2) are similar, we want the distance between the 2 output vectors to be as small as possible

如果两个图像( X1和X2 )相似,我们希望两个输出矢量之间的距离尽可能小

If X1 and X2 are not similar, we want this distance to be as large as we can make it

如果X1和X2 不相似,我们希望该距离尽可能大

Below we represent the whole Siamese DenseNets framework for learning face embeddings. The next sections go over the specific building blocks of this architecture.

在下面,我们代表了用于学习人脸嵌入的整个Siamese DenseNets 框架 。 下一节将介绍此体系结构的特定构建块。

对比损失 (The contrastive loss)

To understand how the contrastive loss works, the first thing to keep in mind is that it works on pairs of images.

要了解对比损失是如何工作的,首先要记住的是它对成对的图像有效 。

Take the two images above as an example. At a given point, we give the pair (X1, X2) to the system with the following properties:

以上面的两个图像为例。 在给定的点上,我们将具有以下属性的对( X1 , X2 )赋予系统:

if X1 is considered to be similar to X2 we give it a label of 0

如果X1被认为与X2类似,我们给它加上标签0

otherwise X1 gets a label of 1

否则X1的标签为1

Now, let’s define Gw as a parametric function — a neural network. Its role is very simple, Gw maps high-resolution input to low-resolution outputs.

现在,让我们将Gw定义为参数函数-神经网络。 Gw的作用非常简单, Gw将高分辨率输入映射到低分辨率输出。

We want to learn a parameterized distance function Dw, between the inputs X1 and X2. This is the Euclidean distance between the outputs of Gw.

我们想学习输入X1和X2之间的参数化距离函数Dw 。 这是Gw的输出之间的欧式距离。

Note that m is the margin. It defines the radius around Gw. It controls how dissimilar images contribute to the total loss function. That is, a pair of images (X1, X2) from different people (class) only contribute to the loss if the distance between them is within the margin — if (m -Dw) > 0.

注意, m是余量 。 它定义了Gw周围的半径。 它控制不同的图像如何影响总损失函数。 也就是说,来自不同人(类)的一对图像( X1 , X2 )仅在它们之间的距离在边距之内(如果( m -Dw)> 0时)才造成损失。

In other words, we want to optimize the system such that:

换句话说,我们要优化系统,以便:

If the pair of images is similar (label 0) we minimize the distance function Dw.

如果这对图像相似(标签0),我们将距离函数Dw最小化。

If the pair of images is not similar (label 1), we increase the distance function Dw.

如果这对图像不相似(标签1),则我们增加距离函数Dw 。

The final loss function and its implementation in Tensorflow are defined as follows:

Tensorflow中的最终损失函数及其实现定义如下:

It is important to note how we calculate the distance in line 2. Since this loss function has to be differentiable with respect to the model’s weights, we need to ensure that negative side effects will not take place.

重要的是要注意我们如何计算第2行中的距离。由于此损失函数必须相对于模型的权重是可微的,因此我们需要确保不会发生负面影响。

Note that in the square root part of the equation, we add a small epsilon before computing the square root. And the reason is very subtle. In the case where the content inside the square root is zero, the square root of 0 is also 0 — which is fine.

请注意,在方程的平方根部分中,我们在计算平方根之前添加了一个小的epsilon。 原因很微妙。 在平方根内的内容为零的情况下,平方根0也为0-很好。

Yet, if the content is 0 and we are calculating the gradients, the derivative of the square root would have a divide by 0 operation. That is bad.

但是,如果内容为0,并且我们正在计算梯度, 则平方根的导数将被0除 。 那很不好。

As a take-away, always make sure the routines you are using are computationally safe.

作为外卖, 始终确保您使用的例程在计算上是安全的。

Moreover, when minimizing the contrastive loss using Stochastic Gradient Descent (SGD), there are two possible scenarios.

此外,当使用随机梯度下降(SGD)最小化对比损失时,有两种可能的情况。

First, if the pair of input samples (X1, X2) is of the same class (label 0), the second part of the equation is zeroed-out. In this situation, we only minimize the distance between the two images of the same class. In practice, we are pushing the two representations to be as close to each other as possible.

首先,如果一对输入样本( X1 , X2 )属于同一类别(标签0),则等式的第二部分归零。 在这种情况下,我们只会最小化同一类的两个图像之间的距离。 实际上,我们正在推动两种表示形式尽可能地接近。

In the second case, if the input pair (X1, X2) is not from the same class (label 1), the first part of the equation is canceled. Then, in the second term of the summation, two situations may occur.

在第二种情况下,如果输入对( X1 , X2 )不是同一类(标签1),则将等式的第一部分取消。 然后,在求和的第二项中,可能会出现两种情况。

First, if the distance between the two image pairs X1 and X2 is greater than m, nothing happens. Note that if Dw >; m, then the difference between them will also be negative. As a result, the derivative of the remaining function will be 0 — no gradient equals no learning.

首先,如果两个图像对X1和X2之间的距离大于m ,则什么也不会发生。 请注意,如果Dw> ; m,那么它们之间的差异也将为负。 结果,剩余函数的导数将为0-没有梯度等于没有学习。

However, if the distance Dw between the input pair X1 and X2 is less than m, the opposite situation occurs. Now the gradient signal will act as a repulsive force. In practice, it will push the two representations farther away from one another.

但是,如果输入对X1和X2之间的距离Dw小于m ,则会发生相反的情况。 现在,梯度信号将充当排斥力。 在实践中,它将使两种表示形式彼此远离。

数据集 (Dataset)

To train a Siamese CNN for face similarity we used the popular Large-scale CelebFaces Attributes (CelebA) dataset. It contains more than 200k celebrity images from 10,177 different identities. To ease the data pre-processing, we chose the aligned and cropped faces part of dataset. The following picture shows some of the dataset samples.

为了训练暹罗CNN的人脸相似性,我们使用了流行的大规模CelebFaces属性(CelebA)数据集 。 它包含来自10,177个不同身份的200,000多张名人图像。 为了简化数据预处理,我们选择了数据集的对齐和裁剪的面部部分。 下图显示了一些数据集样本。

To use the contrastive loss, we need to build the dataset in a very specific way. Basically, we need to build a dataset that contains a lot of face image pairs. Some of them from the same people, some of them from different ones.

要使用对比损失,我们需要以非常特定的方式构建数据集。 基本上,我们需要构建一个包含很多面部图像对的数据集。 其中一些人来自同一个人,其中一些人来自不同的人。

To put it simply, given an input image Xi we need to find a set of sample S = {X1, X2,…,Xj} such that Xi and Xj belong to the same class. Put it another way, Xi and Xj are face images of the same person.

简而言之,给定输入图像Xi,我们需要找到一组样本S = {X1,X2,…,Xj} ,使Xi和Xj属于同一类。 换句话说, Xi和Xj是同一个人的面部图像。

In the same way, we need to find a set of pictures D = {S1, S2,…,Sj} such that Sj does NOT belong to the same class as Xi.

同样,我们需要找到一组图片D = {S1,S2,…,Sj} ,以使Sj与Xi不属于同一类。

Finally, we combine the input image Xi with samples from both similar and dissimilar sets. For every pair (Xi, Xj) if Xj belongs to the set of similar samples S, we assign a label of 0 to the pair, otherwise, it gets a label of 1.

最后,我们将输入图像Xi与来自相似和不相似集合的样本进行组合。 如果Xj属于相似样本S的集合,则对于每对( Xi,Xj ),我们给该对分配一个标签0,否则它的标签为1。

训练细节 (Training Details)

We used the DenseNet-121 design as described in the original paper. The growth rate parameter (k) was set to 32. Instead of the 1000D fully-connected layer at the end, we learn embedding vectors of size 32.

我们使用了原始论文中所述的DenseNet-121设计。 增长率参数(k)设置为32。而不是最后的1000D全连接层,我们学习了大小为32的嵌入向量。

To optimize the model parameters, we used the Adam Optimizer with a cyclical learning rate schedule. Inspired by fast.ai super-convergence, we fixed the beta2 Adam parameter as 0.99 and applied a cycle policy to beta1.

为了优化模型参数,我们将Adam Optimizer与周期性学习率计划一起使用。 受fast.ai超收敛启发,我们将beta2 Adam参数固定为0.99,并将循环策略应用于beta1 。

In this way, both parameters:— the learning rate and beta1 — vary cyclically between a maximum and minimum value. Simply put, while the learning rate increases the beta1 decreases in a fixed interval.

这样,两个参数:—学习率和beta1 — 最大值和最小值之间周期性变化。 简而言之,虽然学习率增加,但是beta1在固定的时间间隔内减少。

结果 (Results)

The results are very good.

结果非常好。

For these examples, a single threshold of 1 would correctly classify most of the samples. Also, the network is invariant to many transformations of the input images. These transformations include variations of brightness and contrast, the size of the face, pose, and alignment. It is invariant to small changes in peoples looks such as age, haircut, hats, and glasses.

对于这些示例,单个阈值1将正确分类大多数样本。 而且,网络对于输入图像的许多变换是不变的。 这些转换包括亮度和对比度,面部大小,姿势和对齐方式的变化。 外观,年龄,理发,帽子和眼镜等人的外观发生细微变化都是不变的。

The similarity value below is smaller for similar faces and higher for dissimilar ones. Labels of 0 means that the pair of images are from the same person.

下面的相似度值对于相似的面Kong较小,而对于相似的面Kong则较高。 标签为0表示这对图像来自同一个人。

Thanks for reading!

谢谢阅读!

有关深度学习的更多有趣内容,请查看 (For more cool stuff on deep learning, check out)

Dive head first into advanced GANs: exploring self-attention and spectral normLately, Generative Models are drawing a lot of attention. Much of that comes from Generative Adversarial Networks…medium.freecodecamp.orgDiving into Deep Convolutional Semantic Segmentation Networks and Deeplab_V3Deep Convolutional Neural Networks (DCNNs) have achieved remarkable success in various Computer Vision applications…medium.freecodecamp.org

首先深入研究高级GAN:探索自我注意和频谱规范 最近,生成模型引起了很多关注。 其中很大一部分来自剖成对抗性网络...... medium.freecodecamp.org 潜入深卷积语义分割网络和Deeplab_V3 深卷积神经网络(DCNNs)已经实现在不同的计算机视觉应用了显着成效... medium.freecodecamp.org

翻译自: https://www.freecodecamp.org/news/how-to-train-your-own-faceid-cnn-using-tensorflow-eager-execution-6905afe4fd5a/

相关文章:

jquery判断一个元素是否为某元素的子元素

$(node).click(function(){if($(this).parents(.aa).length > 0){//是aa类下的子节点}else{//不是aa类下的子节点} });在判断点击body空白处隐藏弹出框时用到转载于:https://www.cnblogs.com/qdog/p/7067909.html

Sublime Text 3 (含:配置 C# 编译环境)

Sublime Text 3http://www.sublimetext.com/3http://www.sublimetext.com/3dev1. 关闭自动更新 菜单:Preferences->Settings User,打开User配置文档,在大括号内加入(或更改): "update_check&q…

小程序仿安卓动画滑动效果滑动动画效果实现

微信小程序开发交流qq群 173683895 承接微信小程序开发。扫码加微信。 效果图: 源码 var start_clientY; //记录当前滑动开始的值 var end_clientY; //记录当前滑动结束的值 var animation wx.createAnimation({duration: 400 }); //初始化动画var history_dis…

react中使用scss_我如何将CSS模块和SCSS集成到我的React应用程序中

react中使用scssby Max Goh由Max Goh 我如何将CSS模块和SCSS集成到我的React应用程序中 (How I integrated CSS Modules with SCSS into my React application) I recently started on an Isomorphic React project. I wanted to use this opportunity to utilize tools that …

-bash:syntax error near unexpected token '('

在Xshell5中编写int main(int argc,char** argv)时, 出现-bash:syntax error near unexpected token ( ; 可是我是按照Linux语句编写的,其他代码没有出错; 检查发现, Xshell5对应的Linux版本是Linux5,在Li…

iOS手机 相册 相机(Picker Write)

把图片写到相册UIImageWriteToSavedPhotosAlbum(<#UIImage *image#>, nil, nil, nil); ————————————————————————————从相册,相机获取图像设置代理《UINavigationControllerDelegate, UIImagePickerControllerDelegate》 #pragm…

php删除指定对象的属性及属性值

微信小程序开发交流qq群 173683895 承接微信小程序开发。扫码加微信。 unset($address[/Api/User/addAddress]); 删除了 address 对象的 /Api/User/addAddress 属性

前端分离的前端开发工具_使我成为前端开发人员工作的工具和资源

前端分离的前端开发工具Learning front-end development can be a bit overwhelming at times. There are so many resources and tools, and so little time. What should you pick? And what should you focus on?有时,学习前端开发可能会有些困难。 资源和工具…

C# 开启及停止进程

1.本篇内容转发自http://www.cnblogs.com/gaoyuchuanIT/articles/2946314.html 2. 首先在程序中引用: System.Diagnostics; 3. 开启进程: /// <summary> /// 开启进程 /// </summary> /// <param name"aProPath&quo…

COJN 0575 800601滑雪

800601滑雪难度级别:B; 运行时间限制:1000ms; 运行空间限制:51200KB; 代码长度限制:2000000B 试题描述Michael喜欢滑雪百这并不奇怪, 因为滑雪的确很刺激。可是为了获得速度…

JS删除数组指定下标并添加到数组开头

微信小程序开发交流qq群 173683895 承接微信小程序开发。扫码加微信。 代码 let id e.currentTarget.dataset.idlet arrays ;let items this.data.itemsfor (let i 0; i < this.data.items.length; i) {if (id this.data.items[i].id) {arrays items.splice(i, 1)i…

scala akka_如何对Scala和Akka HTTP应用程序进行Docker化-简单的方法

scala akkaby Miguel Lopez由Miguel Lopez 如何对Scala和Akka HTTP应用程序进行Docker化-简单的方法 (How to Dockerise a Scala and Akka HTTP Application — the easy way) Using Docker is a given nowadays. In this tutorial we will how to learn to dockerise our Sca…

Freemarker详细解释

A概念 最经常使用的概念1、 scalars:存储单值字符串:简单文本由单或双引號括起来。数字:直接使用数值。日期:通常从数据模型获得布尔值:true或false,通常在<#if …>标记中使用2、 hashes:…

洛谷P1057 传球游戏(记忆化搜索)

点我进入题目题目大意:n个小孩围一圈传球,每个人可以给左边的人或右边的人传球,1号小孩开始,一共传m次,请问有多少种可能的路径使球回到1号小孩。 输入输出:输入n,m,输出路径的数量。…

微信小程序 自定义导航栏,只保留右上角胶囊按钮

微信小程序开发交流qq群 173683895 承接微信小程序开发。扫码加微信。 navigationStyle 导航栏样式,仅支持以下值:default 默认样式custom 自定义导航栏,只保留右上角胶囊按钮 在 app.json 的 window 加上 "navigationStyle":…

azure多功能成像好用吗_如何使用Azure功能处理高吞吐量消息

azure多功能成像好用吗Authored with Steef-Jan Wiggers, Azure MVP.由Azure MVP Steef-Jan Wiggers撰写。 With Microsoft Azure, customers will push all types of workloads to its services. Workloads are ranging from datasets for Machine Learning purposes to a la…

document.all使用

document.all 一个. document.all它是在页面中的所有元素的集合。例如: document.all(0)一个元素 二. document.all能够推断浏览器是否是IE if(document.all) { alert("is IE!"); } 三. 也能够通过给某个元素设置id属性(id…

微信小程序动画无限循环 掉花

微信小程序开发交流qq群 173683895 承接微信小程序开发。扫码加微信。 动画效果 源码 <!-- 动画 --><block wx:if"{{donghua}}"><view classdonghua><image bindtaphua styleleft:{{left1}}px animation"{{animationData1}}" clas…

程序员远程办公_如何从办公室变成远程程序员

程序员远程办公by James Quinlan詹姆斯昆兰(James Quinlan) My name is James, and I’m a Software Engineer at a company called Yesware, based in Boston. Yesware is the fourth job I’ve had in which I’m paid to write code, but it’s the third time now that I’…

从头学起androidlt;AutoCompleteTextView文章提示文本框.十九.gt;

文章提示可以很好的帮助用户输入信息,以方便。在Android它也设置有类似特征,而要实现这个功能需要依靠android.widget.AutoCompleteTextView完毕,此类的继承结构例如以下: java.lang.Object↳ android.view.View↳ android.widget…

微信小程序动态设置 tabBar

微信小程序开发交流qq群 173683895 承接微信小程序开发。扫码加微信。 使用微信提供的API wx.setTabBarItem(Object object) 动态设置 tabBar 某一项的内容 参数 Object object 属性类型默认值必填说明indexnumber 是tabBar 的哪一项,从左边算起textstring 否…

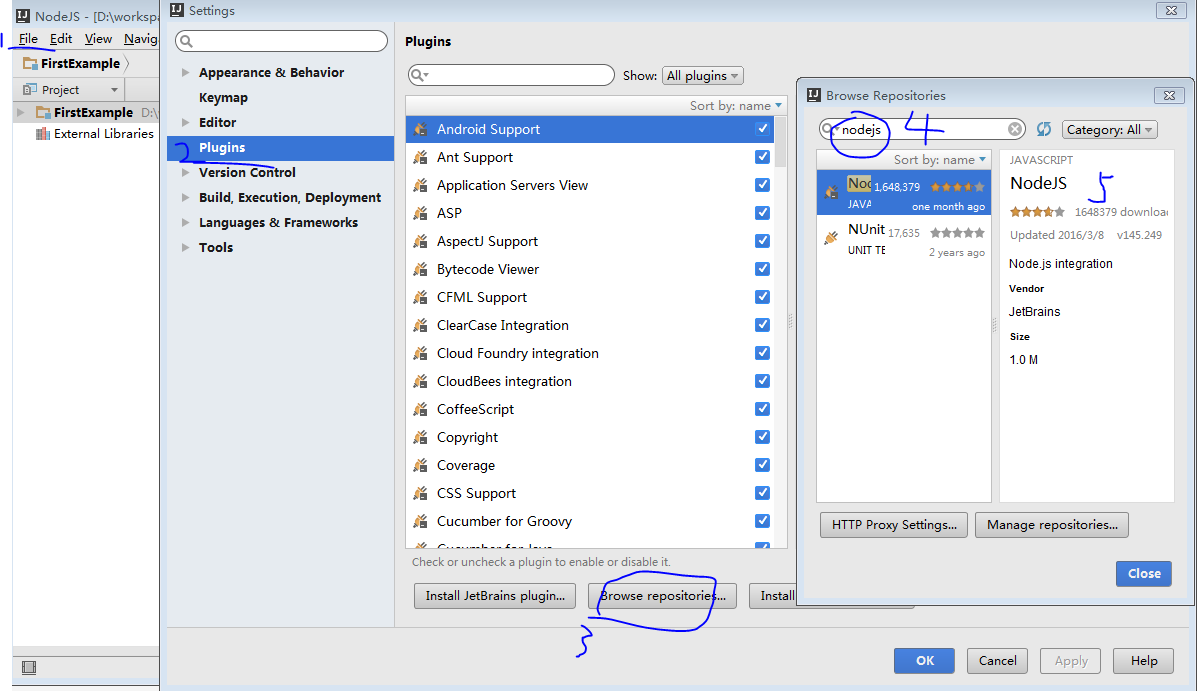

NodeJS入门--环境搭建 IntelliJ IDEA

NodeJS入门–环境搭建 IntelliJ IDEA 本人也刚开始学习NodeJS,所以以此做个笔记,欢迎大家提出意见。 1、首先 下载安装NodeJS,下载安装IntelliJ IDEA2、接下来我们详细介绍在IDEA中配置NodeJS 默认安装好了IDEA,在IDEA的file ->…

如何使用React.js和Heroku快速实现从想法到URL的转变

by Tom Schweers由汤姆史威士(Tom Schweers) 如何使用React.js和Heroku快速实现从想法到URL的转变 (How to go from idea to URL quickly with React.js and Heroku) When I was first starting out as a developer, the one thing that I wanted to do was get a web applica…

F - Count the Colors - zoj 1610(区间覆盖)

有一块很长的画布,现在想在这块画布上画一些颜色,不过后面画的颜色会把前面画的颜色覆盖掉,现在想知道画完后这块画布的颜色分布,比如 1号颜色有几块,2号颜色有几块。。。。*****************************************…

小程序弹窗并移动放大图片的动画效果

微信小程序开发交流qq群 173683895 承接微信小程序开发。扫码加微信。 效果图 触发条件 <block wx:if{{bg_hui_show}}> <view classbg_hui catchtaphide_bg_hui></view> <image classanimation animationData1 bindtapto_hed mode"widthFix&quo…

代码片段管理工具_VS代码片段:提高编码效率的最强大工具

代码片段管理工具by Sam Williams通过山姆威廉姆斯 VS代码片段:提高编码效率的最强大工具 (VS Code snippets: the most powerful tool to boost your coding productivity) 用更少的按键编写更多的代码 (Write more code with fewer keystrokes) Everyone wants t…

我的C++笔记(数据的共享与保护)

*数据的共享与保护: * 1.作用域: * 作用域是一个标识符在程序正文中有效的区域。C中标识符的作用域有函数原型作用域、局部作用域(块作用域)、类作用域和命名空间作用域。 * (1).函数原型作用域: * 函数原型作用域是C中最小的作用域ÿ…

最新Java中Date类型详解

一、Date类型的初始化 1、 Date(int year, int month, int date); 直接写入年份是得不到正确的结果的。 因为java中Date是从1900年开始算的,所以前面的第一个参数只要填入从1900年后过了多少年就是你想要得到的年份。 月需要减1,日可以直接插入。 这种方…

ES6 常用的特性整理

微信小程序开发交流qq群 173683895 承接微信小程序开发。扫码加微信。 1.默认参数 2.模板对象-反引号 3.多行字符串-反引号 4.解构赋值-对象,数组 5.增强的对象字面量- 直接给对象里面的属性赋值给变量 6.给对象的属性赋值的时候可以直接给一个参数…

crontab 最小间隔_今天我间隔了:如何找到不在数组中的最小数字

crontab 最小间隔by Marin Abernethy通过Marin Abernethy 今天我间隔了:如何找到不在数组中的最小数字 (Today I Spaced: how to find the smallest number that is not in the array) TIS在我的第一次技术采访中。 这是我学到的。 (TIS in my first technical int…